GSoC_2023

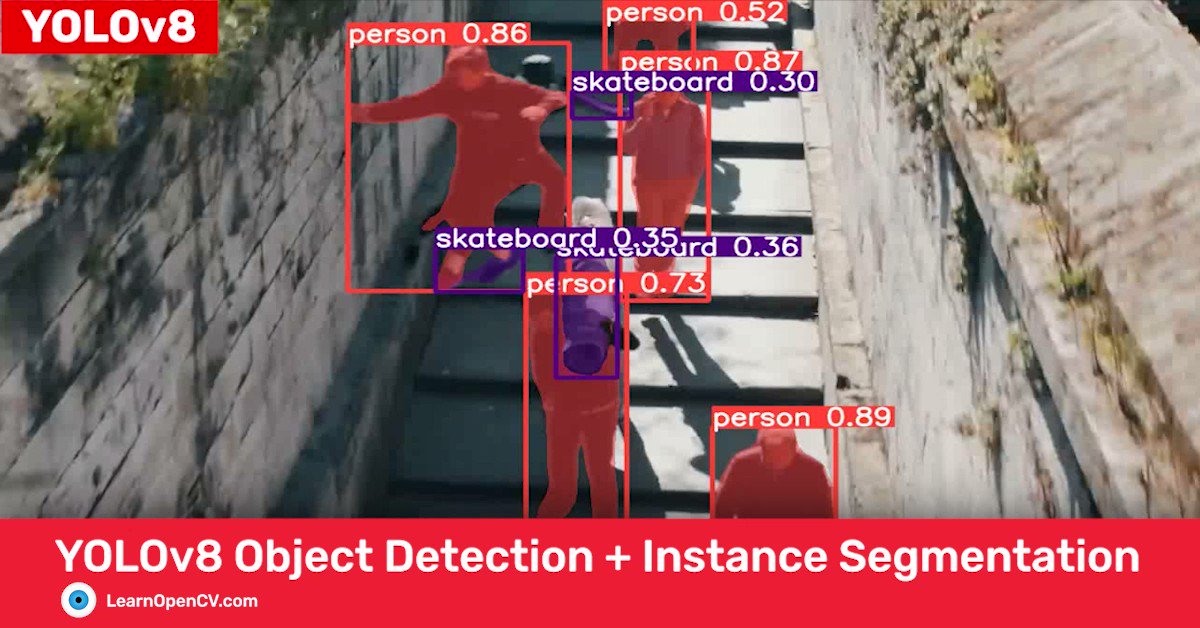

Example use of computer vision:

- Parent of this page

- Last year's idea page

- Merged Pull Requests from the previous GSoC programs: opencv, opencv_contrib and opencv_zoo

- Jump to Project Idea List

Contributor + Mentor + Project Discussion List

- 🚧 TBD Spreadsheet of projects link

| Contributor | Title | Mentors | Passed |
|---|---|---|---|
| Yuhang Wang | Point Cloud Compression | Wanli Zhong | |
| Likhit Talasila | Leveraging OpenCV with GPT | Gary Bradski, Douglas B Lee | |
| Joshua Ahn | A Pipeline for NeRF Experimentation and Visualization | Gary Bradski, Douglas B Lee | |
| Pengyu Liu | Realtime Object Tracking Models | Zihao Mu | |
| Aser Atawya | Lightweight Optical Flow Model | Yuantao Feng | |
| Jose Rios | Realtime Object Tracking Models | ViKtor Bebnev | |
| Tianyu Yang | G-API: A Complete Python Tutorial | Dmitry Matveev | |
| Ningyu Zhu | Simple Triangle Rendering | Rostislav Vasilikhin | |
| Dmitrij Pesztov | Point Cloud Compression | Shiqi Yu, Jia Wu, Wanli Zhong | |
| Linfei Pan | Multi-camera Calibration Part 2 | Maksym Ivashechkin, Jean-Yves Bouguet | |
| Ben Kang | Realtime Object Tracking Models | Zihao Mu | |
| Hamsaa Sayeekrishnan | G-API: Implement Custom Stream Sources in Python | Anatoliy Talamanov | |

| Date (2023) | Description | Comment |
|---|---|---|
| January 23 | Organization Applications Open | 👍 |
| February 7 | Organization Application Deadline | 👍 |
| February 22 | Organizations Announced | 💯 ! |
| March 20 - April 4 | Contributors' Application Period | 👍 |
| April 27 | OpenCV Slot Request | 👍 |
| May 4 | Accepted GSoC contributor projects announced | 👍 |
| May 4 - May 28 | Community Bonding | 👍 |
| May 29 - July 10 | Coding (Phase 1) | 👍 |
| July 10 | Phase 1 Evaluations start | 👍 |
| July 14 | Phase 1 Evaluations deadline | 👍 |
| July 14 - August 21 | Coding (Phase 2) | |
| August 21 - August 28 | Contributors Submit Code and Final Evaluations | |
| August 28 - September 4 | Mentors Submit Final Evaluations | |
| September 5 | Initial Results Announced | |
| September 4 - November 6 | Extended coding phase | |
| November 6 | Deadline for GSoC contributors with extended timeline to submit their work | |
| November 13 | Deadline for Mentors to submit evaluations for GSoC contributors with extended timeline | |

California switches PST->PDT (Pacific Standard->Pacific Daylight) on Sun, Mar 12 at 2:00am

- GSoC Home Page

- OpenCV Project Ideas List

- OpenCV Home Site

- OpenCV Wiki

- OpenCV Forum, Questions and Answers

- How to do a pull request/How to Contribute Code

- Source Code can be found at GitHub/opencv and GitHub/opencv_contrib

- Developer meeting notes

- Mentor only list

- Contributor+Mentor Mailing List

- IRC Channel: #opencv on freenode

- Slack: https://open-cv.slack.com

Mailing list to discuss: opencv-gsoc-2023 mailing list

- GPT with OpenCV

- NeRFs

- FP16 and INT8 for DNN

- Point Cloud Compression

- Simple Triangle Rendering

- 3D samples using OpenGL

- Demo for Android

- ONNX/numpy operators

- Lightweight optical flow model

- Realtime object tracking models

- RISC-V Optimizations

- Dynamic CUDA support in DNN

- Multi-camera calibration part 2

- Improve DNN Vulkan Backend

- G-API: Implement custom stream sources in Python

- G-API: A complete Python tutorial

- G-API: Inference via OpenCV DNN

- Support Realsense camera by UVC

All work is in C++ unless otherwise noted.

- IDEA: GPT with OpenCV

- Description: Tune GPT on latest OpenCV; create examples/blog on how to use GPT to leverage DNN and other OpenCV functionality; create an OpenAI GPT API assuming we get such access off the waitlist.

- Expected Outcomes:

- Tuning GPT on OpenCV 4.7+ code and Github bases.

- Documentation and blog or YouTube videos of using GPT to leverage OpenCV functions and internals of OpenCV

- Assuming we get early access to the GPT API, create an API that has useful functionality to OpenCV and its applications.

- Potentially provide some DNN models that users can tune on their own data, with GPT knowing how to train such models and deploy them on given hardware such as OAK cameras, Mac, Windows, or Linux.

- Resources: OpenCV, Git Code, OpenAI

- Skills Required: Good software development skills, good knowledge and use of GPT and of OpenCV.

- Possible Mentors: Doug Lee, Gary Bradski

- Difficulty: Medium to Hard

- Duration: 200 hours

- IDEA: NeRFs

- Description: Create easy-to-use but accurate NeRF code that lets users capture NeRF models. Possibly allow accuracy boosts using Charuco boards in the scene.

- Expected Outcomes:

- NeRF code and application, well documented so that users can easily capture NeRF models

- Documentation and YouTube videos showing how to use it.

- Resources: OpenCV, Example NeRF Code

- Skills Required: Good software development skills, good knowledge and use of NeRF models.

- Possible Mentors: Doug Lee, Gary Bradski

- Difficulty: Medium to Hard

- Duration: 175 hours

- IDEA: FP16 and INT8 for DNN

- Description: Some modern CPUs include hardware support for FP16 arithmetic as well as special instructions for efficient INT8 convolution, which can be used to accelerate deep learning inference. OpenCV includes basic support for the FP16 data format, and there is a quite efficient implementation of FP16 and INT8 convolution in Ficus NN (https://github.com/vpisarev/ficus/tree/master/lib/NN), but there are no FP16 compute paths in the OpenCV deep learning module and INT8 convolution is quite slow. It's suggested to port FP16 convolution kernels (3x3 Winograd, general im2row convolution) and INT8 im2row convolution from Ficus NN to OpenCV DNN. FP16 convolution is used only optionally for FP32 models (we can call it a "low-precision mode"); the necessary conversion from FP32 to FP16 before the convolution and from FP16 to FP32 after it should be merged into the convolution loop (not done in a separate loop) to provide the best efficiency.

- Expected Outcomes:

- Series of patches for OpenCV DNN that add support for FP16 convolution and INT8 convolution into the inference pipeline, the actual operations and the graph engine.

- Accelerated implementations of QLinearConv, QLinearAdd, QuantizeLinear, DequantizeLinear and other crucial layers to be able to run quantized models

- Efficient support for QDQ models (https://onnxruntime.ai/docs/performance/quantization.html), where the combination of (dequantize + floating-point-op + quantize) operations is replaced, where possible, with dedicated operations (e.g. QLinearConv), and in other cases the quantize and dequantize operations are merged into the corresponding floating-point operations at buffer level in the same parallel loop (to improve cache utilization and reduce the number of parallel regions).

- Updated OpenCV DNN unit tests to test the performance and accuracy of FP16 compute paths and QDQ models.

- Skills Required: good software optimization skills, fluent C++ and ARM Assembly, basic understanding of deep learning inference engines

- Possible Mentors: Zihao Mu, Vadim Pisarevsky

- Duration: 175 hours
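The QDQ outcome above (replacing dequantize + floating-point-op + quantize with a dedicated operation) can be illustrated with a minimal pure-Python sketch. It assumes per-tensor uint8 quantization with the usual linear formulas and uses ReLU as the floating-point op. All function names are illustrative and are not OpenCV DNN API; a real implementation would be vectorized C++.

```python
def quantize_linear(values, scale, zero_point, qmin=0, qmax=255):
    """Quantize floats: q = clamp(round(x / scale) + zero_point, qmin, qmax)."""
    out = []
    for x in values:
        # Python's round() rounds halves to even, the rounding mode ONNX specifies.
        q = round(x / scale) + zero_point
        out.append(max(qmin, min(qmax, q)))
    return out


def dequantize_linear(qvalues, scale, zero_point):
    """Dequantize integers: x = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qvalues]


def relu_qdq_reference(qvalues, scale, zero_point):
    """Unfused path: dequantize, run the floating-point op, quantize again."""
    floats = dequantize_linear(qvalues, scale, zero_point)
    activated = [max(0.0, x) for x in floats]
    return quantize_linear(activated, scale, zero_point)


def relu_quantized(qvalues, zero_point):
    """Fused path (assumes equal input/output scale and zero point):
    ReLU on quantized data is a clamp at zero_point, so no float buffer is needed."""
    return [max(q, zero_point) for q in qvalues]


if __name__ == "__main__":
    data = list(range(256))
    # Both paths agree on every possible uint8 input.
    assert relu_quantized(data, 128) == relu_qdq_reference(data, 0.1, 128)
    print(quantize_linear([0.0, 1.0, -1.0, 100.0], 0.1, 128))
```

The fused variant touches each element once and stays in integer arithmetic, which is the kind of saving the project description is after.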

- IDEA: Point Cloud Compression

- Description: Implement algorithm(s) to compress point clouds and optionally compress meshes. In OpenCV 5.x there is a new 3D module that contains algorithms for 3D data processing. The point cloud is one of the fundamental representations of 3D data, and there is a growing number of point cloud processing functions, including I/O, RANSAC-based registration, plane fitting, etc. Point cloud compression is another desired functionality to add, where we throw away some points from the cloud while preserving the overall geometry that the point cloud implicitly describes.

- Expected Outcomes:

- Point cloud compression algorithm developed for OpenCV 3d module (need to follow OpenCV coding style)

- Unit tests and at least 1 example in C++ or Python that demonstrates the functionality

- Documentation/tutorial on how to use this functionality.

- (optional, but desired) Integration of OpenCV with Google's Draco library, which provides rich functionality for 3D data compression.

- Resources:

- Skills Required: Mastery plus experience coding in C/C++, good understanding of clustering algorithms and, more generally, 3D data processing algorithms.

- Possible Mentors: Rostislav Vasilikhin

- Duration: 175 hours
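As a rough illustration of "throwing away points while preserving the overall geometry", here is a minimal sketch of voxel-grid downsampling, where all points falling into the same cubic cell are replaced by their centroid. This is only one simple candidate technique chosen for illustration, not necessarily the algorithm the project expects, and `voxel_downsample` is a hypothetical name rather than an OpenCV function.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points sharing a cubic voxel with their centroid.

    points: iterable of (x, y, z) tuples; voxel_size: edge length of a voxel.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel coordinates; floor() keeps negative values consistent.
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        buckets[key].append((x, y, z))

    reduced = []
    for members in buckets.values():
        n = len(members)
        # zip(*members) groups all x, all y and all z values together.
        reduced.append(tuple(sum(axis) / n for axis in zip(*members)))
    return reduced


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.5, 0.0, 0.0)]
    print(voxel_downsample(cloud, 1.0))
```

A larger `voxel_size` gives a stronger reduction at the cost of geometric detail, which is the trade-off any lossy point cloud compression scheme has to expose.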

- IDEA: Simple Triangle Rendering

- Description: Some 3D algorithms require mesh rendering as an inner step, for example as feedback for 3D reconstruction. Depending on the algorithm type, a depth or a color rendering is required. This function can also be used as a simple debugging tool. Since light, shadows and correct texture rendering are separate huge problems, they are out of scope for this task.

- Expected Outcomes:

- A function that takes as input a set of points, a set of indices that form triangles and produces depth rendering as output

- Per-vertex color support is desirable. In that case a function should also take vertex colors as input and produce RGB output

- Optional: accelerated version using SIMD, OpenCL or OpenGL

- Optional: jacobians of input parameters as a base for neural rendering

- Resources:

- Triangle rasterization tutorial

- 3D module in OpenCV

- Skills Required: mastery plus experience coding in C++; basic skills of optimizing code using SIMD; basic knowledge of 3D math

- Possible Mentors: Rostislav Vasilikhin

- Duration: 175 hours
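The first expected outcome (points plus triangle indices in, depth image out) can be sketched as follows. This is a minimal pure-Python illustration, assuming the vertices are already projected to pixel coordinates with a depth value, and assuming depth is interpolated linearly in screen space (no perspective correction). The name `render_depth` and its signature are hypothetical.

```python
import math


def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p); the sign gives the side of edge ab."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def render_depth(vertices, triangles, width, height):
    """Rasterize triangles into a z-buffer.

    vertices: list of (x, y, z) in pixel coordinates; triangles: list of index triples.
    Returns a height x width list of lists; untouched pixels stay at math.inf.
    """
    depth = [[math.inf] * width for _ in range(height)]
    for i0, i1, i2 in triangles:
        v0, v1, v2 = vertices[i0], vertices[i1], vertices[i2]
        area = edge(v0, v1, v2)
        if area == 0:
            continue  # degenerate triangle, nothing to draw

        # Clip the triangle's bounding box to the image.
        xmin = max(0, math.floor(min(v0[0], v1[0], v2[0])))
        xmax = min(width - 1, math.ceil(max(v0[0], v1[0], v2[0])))
        ymin = max(0, math.floor(min(v0[1], v1[1], v2[1])))
        ymax = min(height - 1, math.ceil(max(v0[1], v1[1], v2[1])))

        for y in range(ymin, ymax + 1):
            for x in range(xmin, xmax + 1):
                p = (x + 0.5, y + 0.5)  # sample at the pixel center
                # Barycentric weights; dividing by area makes them winding-independent.
                w0 = edge(v1, v2, p) / area
                w1 = edge(v2, v0, p) / area
                w2 = edge(v0, v1, p) / area
                if w0 < 0 or w1 < 0 or w2 < 0:
                    continue  # pixel center lies outside the triangle
                z = w0 * v0[2] + w1 * v1[2] + w2 * v2[2]
                if z < depth[y][x]:  # keep the nearest surface
                    depth[y][x] = z
    return depth
```

Per-vertex color support would reuse the same barycentric weights to interpolate RGB values wherever the depth test passes.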

- IDEA: 3D samples using OpenGL

- Description: Two years ago we added a basic non-VTK-based 3D visualization API to OpenCV, along with a few corresponding examples (https://github.com/opencv/opencv/pull/20371). The functionality is really useful and the examples are cool, but there are some drawbacks. In particular, the proposed implementation on Linux is only compatible with GTK+ 2.x, not with newer versions of GTK+. Support for macOS is also lacking. The plan is to further polish this code and the examples and make them compatible with all major platforms.

- Expected Outcomes:

- Patches for core & highgui to improve compatibility of 3D visualization API with GTK+ 3.x, 4.x and with macOS.

- A few more examples to demonstrate the functionality in C++ and Python.

- Optionally, some new 3D visualization functionality

- Resources:

- OpenCV vis module tutorial

- Essential matrix demo. For example, it can be modified to include some 3D visualization, as shown here: https://youtu.be/ROGa2tutPHQ?t=539

- Skills Required: good experience with OpenGL and UI frameworks on Linux and macOS (GTK and Cocoa, respectively), basic knowledge of 3D vision algorithms, good C++/Python coding skills.

- Possible Mentors: Shiqi Yu, Alexander Smorkalov

- Difficulty: Medium to Hard

- IDEA: Demo for Android

- Description: Computer vision on mobile phones has been a very popular topic for many years. Several years ago we added support for mobile platforms (Android and iOS) to OpenCV. Now it's time to refresh and update the corresponding parts (build scripts, examples, tutorials). Let's use the formula: Modern Android + OpenCV + OpenCV Deep learning module + camera (maybe even use the depth sensor on phones that have it). It can be a really cool project that will show your skills and will be extremely helpful for many OpenCV users.

- Expected Outcomes:

- Demo app for Android with all the source code available, no binary blobs or proprietary components.

- The app should preferably be native (in C++).

- The app can use the camera via the native Android API or via the OpenCV API. The latter is preferable, but not necessary.

- The app must use OpenCV DNN module. It should run some deep net using OpenCV and visualize the results (e.g. draw rectangles around found objects, the fancier visualization — the better).

- The app should work in realtime on any reasonably fast phone. If it's slow, it's better to choose some lighter net or run it on a lower resolution.

- Preferably the app should not be tightly bound to a particular model. For example, if it's object detection demo, it should be possible to replace one model with another, not via UI, just replace the model file and put the new model name into a config file or into source code.

- There should be a short tutorial (a markdown file with the key code fragments and some screenshots) on how to build and run it.

- Some effort should be applied (see https://github.com/opencv/opencv/wiki/Compact-build-advice) to make the application compact. That was a separate request from various OpenCV users, and a compact mobile app would be the best example of how to do that.

- Skills Required: Mastery plus experience coding in C/C++ and/or Java, practical experience with developing apps for Android. At least basic understanding of computer vision and deep learning technologies, enough to run a deep net and make it run at realtime speed.

- Possible Mentors: Vadim Pisarevsky

- Duration: 175 or 350 hours, depending on the feature list

- IDEA: ONNX/numpy operators

- Description: Many modern ML frameworks are built in Python or provide a Python interface. It's a standard approach to use Python's numpy extension for ML APIs or at least to use numpy as a reference point to describe the semantics of tensor processing operations. In particular, ONNX, a very popular deep learning model representation standard, often refers to numpy in the description of ONNX operations. Even though OpenCV provides a number of functions (mostly in the core and dnn modules) to operate on multi-dimensional dense arrays, so far we cannot claim that we provide equivalent functionality with a similar API. The goal of this project is to fill the gap.

- Expected Outcomes:

- Extended/new functionality in the OpenCV core and dnn modules that matches a subset of numpy and covers most of the basic ONNX and TFLite operations. At minimum, FP32 should be supported. FP16/INT8 support is desirable but optional. In some cases the existing operations should be extended, e.g. add(), subtract() etc. should be extended to support numpy broadcast semantics.

- A set of unit tests to check the new/extended functionality. Probably, the easiest way to test it would be to use OpenCV Python bindings and develop the unit tests in Python where output from OpenCV will be compared with the output from numpy.

- A sample/tutorial to demonstrate this functionality. Probably, there can be a table with the list of numpy operations and equivalent OpenCV functions.

- Resources:

- ONNX Operators. Please note how often numpy is referred to.

- Broadcasting on ONNX/numpy

- Numpy User Guide

- Skills Required: Mastery plus experience coding in C/C++, some practical experience with Python and numpy. At least basic understanding of computer vision and deep learning technologies, enough to run a deep net and make it run at realtime speed.

- Possible Mentors: Egor Smirnov, Vadim Pisarevsky

- Duration: 175 hours
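The numpy broadcast semantics mentioned in the expected outcomes come down to a shape rule: shapes are aligned from the trailing dimension, and two dimensions are compatible when they are equal or one of them is 1. A minimal pure-Python sketch of that rule follows; `broadcast_shape` is an illustrative helper, not an existing OpenCV function.

```python
def broadcast_shape(*shapes):
    """Return the broadcast result shape for the given shapes, numpy-style.

    Raises ValueError when the shapes cannot be broadcast together.
    """
    if not shapes:
        raise ValueError("at least one shape is required")

    ndim = max(len(shape) for shape in shapes)
    result = []
    for axis in range(ndim):
        dim = 1
        for shape in shapes:
            # Align shapes at the trailing dimension; missing leading dims count as 1.
            idx = len(shape) - ndim + axis
            d = shape[idx] if idx >= 0 else 1
            if d == 1:
                continue  # size-1 dimensions stretch to match anything
            if dim != 1 and dim != d:
                raise ValueError(f"shapes {shapes} are not broadcastable")
            dim = d
        result.append(dim)
    return tuple(result)


if __name__ == "__main__":
    print(broadcast_shape((2, 3), (3,)))
    print(broadcast_shape((4, 1), (1, 5)))
```

An extended `add()` would first compute the output shape this way and then read each input with a stride of zero along its stretched dimensions.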

- IDEA: Lightweight optical flow model

- Description: There is a growing OpenCV model zoo, where we focus on practical, efficient models for various computer vision tasks that can often replace the traditional algorithms, not just in terms of quality but also with quite competitive speed. There have already been some efforts in this direction, e.g. https://arxiv.org/abs/1903.07414. The goal of this project is to find several candidates for a lightweight optical flow model for the OpenCV model zoo, compare them for quality and speed, select the best candidate or candidates and add the corresponding model, as well as examples in Python on how to use it. The examples should use OpenCV DNN for the inference.

- Expected Outcomes:

- One or more lightweight optical flow models trained (or borrowed if the license is appropriate), quantized if accuracy does not drop too much, and submitted to OpenCV Model Zoo along with scripts for quantization and evaluation.

- Necessary patches, if any, for OpenCV DNN to support the provided lightweight optical flow models.

- Examples in C++ and Python that demonstrate the use of provided models.

- Skills Required: Good Python and C++ coding skills, training and using deep learning, basic knowledge of computer vision

- Possible Mentors: Yuantao Feng, Shiqi Yu

- Duration: 175 hours
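Comparing candidate models for quality needs a metric. The project text does not name one, so as an assumption this sketch uses the average endpoint error (the mean Euclidean distance between predicted and ground-truth flow vectors), which is a common choice for optical flow. The function name is illustrative.

```python
import math


def average_endpoint_error(flow_pred, flow_gt):
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    Both arguments are equally long sequences of (u, v) displacement pairs,
    one pair per pixel.
    """
    if len(flow_pred) != len(flow_gt):
        raise ValueError("flow fields must have the same number of vectors")
    if not flow_pred:
        raise ValueError("flow fields must not be empty")

    total = 0.0
    for (u_p, v_p), (u_g, v_g) in zip(flow_pred, flow_gt):
        total += math.hypot(u_p - u_g, v_p - v_g)
    return total / len(flow_pred)


if __name__ == "__main__":
    predicted = [(1.0, 0.0), (0.0, 0.0)]
    ground_truth = [(0.0, 0.0), (3.0, 4.0)]
    print(average_endpoint_error(predicted, ground_truth))
```

An evaluation script would report this value next to the measured inference time for each candidate model.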

- IDEA: Realtime object tracking models

- Description: Deep learning techniques have impressively improved the performance of object tracking algorithms. Such algorithms have been deployed on some drones and robot dogs, with which people can record videos from a distance without worrying about losing the camera. Computation capacity and power supply can be critical on edge devices such as drones and robot dogs, so object tracking models should be real-time and power-efficient enough to run on them.

- Expected Outcomes:

- One or more lightweight object tracking models trained (or borrowed if the license is appropriate), quantized if accuracy does not drop too much, and submitted to OpenCV Model Zoo along with scripts for quantization and evaluation.

- Necessary patches, if any, for OpenCV DNN to support the provided lightweight object tracking models.

- Examples in C++ and Python that demonstrate the use of provided models.

- Resources:

- Skills Required: Good Python and C++ coding skills, training and using deep learning, basic knowledge of computer vision

- Possible Mentors: Zihao Mu, Shiqi Yu

- Duration: 175 hours

- IDEA: RISC-V Optimizations

- Description: RISC-V is still one of our main target platforms. During the past couple of years we brought in some RISC-V optimizations based on the RISC-V Vector extension by adding another backend to OpenCV universal intrinsics. The implementation works correctly and the latest implementation is also quite efficient, but it introduced some API changes that make it somewhat incompatible with the majority of vectorized loops in OpenCV. For example, the v_add() function should be used instead of the overloaded "+" operator, and the number of vector lanes should be captured into a local variable, e.g. using `VTraits<v_float32>::nlanes` instead of using the already efficient compile-time constant `v_float32::nlanes`. The goal of this project is to convert many of the vectorized loops in the core, imgproc, video and dnn modules to a form that is compatible with vector extensions and yet stays compatible with traditional fixed-size SIMD extensions like SSE2, AVX2, NEON etc.

- Expected Outcomes:

- A series of patches for core, imgproc, video and dnn that will make the code compatible with the new RISC-V extensions without losing compatibility with existing SIMD extensions. Since this is a lot of tedious work if done manually, we expect an automated or semi-automated approach to be developed and used.

- Resources:

- Skills Required: mastery plus experience coding in C++; good skills of optimizing code using SIMD.

- Possible Mentors: Mingjie Xing, Elena Gvozdeva, Maxim Shabunin

- Difficulty: Hard

- Duration: 175 hours


- IDEA: Dynamic CUDA support in DNN

- Description: The OpenCV DNN module includes several backends for efficient inference on various platforms. Some of the backends are heavy and bring in a lot of dependencies, so it makes sense to make the backends dynamic. Recently, we did this with the OpenVINO backend: https://github.com/opencv/opencv/pull/21745. The goal of this project is to make the CUDA backend of OpenCV DNN dynamic as well. Once it's implemented, we can have a single set of OpenCV binaries and then add the necessary plugin (also in binary form) to accelerate inference on NVIDIA GPUs without recompiling OpenCV.

- Expected Outcomes:

- A series of patches for the dnn and maybe core modules to build the OpenCV DNN CUDA plugin as a separate binary that can be used by OpenCV DNN. In this case OpenCV itself should not have any dependency on the CUDA SDK or runtime; the plugin should encapsulate it. It is fine if the user-supplied tensors (cv::Mat) are automatically uploaded to GPU memory by the engine (cv::dnn::Net) before the inference and the output tensors are downloaded from GPU memory after the inference in such a case.

- Resources:

- Skills Required: mastery plus experience coding in C++; good practical experience in CUDA. Acquaintance with deep learning is desirable but not necessary, since the project is mostly about software engineering, not about ML algorithms or their optimization.

- Possible Mentors: Alexander Smorkalov

- Difficulty: Hard

- Duration: 175 hours

- IDEA: Multi-camera calibration part 2

- Description: During GSoC 2022 a cool new multi-camera calibration algorithm was developed: https://github.com/opencv/opencv/pull/22363. This year we would like to extend this work with more test cases, tune the accuracy and build a higher-level, user-friendly tool (based on the script from the tutorial) to perform multi-camera calibration.

- Expected Outcomes:

- A series of patches with more unit tests and bug fixes for the multi-camera calibration algorithm

- Tool with convenient API that will be more or less comparable and compatible with Kalibr tool (https://github.com/ethz-asl/kalibr)

- New/improved documentation on how to calibrate cameras

- Skills Required: Mastery of C++ and Python, mathematical knowledge of camera calibration, ability to code up mathematical models

- Difficulty: Difficult

- Possible Mentors: Jean-Yves Bouguet

- Duration: 175 hours

- IDEA: Improve DNN Vulkan Backend

- Description: Vulkan is a low-overhead, cross-platform API for 3D graphics and computing. It is intended to offer higher performance and more efficient CPU and GPU usage. GPU chips are already in mobile phones, PCs, and even some development boards. In order for DNN models to benefit from the GPU, we will use Vulkan to access it. OpenCV has in fact supported a Vulkan backend since 2018 (PR 12703). However, because too few layers are supported on Vulkan and the necessary speed optimizations are lacking, the current Vulkan backend is very slow. The goal of this project is to let more layers support Vulkan and to accelerate layers that are already supported.

- Expected Outcomes:

- Improve/Support Vulkan layer in OpenCV DNN modules. For now, we can just focus on FP32 precision.

- A list of higher priority layers:

- Fully support NaryEltwise Layer, the key is broadcast mechanism.

- Some activation layers like Mish, Ceil, Celu, Selu, Sigmoid, HardSigmoid, etc.

- Fully Connected/GEMM layer

- Reshape layer

- DeConvolution layer

- Flatten layer

- BatchNorm layer

- Resources:

- Vulkan implementation of NCNN

- Vulkan implementation of MNN

- Vulkan implementation of Tengine

- Skills Required: Mastery of C++; good practical experience in Vulkan; at least a basic understanding of computer vision and deep learning technologies.

- Difficulty: Difficult

- Possible Mentors: Zihao Mu, Shiqi Yu

- Duration: 175 hours
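When adding activation layers to a GPU backend, a scalar reference is handy for checking shader output. The sketch below gives pure-Python reference formulas for several of the activations listed above, assuming the ONNX operator definitions and their default parameters (and reading "Mesh" in the list as the Mish activation). Function names are illustrative, not OpenCV API.

```python
import math


def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def hard_sigmoid(x, alpha=0.2, beta=0.5):
    """Piecewise-linear approximation: clamp(alpha * x + beta, 0, 1)."""
    return max(0.0, min(1.0, alpha * x + beta))


def mish(x):
    """x * tanh(softplus(x)), with a numerically stable softplus."""
    softplus = max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return x * math.tanh(softplus)


def celu(x, alpha=1.0):
    """max(0, x) + min(0, alpha * (exp(x / alpha) - 1)), for alpha > 0."""
    # For x > 0 the second term is zero, so only evaluate exp on min(x, 0).
    return max(0.0, x) + alpha * math.expm1(min(x, 0.0) / alpha)


def selu(x, alpha=1.6732631921768188, gamma=1.0507010221481323):
    """gamma * x for x > 0, gamma * alpha * (exp(x) - 1) otherwise."""
    if x > 0:
        return gamma * x
    return gamma * alpha * math.expm1(x)


if __name__ == "__main__":
    for name, fn in [("sigmoid", sigmoid), ("hard_sigmoid", hard_sigmoid),
                     ("mish", mish), ("celu", celu), ("selu", selu)]:
        print(name, [round(fn(v), 4) for v in (-2.0, 0.0, 2.0)])
```

A unit test for a Vulkan layer could run the same inputs through the backend and compare against these values within a small tolerance.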

- IDEA: G-API: Implement custom stream sources in Python

- Description: G-API is a graph execution framework for OpenCV. Among many other features, it supports a pipelined execution model for efficient video stream processing. Its C++ interface provides a method to implement a custom stream source to feed a compiled pipeline with data, and there's a number of those available already (e.g. atop of `cv::VideoCapture` and GStreamer framework). In its Python interface, however, there's no way to implement a custom source for the pipeline. Given a large number of available Python libraries, it would be beneficial for OpenCV users to glue their own sources (or algorithms) with G-API in Python. The design of this feature is up to the student, though we can give some useful hints (see "expected outcomes").

- Expected Outcomes:

- Introduce a proxy class for Python-parametrizable source in C++ (as G-API remains to be a native C++ framework, there still must be a C++ part in the integration).

- Expose & decorate this proxy class in Python bindings.

- Cover with tests and (ideally) a tutorial.

- Resources:

- Skills Required: Good understanding of C++ and Python; ideally experience with writing apps atop of GStreamer and/or FFMPEG.

- Possible Mentors: Anatoliy Talamanov, Dmitry Matveev

- Difficulty: Easy
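To make the idea of a Python-defined source concrete, here is a purely hypothetical sketch of what the user-facing side could look like: a plain Python object with a `pull()` method that a proxy would call for each frame. None of these names exist in G-API today; `CounterSource`, `pull()` and `run_stream()` are assumptions for illustration, and `run_stream()` merely stands in for a compiled streaming pipeline.

```python
class CounterSource:
    """Hypothetical user-defined source producing a finite stream of integers."""

    def __init__(self, count):
        self._count = count
        self._next = 0

    def pull(self):
        """Return (True, frame) while data is available, (False, None) at end of stream."""
        if self._next >= self._count:
            return False, None
        frame = self._next
        self._next += 1
        return True, frame


def run_stream(source, process):
    """Stand-in for a streaming pipeline: pull frames until the source is exhausted."""
    results = []
    while True:
        ok, frame = source.pull()
        if not ok:
            break
        results.append(process(frame))
    return results


if __name__ == "__main__":
    print(run_stream(CounterSource(3), lambda frame: frame * 2))
```

In a real design, the C++ proxy class from the expected outcomes would hold a reference to such an object and forward each pull request to it, converting the returned Python data to native G-API types.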


- IDEA: G-API: A complete Python tutorial

- Description: G-API is a graph execution framework for OpenCV. Originally it was a C++ framework, but back in ~2020-21 it was also exposed in OpenCV bindings for Python. In addition to core functionality and basic functions, some extra capabilities like declaring new operations, writing kernels in Python directly, and managing stateful kernels were added in Python G-API. It all sums into a great potential which needs to be properly exposed. The goal of this project is to develop a complete tutorial on G-API in Python.

- Expected Outcomes:

- A new set of tutorial chapters is landed in the OpenCV documentation (namely, tutorials, ideally structured as well as this one).

- Cover the G-API aspects from basic (defining and running graphs) to advanced (defining own operations, kernels, + `numpy` inter-op) topics.

- Propose a way to utilize/reuse the Jupyter notebook format for the tutorials; ideally write tutorials in that format and extend OpenCV doxygen to integrate those.

- Resources:

- Skills Required: Python, Jupyter, Doxygen, CMake, English, presentation and storytelling skills.

- Possible Mentors: Dmitry Matveev, Anatoliy Talamanov

- Difficulty: Medium

- IDEA: G-API: Inference via OpenCV DNN

- Description: G-API is a graph execution framework for OpenCV. G-API allows building and running complete application pipelines including input (camera/decode), pre-processing, DL inference, post-processing, image processing, and other CV operations. Historically, there were two full-featured inference backends in G-API: OpenVINO™ Toolkit and Microsoft® ONNX Runtime. OpenCV's own DNN module-based inference backend was also started, but because some functionality was missing (required by G-API at its graph compilation stage, when no data is present), that development was discontinued. Now there is a great chance to contribute the missing functionality to the OpenCV DNN module and complete the DNN backend for G-API.

- Expected Outcomes:

- Missing functionality is identified and contributed into OpenCV DNN module;

- A new DNN-based inference backend is added to G-API, supporting the main G-API operations for inference: `infer`, `infer-roi`, `infer-list`, `infer2`.

- Respective tests and a sample (using models from different frameworks) are implemented.

- Ideally: Python G-API is extended with DNN module for inference.

- Resources:

- One of the most recent PRs where G-API's `infer` interface has been implemented (for OpenCV AI Kit): #21504.

- Skills Required: Advanced C++, good knowledge of modern inference frameworks and runtimes. Experience with OpenCV DNN is a plus. Python is a plus.

- Possible Mentors: Dmitry Matveev

- Difficulty: Hard

- IDEA: Support Realsense camera by UVC

- Description: The RealSense camera is a depth camera made of Vision Processors, Depth and Tracking Modules. Currently, OpenCV can support RealSense cameras by calling the official RealSense SDK, but users need to rebuild OpenCV with the SDK from source code for this to work. This project is intended to let OpenCV support RealSense cameras via the UVC protocol, so that users can get the depth stream directly through the OpenCV binary release without compiling from scratch.

- Expected Outcomes:

- OpenCV can successfully read Realsense's depth stream through UVC.

- At least one system is supported: Windows, macOS or Ubuntu.

- Resources:

- Orbbec supports depth cameras through the UVC protocol.

- cmt-uvc-realsense

- Skills Required: Advanced C++; basic knowledge of UVC protocol and opencv/videoio framework. A realsense depth camera is required for debugging.

- Possible Mentors: < TBD >

- Difficulty: Medium

1. #### _IDEA:_ <Descriptive Title>

* ***Description:*** 3-7 sentences describing the task

* ***Expected Outcomes:***

* < Short bullet list describing what is to be accomplished >

* <i.e. create a new module called "bla bla">

* < Has method to accomplish X >

* <...>

* ***Resources:***

* [For example a paper citation](https://arxiv.org/pdf/1802.08091.pdf)

* [For example an existing feature request](https://github.com/opencv/opencv/issues/11013)

* [Possibly an existing related module](https://github.com/opencv/opencv_contrib/tree/master/modules/optflow) that includes some new optical flow algorithms.

* ***Skills Required:*** < for example mastery plus experience coding in C++, college course work in vision that covers optical flow, python. Best if you have also worked with deep neural networks. >

* ***Possible Mentors:*** < your name goes here >

* ***Difficulty:*** <Easy, Medium, Hard>

-

-

- Use the OpenCV How to Contribute and Aruco module in opencv_contrib as a guide.

- Add unit tests described here, see also the Aruco test example

- Add a tutorial, and sample code

- see the Aruco tutorials and how they look on the web.

- See the Aruco samples

- Make a short video showing off your algorithm and post it to Youtube. Here's an Example.

-

The process is described at GSoC home page

- Contributors will be paid only if:

-

Phase 1:

- You must generate a pull request

- That builds

- Has at least stubbed out (place holder functions such as just displaying an image) functionality

- With OpenCV appropriate Doxygen documentation (example tutorial)

- Includes What the function or net is, what the function or net is used for

- Has at least stubbed out unit test

- Has a stubbed out example/tutorial of use that builds

- See the contribution guide

- and the coding style guide

- the line_descriptor is a good example of contribution


-

Phase 2:

- You must generate a pull request

- That builds

- Has all or most of the planned functionality (but still usable without those missing parts)

- With OpenCV appropriate Doxygen documentation

- Includes What the function or net is, what the function or net is used for

- Has some unit tests

- Has a tutorial/sample of how to use the function or net and why you'd want to use it.

- Optionally, but highly desirable: create a (short! 30sec-1min) Movie (preferably on Youtube, but any movie) that demonstrates your project. We will use it to create the final video:


-

Extended period:

- TBD

-

Phase 1:

- Contact us, preferably in February or early March, on the opencv-gsoc googlegroups mailing list above and ask to be a mentor (or we will ask you in some known cases)

- If we accept you, we will post a request from the Google Summer of Code OpenCV project site asking you to join.

- You must accept the request and you are a mentor!

- You will also need to get on:

- You then:

- Look through the ideas above, choose one you'd like to mentor or create your own and post it for discussion on the mentor list.

- Go to the opencv-gsoc googlegroups mailing list above and look through the project proposals and discussions. Discuss the ideas you've chosen.

- Find likely contributors, ask them to apply to your project(s)

- You will get a list of contributors who have applied to your project. Go through them and select a contributor. Rejecting them all if none suits, and then either joining another project as a co-mentor or sitting out this year, are acceptable outcomes.

- Make sure your contributors officially apply through the Google Summer of Code site prior to the deadline, as indicated by the Contributor Application Period in the timeline

- Then, when we get a slot allocation from Google, the administrators "spend" the slots in order of priority influenced by whether there's a capable mentor or not for each topic.

- Contributors must finally actually accept to do that project (some sign up for multiple organizations and then choose)

- Get to work!

If you are accepted as a mentor and you find a suitable contributor and we give you a slot and the contributor signs up for it, then you are an actual mentor! Otherwise you are not a mentor and have no other obligations.

- Thank you for trying.

- You may contact other mentors and co-mentor a project.

You get paid a modest stipend over the summer to mentor, typically $500 minus an org fee of 6%.

Several mentors donate their salary, earning ever better positions in heaven when that comes.

Ankit Sachan

Anatoliy Talamanov

Clément Pinard

Davis King

Dmitry Kurtaev

Dmitry Matveev

Edgar Riba

Gholamreza Amayeh

Grace Vesom

Jiri Hörner

João Cartucho

Justin Shenk

Michael Tetelman

Ningxin Hu

Rostislav Vasilikhin

Satya Mallick

Stefano Fabri

Steven Puttemans

Sunita Nayak

Vikas Gupta

Vincent Rabaud

Vitaly Tuzov

Vladimir Tyan

Yida Wang

Jia Wu

Yuantao Feng

Zihao Mu

Gary Bradski

Vadim Pisarevsky

Shiqi Yu

© Copyright 2024, OpenCV team
