Add LOBPCG solver for large symmetric positive definite eigenproblems #184

|

Please let me know if you need any advice on LOBPCG implementation tricks. |

|

Yes, it is always a bit difficult to re-implement something from code and understand the reasoning behind it. I currently have some free time, so I decided to implement LOBPCG for manifold learning. I brushed up on some numerics classes covering the conjugate gradient and Rayleigh-Ritz methods.

Thanks for your help! |

|

@bytesnake Thanks for your efforts! Please see my answers below:

Cf. https://scikit-learn.org/stable/modules/generated/sklearn.manifold.spectral_embedding.html

Without the "conjugated" matrix

Two reasons:

Manually, after a large battery of tests, checking for failures. "Explicit" is more stable, but slower. 'float32' is less stable than 'float64', so it requires switching to "explicit" more aggressively. are more on the safe and slower side. |

I already looked into the code. For now I am trying to keep the code as simple as possible, though support for the Lanczos method through

Sorry I wasn't very clear in my comment. I expressed

Thank you for clarifying that.

Okay, I will just adopt these. The second case happens when the preconditioner operates in 32-bit and the "stiffness matrix" in 64-bit. |

|

@bytesnake you might also want to look at the LOBPCG C code for inspiration: https://github.com/lobpcg/blopex |

|

I wrote a small benchmark this morning, comparing |

|

@termoshtt please let me know when this is ready to merge |

|

Thanks a lot, and sorry for the late response :< My concern is about Is it possible to replace the ndarray-rand part using the ndarray-linalg::generate::random_* functions? |

|

Yes of course, the |

|

Pushed a new commit which removes the dependency on |

|

I put a little advertisement at Please let me know if it is OK and ping me with your LinkedIn name if you want me to credit you there. |

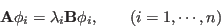

This PR ports the LOBPCG algorithm from SciPy to Rust. The algorithm is useful for symmetric eigenproblems where only a few eigenvalues are needed (for example, multidimensional scaling of Gaussian kernels). Solves issue #160.
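To give a rough feel for the idea, here is a minimal sketch of a single-vector relative of LOBPCG. It is not the code from this PR and does not use ndarray-linalg. Each step applies Rayleigh-Ritz on the two-dimensional subspace spanned by the current iterate and its residual. It assumes no preconditioner, a block size of one, and it omits the previous search direction that full LOBPCG adds to the subspace. The names `dot`, `laplacian_matvec` and `smallest_eigenpair` are hypothetical and exist only for this illustration.

```rust
/// Dot product of two equally sized slices.
fn dot(a: &[f64], b: &[f64]) -> f64 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// Matrix-free product with the 1-D Laplacian tridiag(-1, 2, -1),
/// used here as a small symmetric positive definite test operator.
fn laplacian_matvec(x: &[f64]) -> Vec<f64> {
    let n = x.len();
    (0..n)
        .map(|i| {
            let left = if i > 0 { x[i - 1] } else { 0.0 };
            let right = if i + 1 < n { x[i + 1] } else { 0.0 };
            2.0 * x[i] - left - right
        })
        .collect()
}

/// Approximates the smallest eigenpair of a symmetric operator by repeated
/// Rayleigh-Ritz on span{x, r}, where r is the current residual.
fn smallest_eigenpair<F>(matvec: F, mut x: Vec<f64>, tol: f64, max_iter: usize) -> (f64, Vec<f64>)
where
    F: Fn(&[f64]) -> Vec<f64>,
{
    // Normalize the starting vector.
    let norm = dot(&x, &x).sqrt();
    x.iter_mut().for_each(|v| *v /= norm);
    let mut lambda = 0.0;
    for _ in 0..max_iter {
        let ax = matvec(&x);
        lambda = dot(&x, &ax); // Rayleigh quotient for unit-norm x
        // Residual r = A x - lambda x; orthogonal to x for unit-norm x.
        let r: Vec<f64> = ax.iter().zip(&x).map(|(a, v)| a - lambda * v).collect();
        let b = dot(&r, &r).sqrt();
        if b < tol {
            break;
        }
        let w: Vec<f64> = r.iter().map(|v| v / b).collect();
        let c = dot(&w, &matvec(&w));
        // Projected 2x2 problem [[lambda, b], [b, c]]; take its smaller eigenvalue.
        let mu = 0.5 * (lambda + c) - (0.25 * (lambda - c).powi(2) + b * b).sqrt();
        // The matching eigenvector in the basis {x, w} is (b, mu - lambda).
        let (v1, v2) = (b, mu - lambda);
        let scale = (v1 * v1 + v2 * v2).sqrt();
        x = x.iter().zip(&w).map(|(xi, wi)| (v1 * xi + v2 * wi) / scale).collect();
    }
    (lambda, x)
}

fn main() {
    let n = 10;
    let (lambda, _x) = smallest_eigenpair(laplacian_matvec, vec![1.0; n], 1e-8, 10_000);
    // Known smallest eigenvalue of the n x n 1-D Laplacian.
    let exact = 2.0 - 2.0 * (std::f64::consts::PI / (n as f64 + 1.0)).cos();
    println!("computed {lambda:.8}, exact {exact:.8}");
}
```

The operator is passed as a closure so that only matrix-vector products are needed, which is what makes this family of methods attractive for large problems. A block version would replace the vectors by tall matrices and the closed-form 2x2 solve by a small dense eigensolver.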

I did not implement the generalized eigenproblem for a matrix B different from the identity, as it is an uncommon use case (at least in machine learning), but if required the modification should be minor.

It also adds access to the functions ssygv, dsygv, zhegv, chegv for the generalized eigenvalue problem with an additional mass matrix B. The traits are implemented for tuples of A and B, so you can use it like this

Remaining issues:

Y lobpcg.rs file and add examples

Example: