Rewrite BiLock lock/unlock to be allocation free

#2384

base: master


Thanks! It seems the previous attempt had a performance issue, so I'm curious whether this implementation fixes it.


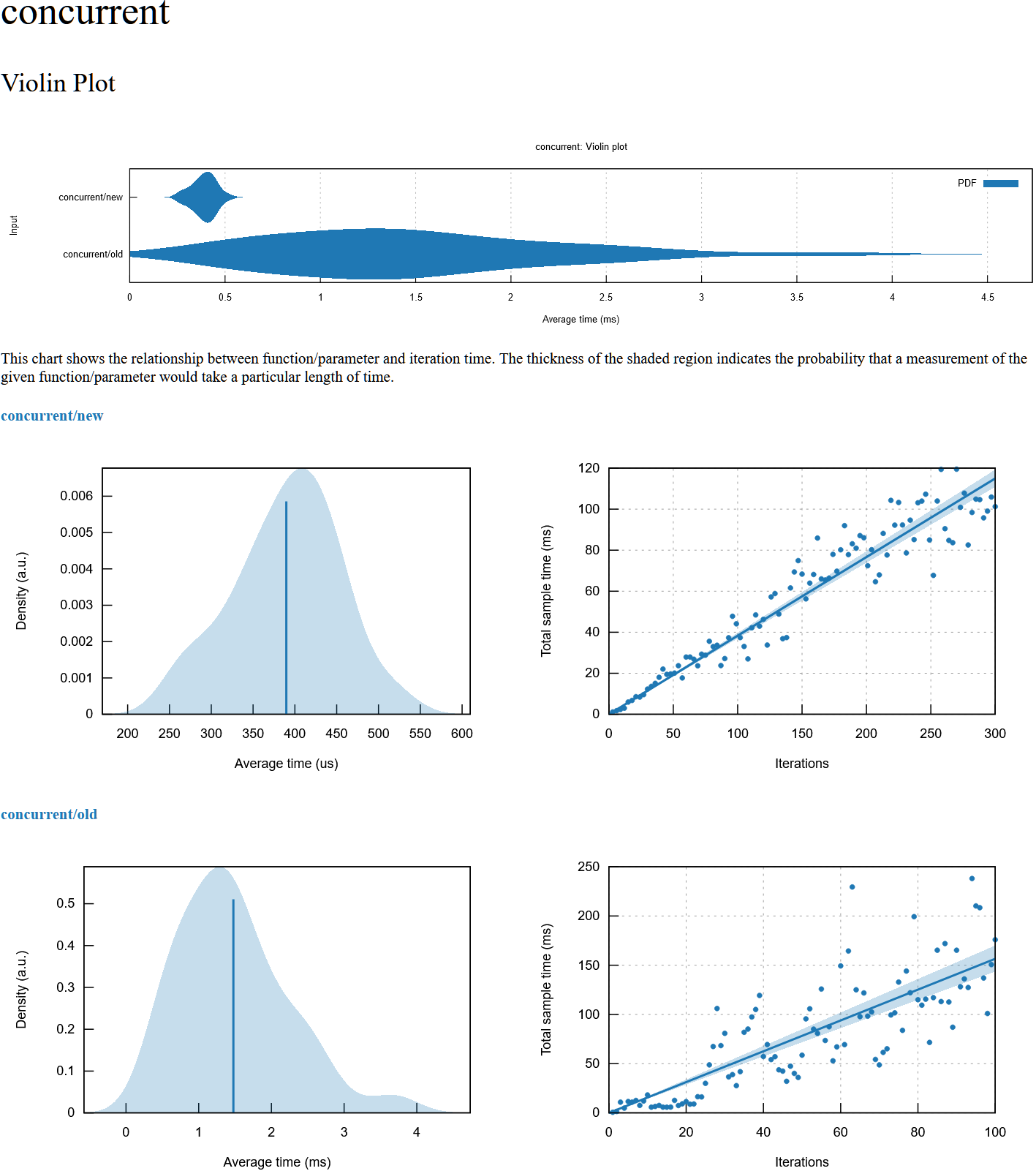

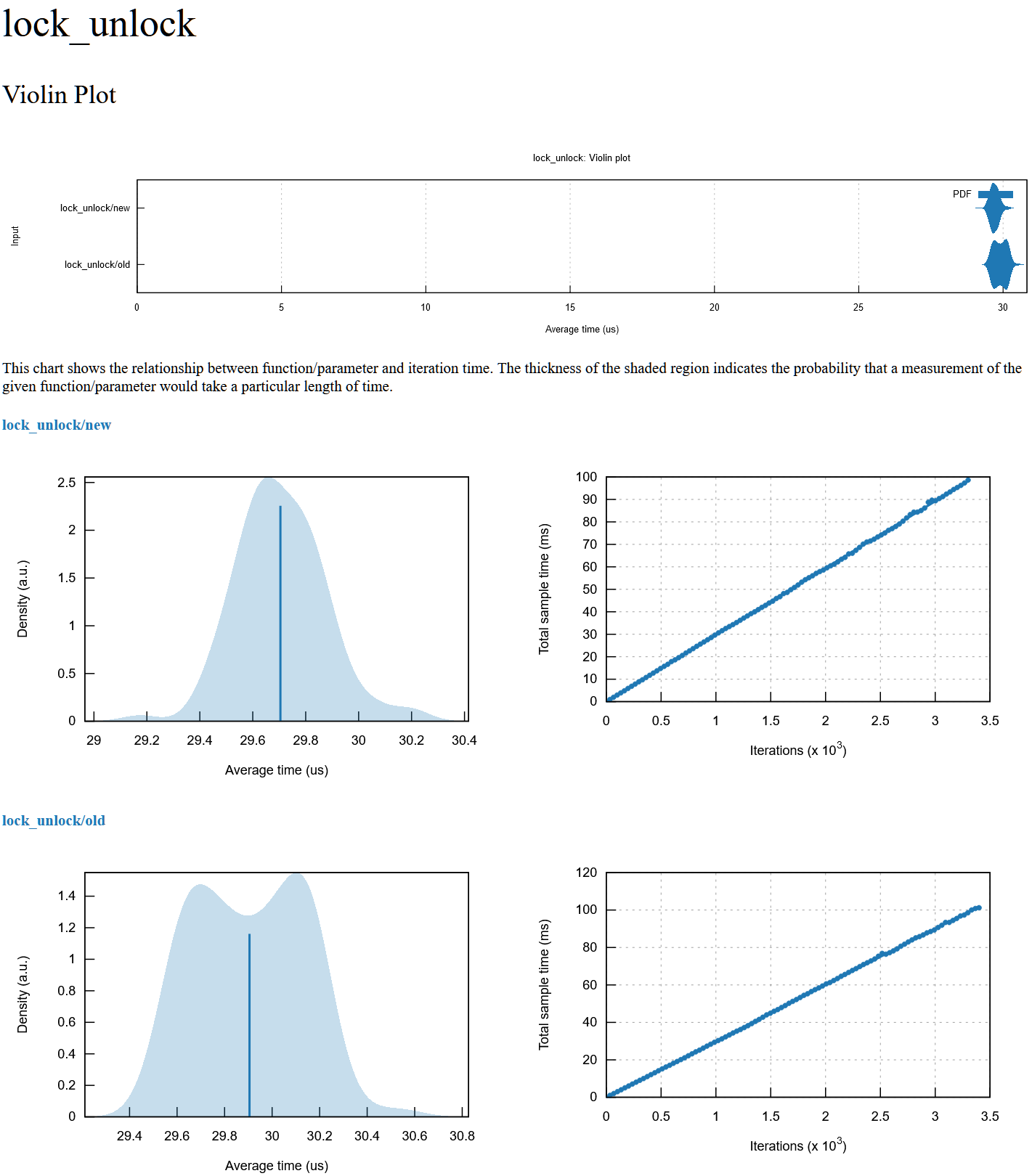

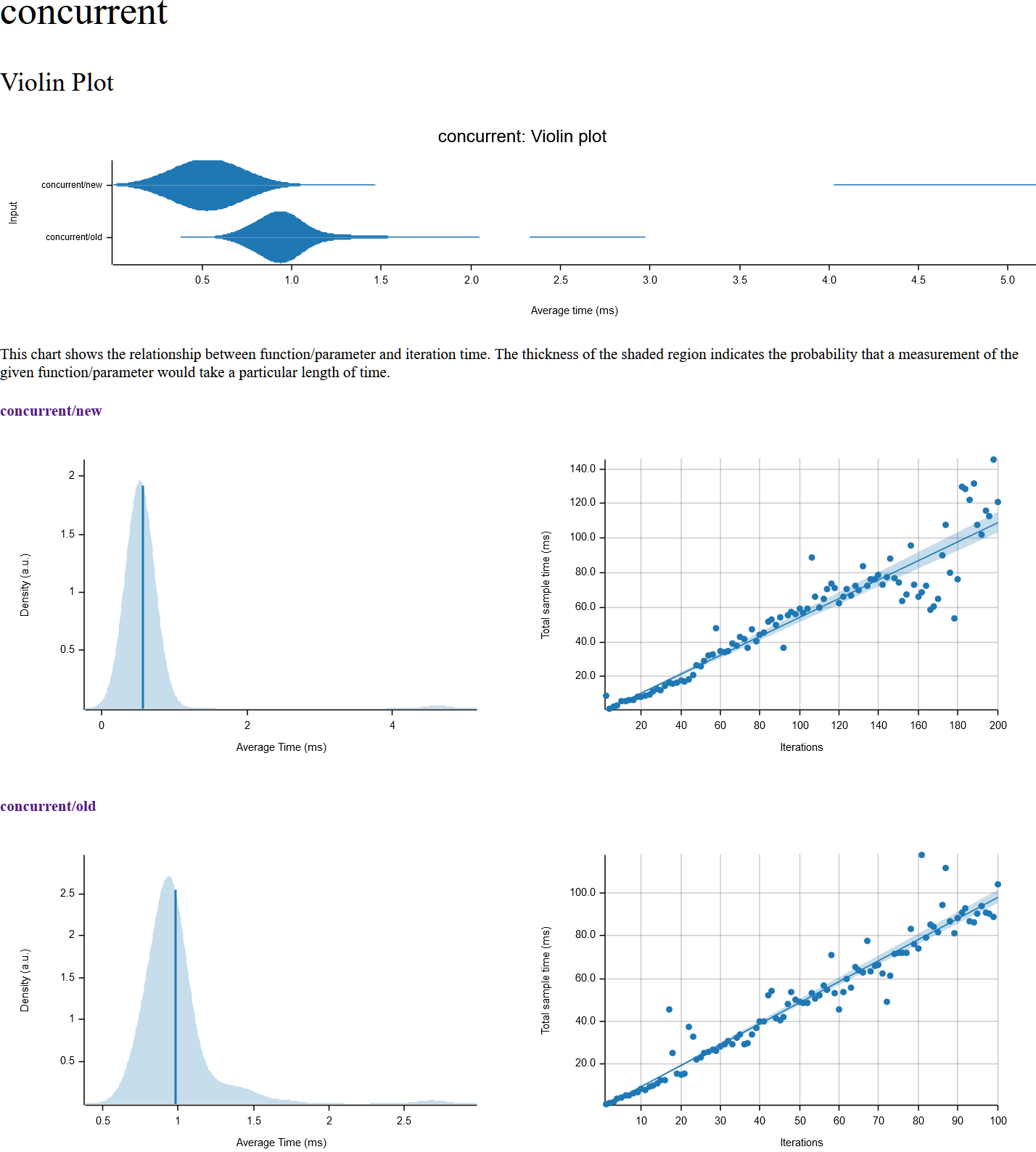

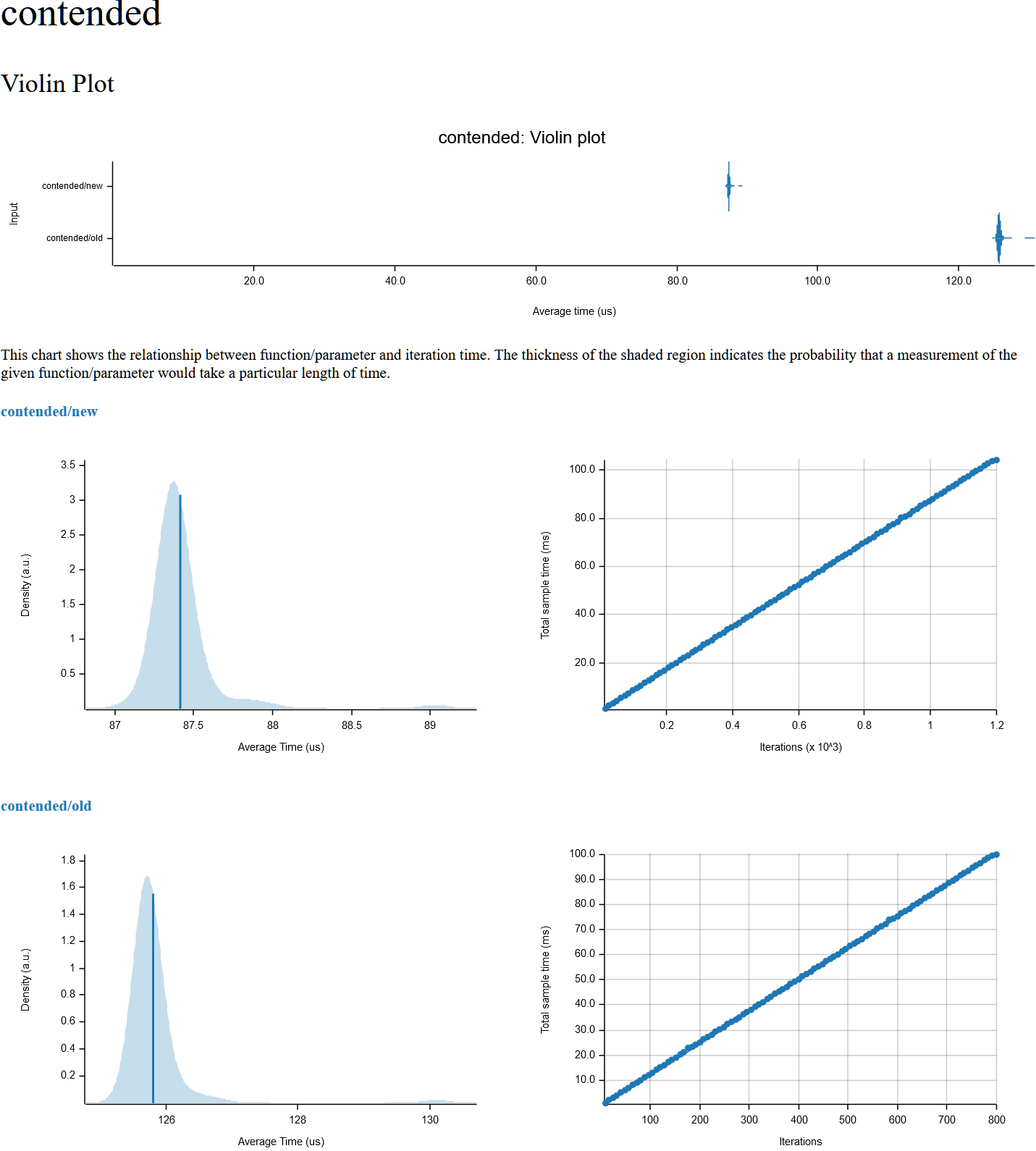

Sure, here are some benchmarks with criterion on my Ryzen 2700X desktop. I also ran some preliminary benchmarks on my ARM phone with similar results, although with a slight regression (40 µs -> 50 µs) on `lock_unlock` for some reason. Additionally, with the old implementation the contended benchmark was leaking memory like crazy on ARM, allocating over 8 GB during the ~5 s benchmark run.

- Add concurrent bilock bench from rust-lang#606
- Add bilock exclusion test
- Run rustfmt on bilock.rs



FWIW, results on my MacBook Pro (Intel Core i7-9750H, macOS v11.2.3), old (2be999d) vs. new (37c66f6, 3208bb5, 08707e3, be2242e, aa5f129, c8788cd):

[benchmark result tables not preserved]

Both 3208bb5 and be2242e look good on my machine (I tend to prefer 3208bb5, which is compatible with ThreadSanitizer).


Any progress here? I would love to see the `bilock` feature stop requiring nightly.

Inspired by a previous attempt to remove allocation (#606), this version uses three tokens to synchronize access to the locked value and the waker. These tokens are swapped between the inner struct of the lock and the bilock halves. The tokens are initialized with the LOCK token held by the inner struct, the WAKE token held by one bilock half, and the NULL token held by the other bilock half.
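As a minimal sketch of the token bookkeeping, each token can be encoded as a small integer and every hand-off done with one atomic `swap`. The `u8` encoding, `Inner`, `Half`, `new_tokens` and `swap_with` are hypothetical names chosen for illustration, not the PR's code, and `AcqRel` is an assumed ordering. Because a swap only moves tokens around, the three holders always own exactly one LOCK, one WAKE and one NULL between them:

```rust
use std::sync::atomic::{AtomicU8, Ordering};

// Hypothetical encoding of the three tokens; the PR text does not give values.
const NULL: u8 = 0;
const WAKE: u8 = 1;
const LOCK: u8 = 2;

/// Shared part of the lock: holds exactly one token at any time.
struct Inner {
    token: AtomicU8,
}

/// One bilock half: also holds exactly one token at any time.
struct Half {
    token: u8,
}

/// Initial distribution: inner = LOCK, one half = WAKE, the other = NULL.
fn new_tokens() -> (Inner, Half, Half) {
    (
        Inner { token: AtomicU8::new(LOCK) },
        Half { token: WAKE },
        Half { token: NULL },
    )
}

impl Half {
    /// Trade our token for the one in `inner`. A swap never creates or
    /// destroys a token, so the holders always own a permutation of them.
    fn swap_with(&mut self, inner: &Inner) {
        self.token = inner.token.swap(self.token, Ordering::AcqRel);
    }
}

fn main() {
    let (inner, mut a, mut b) = new_tokens();
    a.swap_with(&inner); // a gives WAKE and receives LOCK
    b.swap_with(&inner); // b gives NULL and receives WAKE
    let mut all = [inner.token.load(Ordering::Acquire), a.token, b.token];
    all.sort();
    assert_eq!(all, [NULL, WAKE, LOCK]); // still exactly one of each token
}
```

Mutual exclusion then falls out of the encoding: whoever holds the single LOCK token may touch the value, and whoever holds the single WAKE token may touch the waker slot.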

To poll the lock, our half swaps its token with the inner token:

- if we get the LOCK token, we now have the lock;
- if we get the NULL token, we swap again and loop;
- if we get the WAKE token, we store our waker in the inner struct and swap again; if we then get the LOCK token we now have the lock, otherwise we return `Poll::Pending`.
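The poll step above can be sketched in Rust as follows, assuming the waker slot is an `UnsafeCell<Option<Waker>>` that only the current WAKE holder touches. `Inner`, `Half`, `new_pair`, `CountWake` and the `u8` token values are hypothetical, and this is a single-threaded illustration under those assumptions rather than the PR's implementation:

```rust
use std::cell::UnsafeCell;
use std::sync::atomic::{AtomicU8, AtomicUsize, Ordering};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Hypothetical token encoding (not taken from the PR).
const NULL: u8 = 0;
const WAKE: u8 = 1;
const LOCK: u8 = 2;

struct Inner {
    token: AtomicU8,
    // Only the half currently holding WAKE may read or write this slot.
    // A real lock would also need `unsafe impl Sync for Inner`, justified by
    // the token protocol; this single-threaded sketch does without it.
    waker: UnsafeCell<Option<Waker>>,
}

struct Half {
    inner: Arc<Inner>,
    token: u8,
}

/// Hypothetical constructor: inner = LOCK, first half = WAKE, second = NULL.
fn new_pair() -> (Arc<Inner>, Half, Half) {
    let inner = Arc::new(Inner { token: AtomicU8::new(LOCK), waker: UnsafeCell::new(None) });
    let a = Half { inner: inner.clone(), token: WAKE };
    let b = Half { inner: inner.clone(), token: NULL };
    (inner, a, b)
}

impl Half {
    /// Takes `&mut self` because it rewrites the half's own token.
    fn poll_lock(&mut self, cx: &mut Context<'_>) -> Poll<()> {
        let mut got = self.inner.token.swap(self.token, Ordering::AcqRel);
        loop {
            match got {
                LOCK => {
                    self.token = LOCK;
                    return Poll::Ready(());
                }
                NULL => {
                    // Hand NULL back and inspect whatever the inner held.
                    got = self.inner.token.swap(NULL, Ordering::AcqRel);
                }
                _ => {
                    // WAKE: we own the waker slot until we swap WAKE away.
                    unsafe { *self.inner.waker.get() = Some(cx.waker().clone()) };
                    got = self.inner.token.swap(WAKE, Ordering::AcqRel);
                    self.token = got; // LOCK or NULL; we just gave WAKE away
                    return if got == LOCK { Poll::Ready(()) } else { Poll::Pending };
                }
            }
        }
    }
}

/// Test waker that counts how many times it was woken.
struct CountWake(AtomicUsize);

impl Wake for CountWake {
    fn wake(self: Arc<Self>) {
        self.0.fetch_add(1, Ordering::SeqCst);
    }
}

fn main() {
    let (inner, mut a, mut b) = new_pair();
    let counter = Arc::new(CountWake(AtomicUsize::new(0)));
    let waker = Waker::from(counter.clone());
    let mut cx = Context::from_waker(&waker);

    assert_eq!(a.poll_lock(&mut cx), Poll::Ready(())); // uncontended: a gets LOCK
    assert_eq!(b.poll_lock(&mut cx), Poll::Pending); // a still holds LOCK
    // Peek at the slot (fine here: single thread, no access in flight).
    assert!(unsafe { (*inner.waker.get()).is_some() });
    assert_eq!(counter.0.load(Ordering::SeqCst), 0); // nobody has woken b yet
}
```

In this sketch, if the second swap returns LOCK, our own waker stays in the slot and may later be woken once spuriously, which is harmless for a future. Every exit path also writes back into `self.token`, so the half's token is ordinary mutable state.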

To unlock the lock, our half swaps its token (which we know must be the LOCK token) with the inner token:

- if we get the NULL token, there is no contention, so we return;
- if we get the WAKE token, we wake the waker stored in the inner struct.
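A matching sketch of the unlock step, under the same hypothetical `Inner`/`Half` layout and `u8` encoding, treating the waker slot as an `Option` so that an empty slot is simply skipped (the slot can be empty when the half that started with WAKE locks and unlocks without contention). `half_with` is a hypothetical test helper that builds a half already holding LOCK:

```rust
use std::cell::UnsafeCell;
use std::sync::atomic::{AtomicU8, AtomicUsize, Ordering};
use std::sync::Arc;
use std::task::{Wake, Waker};

// Hypothetical token encoding (not taken from the PR).
const NULL: u8 = 0;
const WAKE: u8 = 1;
const LOCK: u8 = 2;

struct Inner {
    token: AtomicU8,
    // Only the current WAKE holder may touch this slot.
    waker: UnsafeCell<Option<Waker>>,
}

struct Half {
    inner: Arc<Inner>,
    token: u8,
}

impl Half {
    /// Release the lock; the caller must currently hold LOCK.
    fn unlock(&mut self) {
        debug_assert_eq!(self.token, LOCK, "unlock without holding LOCK");
        self.token = self.inner.token.swap(LOCK, Ordering::AcqRel);
        if self.token == WAKE {
            // We now own the waker slot: wake whatever waker was parked there.
            let parked = unsafe { (*self.inner.waker.get()).take() };
            if let Some(w) = parked {
                w.wake();
            }
        }
        // Otherwise we received NULL: no contention, nothing to wake.
    }
}

/// Hypothetical helper: a half holding LOCK, with `inner_token` and `slot`
/// describing the rest of the shared state.
fn half_with(inner_token: u8, slot: Option<Waker>) -> (Arc<Inner>, Half) {
    let inner = Arc::new(Inner {
        token: AtomicU8::new(inner_token),
        waker: UnsafeCell::new(slot),
    });
    let half = Half { inner: inner.clone(), token: LOCK };
    (inner, half)
}

/// Test waker that counts how many times it was woken.
struct CountWake(AtomicUsize);

impl Wake for CountWake {
    fn wake(self: Arc<Self>) {
        self.0.fetch_add(1, Ordering::SeqCst);
    }
}

fn main() {
    // Contended: the other half parked its waker and left WAKE in the inner.
    let counter = Arc::new(CountWake(AtomicUsize::new(0)));
    let (inner, mut a) = half_with(WAKE, Some(Waker::from(counter.clone())));
    a.unlock();
    assert_eq!(inner.token.load(Ordering::Acquire), LOCK); // lock is free again
    assert_eq!(counter.0.load(Ordering::SeqCst), 1); // parked task woken once

    // Uncontended: the inner held NULL, so there is nobody to wake.
    let (inner, mut c) = half_with(NULL, None);
    c.unlock();
    assert_eq!((c.token, inner.token.load(Ordering::Acquire)), (NULL, LOCK));
}
```

Unlocking never spins: it is a single swap plus, at most, one wake.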

Additionally, this change makes the bilock methods require `&mut self`.