Home

A cache stores a portion of the data retrieved from a slower media on a faster media, and the cache hit and miss rates are important factors in determining its performance. Since the time for cache misses is usually much grater than the time for cache hits, the miss rate (number of misses per second) has a significant impact on the performance.

When cache's capacity is exceeded, it has to select and evict existing entries to make some room. The choice of the eviction algorithm (policy) has direct impact on the hit and miss rates.

The Least Recently Used (LRU) is a popular eviction policy due to its simplicity and good hit rate in common scenarios. However, in typical workloads, LRU is not optimal and may have a poor hit rate in cases like full scans.

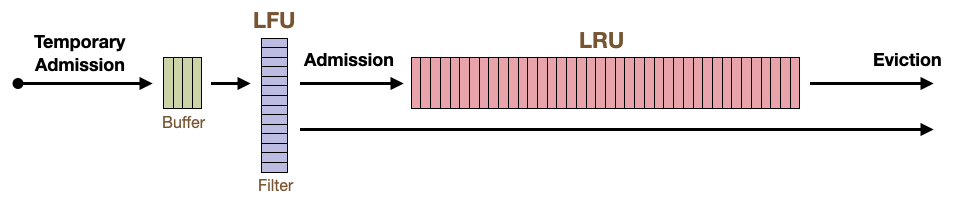

Moka is currently using the TinyLFU policy due to its high hit rate and low memory footprint.

It uses the Least Frequently Used (LFU) filter as the admission policy to determine whether to accept a new entry based on its popularity. The LFU filter relies on a frequency sketch to probabilistically estimate the historic usage of an entry. Once an entry is admitted to the cache, it will be managed by the LRU eviction policy implemented by a doubly linked list.

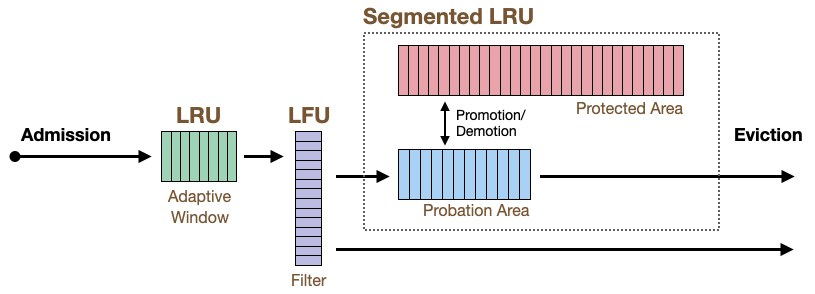

In the future, we will upgrade Moka's TinyLFU to the Window-TinyLFU (W-TinyLFU), which is used by Caffeine cache today. (Caffeine is a popular cache library for Java)

It has an admission LRU window in front of the LFU filter. The admission window prefers entries with high temporal (recency), while the LFU filter and segmented LRU prefer entries with high frequency (popularity). The admission window is adaptive, and the cache will automatically adjust the window size based on the characteristics of the workload.

Here are some benchmarking results. We used Caffeine cache's simulator written in Java, with a JNI-based Moka driver developed by us.

We are comparing Moka v0.7.0's hit ratio against the following cache policies available in the simulator:

- Bélády's optimal policy, for the theoretical upper bound.

- W-TinyLFU policy, used by Caffeine.

- LRU policy, popular in cache implementations.

The hit ratio was calculated by:

- number of hits / (number of hits + number of misses)

The simulator takes access traces in various formats to replay the workloads of real-world systems. It can also simulate many other eviction policies and supports some Java-based cache products. If you are curious, please see the configuration file for the complete list of supported policies and products.

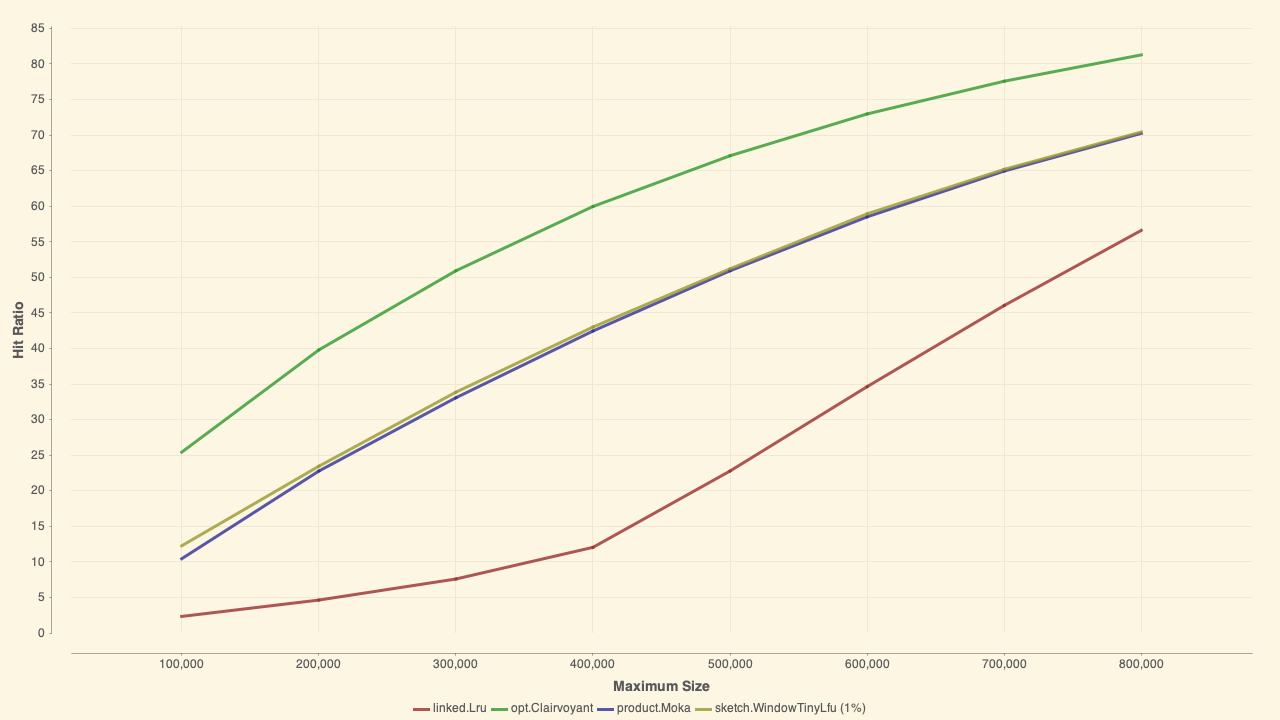

This trace is provided by the authors of the ARC algorithm and is described as "disk read accesses initiated by a large commercial search engine in response to various web search requests."

Higher is better.

- Green line: The optimal policy

- Olive line: W-TinyLFU policy

- Blue line: Moka v0.7.0

- Red line: LRU policy

Moka (TinyLFU) and W-TinyLFU showed results much closer to the optimal hit ratios than LRU policy.

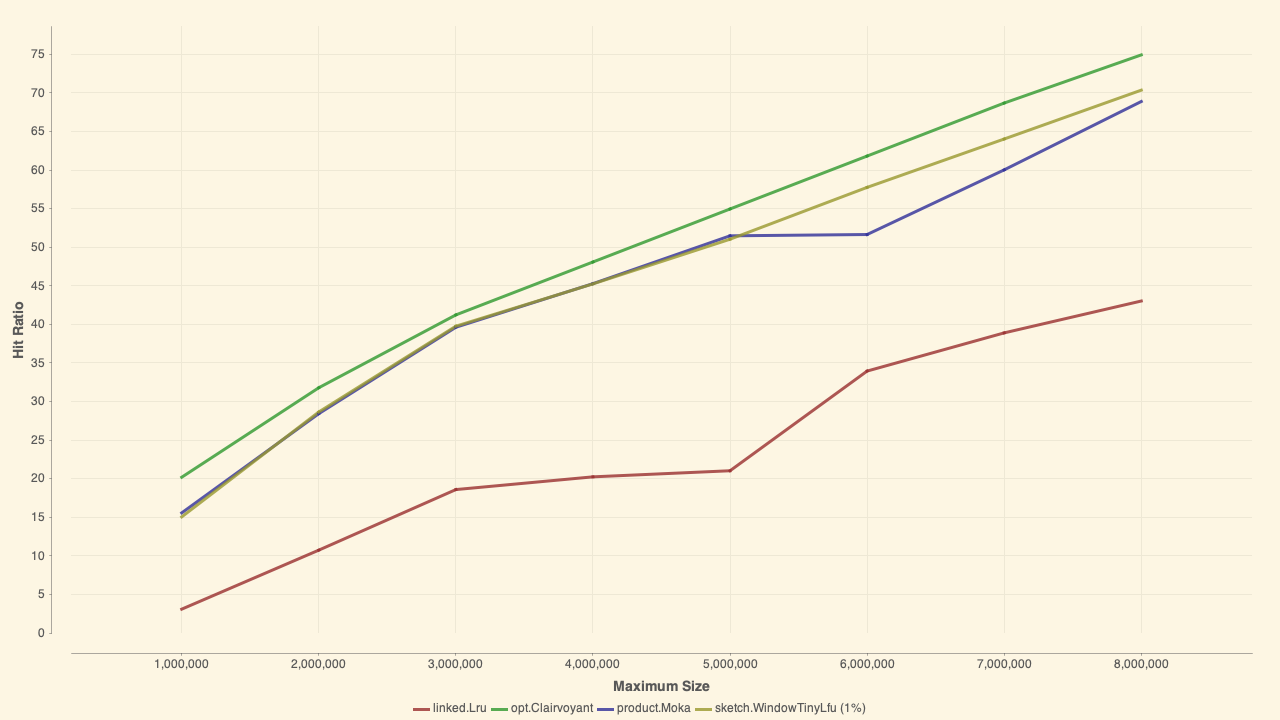

This trace is provided by the authors of the ARC algorithm and is described as "a database server running at a commercial site running an ERP application on top of a commercial database."

Higher is better.

- Green line: The optimal policy

- Olive line: W-TinyLFU policy

- Blue line: Moka v0.7.0

- Red line: LRU policy

Moka's hit ratio dropped when the max size was 6 million entries. W-TinyLFU showed no such trend and maintained nearly optimal hit ratios.

- Caffeine cache's Efficiency page, which has more detailed descriptions about advanced cache policies.

- TinyLFU: A Highly Efficient Cache Admission Policy

- ARC: A Self-Tuning, Low Overhead Replacement Cache