TODO: Investigate discounting.

A reimplementation of Causal Entropic Forces, guided by the pseudocode and equations in the supplementary material.

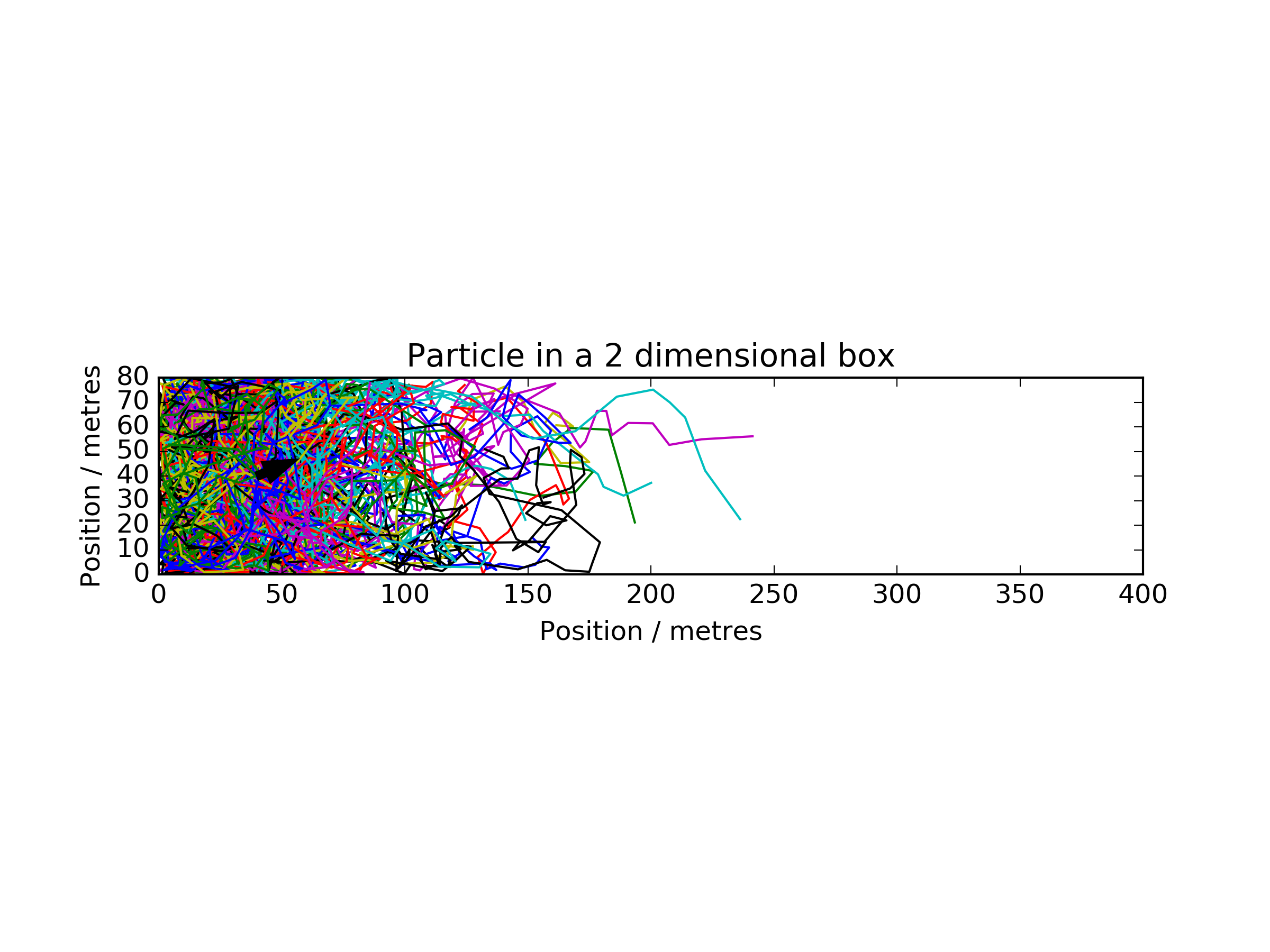

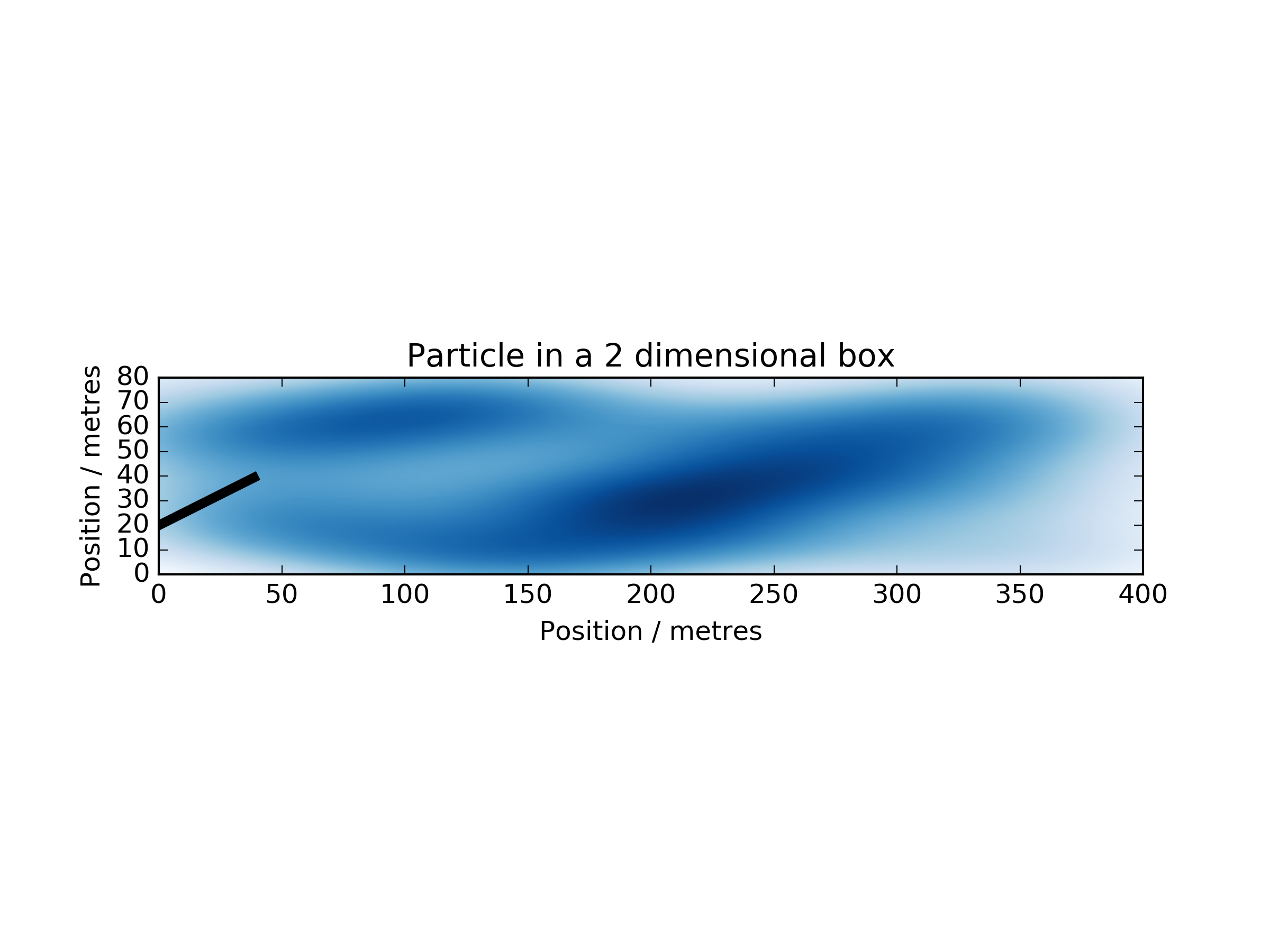

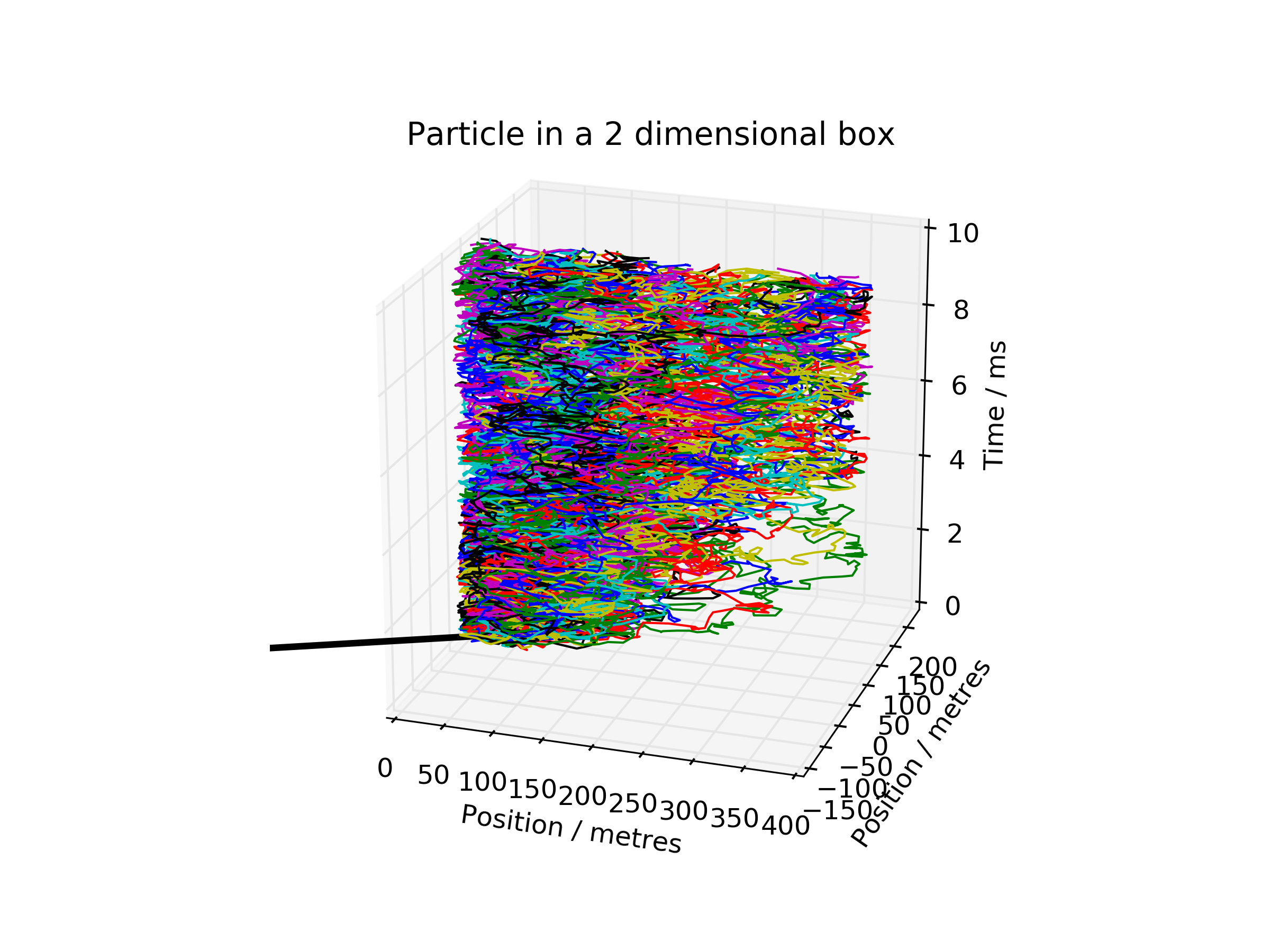

The algorithm finds policies for an environment without supervision, provided it can interact with the environment beforehand. It does so by iteratively choosing the next best move, shown in the diagrams as the thick black arrow.

First, it generates random walks throughout the environment to sample the evolution of the environment and agent.

For each destination at which a random walk ends, the algorithm computes the likelihood (conditional probability density) that the system will evolve to that destination. It then takes an average of all first steps, weighted using this likelihood, to find the step that maximises the number of potential futures.
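The sampling and weighting steps above can be sketched roughly as follows. This is a minimal illustration, not the code in agent.py: the helper names `sample_walks` and `estimate_move`, the unit-box environment, the Gaussian step distribution, and the choice of `scipy.stats.gaussian_kde` with a negative-log-density weight are all assumptions made for the example.

```python
import numpy as np
from scipy.stats import gaussian_kde


def sample_walks(start, num_paths, num_steps, step_scale, rng):
    """Sample random walks inside a unit box (hypothetical stand-in environment).

    Returns the first step of every walk and the position where each walk ends.
    """
    steps = rng.normal(scale=step_scale, size=(num_paths, num_steps, start.size))
    positions = np.tile(start, (num_paths, 1))
    for t in range(num_steps):
        # Walls are modelled by clipping each position back into [0, 1].
        positions = np.clip(positions + steps[:, t], 0.0, 1.0)
    return steps[:, 0], positions


def estimate_move(first_steps, endpoints):
    """Average the first steps, weighted by how unlikely each endpoint is."""
    # Kernel density estimate of the endpoint distribution (shape: dims x samples).
    density = gaussian_kde(endpoints.T)(endpoints.T)
    # Assumed weighting: rarer destinations (low density) count for more.
    weights = -np.log(density)
    return (weights[:, None] * first_steps).mean(axis=0)


rng = np.random.default_rng(0)
first_steps, endpoints = sample_walks(
    start=np.array([0.5, 0.5]), num_paths=200, num_steps=50, step_scale=0.05, rng=rng
)
move = estimate_move(first_steps, endpoints)
print(move)  # estimated direction of the next move (a 2-vector)
```

Repeating this estimate after every move would give the iterative behaviour described above. The estimate is noisy for small sample counts, so the number of sampled paths trades accuracy against run time.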

The first 50 steps are shown here as a light cone.

Python libraries: numpy, matplotlib, scipy

$ pip install numpy matplotlib scipy

Properties of the agent can be specified in config.json.

    {
        "environment" : "<name_of_python_file_with_environment_class>",
        "num_sample_paths" : 200, // number of paths to sample
        "plot" : true, // either true or false
        "steps" : 100, // number of steps within the policy
        "cur_macrostate" : null // for use in plotting light cones
    }
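Standard JSON has no comment syntax, so a file annotated with `//` comments cannot be passed straight to Python's `json` module. The sketch below shows one way to read such a file. The helper names `strip_line_comments` and `load_config` and the sample values (including the `"particleBox"` environment name) are illustrative assumptions and may differ from how agent.py reads its configuration.

```python
import json
import re

# Matches either a complete JSON string or a // comment running to end of line.
_TOKEN = re.compile(r'"(?:\\.|[^"\\])*"|//[^\n]*')


def strip_line_comments(text):
    """Remove // comments while leaving any // inside quoted strings untouched."""
    return _TOKEN.sub(
        lambda m: m.group(0) if m.group(0).startswith('"') else "", text
    )


def load_config(text):
    """Parse commented JSON text into a plain dictionary."""
    return json.loads(strip_line_comments(text))


# Hypothetical file contents, following the layout shown above.
EXAMPLE = """{
    "environment" : "particleBox",
    "num_sample_paths" : 200, // number of paths to sample
    "plot" : false,
    "steps" : 100, // number of steps within the policy
    "cur_macrostate" : null // for use in plotting light cones
}"""

config = load_config(EXAMPLE)
print(config["num_sample_paths"], config["steps"])
```

To read from disk, the same function can be fed the result of `open("config.json").read()`.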

To design your own environment, ensure that it provides the same methods and variables as those in particleBox.py. The agent can then be run with the following command.

$ python agent.py

For more code and other derivative agents, download the v0.5 release.