A set of Kubernetes controllers that automate Kubernetes cluster upgrades using Cluster API's ClusterClass.

This project is a set of Kubernetes controllers that build Kubernetes machine images using upstream image-builder, and then use those images to upgrade Kubernetes clusters that are using Cluster API's ClusterClass.

This should work with any infrastructure provider supported by image-builder, but it has only been tested with the Docker (CAPD) and vSphere (CAPV) providers.

The design of the API and controllers takes inspiration from Cluster API, including:

- There are template types that are used to create the actual types. This is similar to CAPI's bootstrap and infrastructure templates.

- Some of the types comply with API contracts and share information through status fields. This is similar to CAPI's infrastructure provider contracts and allows external controller implementations.
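A contract means a consumer only needs to know where a field lives, not which concrete type it reads. For example, the `MachineImageSyncer` contract only requires a `status.versions` field. The sketch below reads `status.versions` from any object treated as generic JSON, similar to an unstructured Kubernetes object. It assumes `status.versions` is a list of version strings. `versionsFromSource` and the `MyCustomSource` kind are hypothetical, and this is not the project's code.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// versionsFromSource is a hypothetical helper: it extracts status.versions
// from any object, regardless of its kind, by decoding it as generic JSON.
// Assumption: status.versions is a list of strings.
func versionsFromSource(raw []byte) ([]string, error) {
	var obj map[string]interface{}
	if err := json.Unmarshal(raw, &obj); err != nil {
		return nil, err
	}
	status, ok := obj["status"].(map[string]interface{})
	if !ok {
		return nil, errors.New("object has no status")
	}
	list, ok := status["versions"].([]interface{})
	if !ok {
		return nil, errors.New("status.versions is missing or not a list")
	}
	versions := make([]string, 0, len(list))
	for _, v := range list {
		s, ok := v.(string)
		if !ok {
			return nil, fmt.Errorf("status.versions contains a non-string value: %v", v)
		}
		versions = append(versions, s)
	}
	return versions, nil
}

func main() {
	// Two different kinds: only the status.versions field matters to the consumer.
	debian := []byte(`{"kind":"DebianRepositorySource","status":{"versions":["v1.27.1","v1.27.2"]}}`)
	custom := []byte(`{"kind":"MyCustomSource","spec":{"foo":"bar"},"status":{"versions":["v1.27.3"]}}`)
	for _, raw := range [][]byte{debian, custom} {
		versions, err := versionsFromSource(raw)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(versions)
	}
}
```

Because the consumer ignores everything except the contract field, a new source type can be added without changing the consumer.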

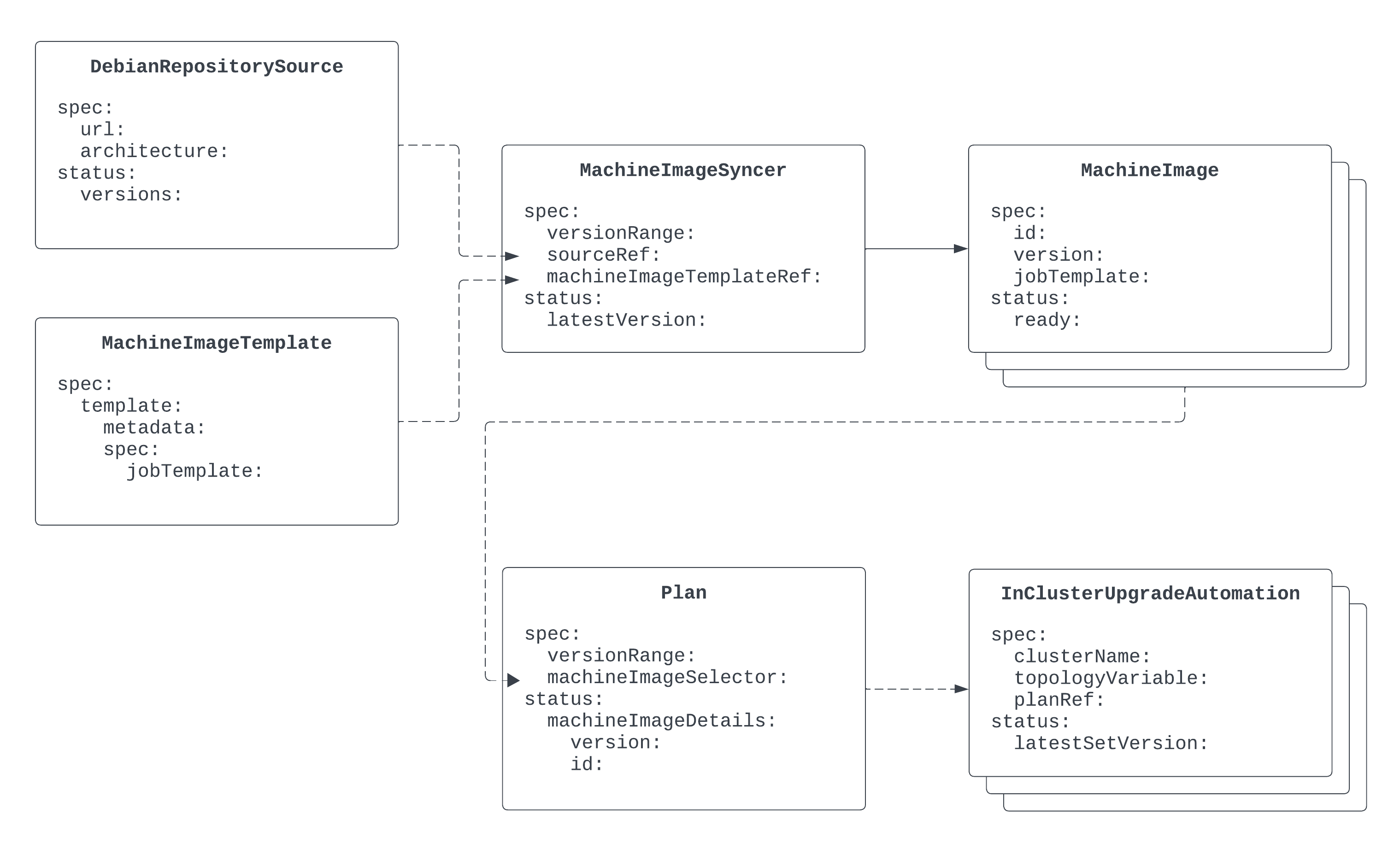

- `DebianRepositorySource` satisfies the `MachineImageSyncer` contract. It fetches all available versions from a Kubernetes Debian repository and sets `status.versions`.
- `MachineImageTemplate` is a template for `MachineImage`, used by `MachineImageSyncer`.
- `MachineImageSyncer` creates a new `MachineImage` object with the latest version from the `sourceRef` object. `DebianRepositorySource` is one implementation of the contract, but any type can be used as long as it sets the `status.versions` field.
- `MachineImage` runs a Job to build a Kubernetes machine image. The examples use image-builder, but any tool can be used as long as it generates a Kubernetes machine image and labels the Job with `kubernetesupgraded.dimitrikoshkin.com/image-id`. The controller sets `status.ready` once the Job succeeds and copies the label value to `spec.id`.
- `Plan` finds the latest `MachineImage` whose `spec.version` is within `spec.versionRange` and that matches an optional `machineImageSelector`. It then sets `status.machineImageDetails` with the image's `version` and `id`.
- `InClusterUpgradeAutomation` satisfies the `Plan` contract. It updates `spec.topology.version` and, optionally, the variable named by `topologyVariable` in the CAPI Cluster, using the values from the Plan's `status.machineImageDetails`.
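The `Plan` selection step can be sketched in Go. This is a minimal illustration, not the controller's code. It assumes `spec.versionRange` can be modeled as a half-open `[min, max)` pair of `vMAJOR.MINOR.PATCH` versions, and that `machineImageSelector` behaves like label equality (`matchLabels`). The `MachineImage` struct, `latestInRange`, and the `os` label are hypothetical. The returned image's version and ID correspond to what would go into `status.machineImageDetails`.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// MachineImage is a hypothetical, simplified stand-in for the CRD.
type MachineImage struct {
	Version string            // spec.version, e.g. "v1.27.3"
	ID      string            // spec.id
	Labels  map[string]string // metadata.labels
}

// parseVersion turns "v1.27.3" into [1 27 3]. Pre-release suffixes are not handled.
func parseVersion(v string) ([3]int, error) {
	var out [3]int
	parts := strings.Split(strings.TrimPrefix(v, "v"), ".")
	if len(parts) != 3 {
		return out, fmt.Errorf("invalid version %q", v)
	}
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil {
			return out, fmt.Errorf("invalid version %q: %w", v, err)
		}
		out[i] = n
	}
	return out, nil
}

// compare returns -1, 0 or 1, comparing numerically (so v1.27.10 > v1.27.9).
func compare(a, b [3]int) int {
	for i := 0; i < 3; i++ {
		if a[i] < b[i] {
			return -1
		}
		if a[i] > b[i] {
			return 1
		}
	}
	return 0
}

// matches reports whether labels contain every key/value in selector.
// An empty or nil selector matches everything.
func matches(labels, selector map[string]string) bool {
	for k, v := range selector {
		if labels[k] != v {
			return false
		}
	}
	return true
}

// latestInRange returns the newest image with minV <= version < maxV that
// matches the selector. The half-open range is an assumption.
func latestInRange(images []MachineImage, minV, maxV string, selector map[string]string) (MachineImage, bool) {
	lo, err := parseVersion(minV)
	if err != nil {
		return MachineImage{}, false
	}
	hi, err := parseVersion(maxV)
	if err != nil {
		return MachineImage{}, false
	}
	var best MachineImage
	var bestV [3]int
	found := false
	for _, img := range images {
		v, err := parseVersion(img.Version)
		if err != nil || compare(v, lo) < 0 || compare(v, hi) >= 0 || !matches(img.Labels, selector) {
			continue
		}
		if !found || compare(v, bestV) > 0 {
			best, bestV, found = img, v, true
		}
	}
	return best, found
}

func main() {
	images := []MachineImage{
		{Version: "v1.26.3", ID: "img-a", Labels: map[string]string{"os": "ubuntu"}},
		{Version: "v1.27.1", ID: "img-b", Labels: map[string]string{"os": "ubuntu"}},
		{Version: "v1.27.4", ID: "img-c", Labels: map[string]string{"os": "flatcar"}},
		{Version: "v1.27.3", ID: "img-d", Labels: map[string]string{"os": "ubuntu"}},
	}
	img, ok := latestInRange(images, "v1.27.0", "v1.28.0", map[string]string{"os": "ubuntu"})
	fmt.Println(ok, img.Version, img.ID) // true v1.27.3 img-d
}
```

Comparing versions numerically rather than as strings matters once patch releases reach two digits.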

You’ll need a Kubernetes cluster to run against. You can use KIND to get a local cluster for testing, or run against a remote cluster.

Note: Your controller will automatically use the current context in your kubeconfig file (i.e., whatever cluster `kubectl cluster-info` shows).

The steps below deploy the controllers and a sample that builds a vSphere OVA. Read the upstream image-builder documentation for how to configure the vSphere provider.

Deploy the CRDs and the controllers:

```sh
kubectl apply -f https://github.com/dkoshkin/kubernetes-upgrader/releases/latest/download/components.yaml
```

Export the `VSPHERE_*` environment variables, then create a Secret with the vSphere credentials (the `EOF` delimiter is unquoted so that the shell substitutes the variables):

```sh
cat << EOF > vsphere.json
{
  "vcenter_server": "$VSPHERE_SERVER",
  "insecure_connection": "true",
  "username": "$VSPHERE_USERNAME",
  "password": "$VSPHERE_PASSWORD",
  "vsphere_datacenter": "$VSPHERE_DATACENTER",
  "cluster": "$VSPHERE_CLUSTER",
  "datastore": "$VSPHERE_DATASTORE",
  "folder": "$VSPHERE_TEMPLATE_FOLDER",
  "network": "$VSPHERE_NETWORK",
  "convert_to_template": "true"
}
EOF
kubectl create secret generic image-builder-vsphere-vars --from-file=vsphere.json
```
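The same `vsphere.json` can also be generated from Go, which avoids a shell quoting pitfall: `json.MarshalIndent` escapes values such as a password containing `"`, which would otherwise produce invalid JSON in a heredoc. This is a hypothetical helper, not part of the project. The keys are copied from the vSphere Secret step, and the `VSPHERE_*` variable names are the same ones that step expects.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// buildVSphereVars is a hypothetical helper that builds image-builder's
// vsphere.json from VSPHERE_* variables. json.MarshalIndent escapes special
// characters and sorts the keys.
func buildVSphereVars(getenv func(string) string) ([]byte, error) {
	vars := map[string]string{
		"vcenter_server":      getenv("VSPHERE_SERVER"),
		"insecure_connection": "true",
		"username":            getenv("VSPHERE_USERNAME"),
		"password":            getenv("VSPHERE_PASSWORD"),
		"vsphere_datacenter":  getenv("VSPHERE_DATACENTER"),
		"cluster":             getenv("VSPHERE_CLUSTER"),
		"datastore":           getenv("VSPHERE_DATASTORE"),
		"folder":              getenv("VSPHERE_TEMPLATE_FOLDER"),
		"network":             getenv("VSPHERE_NETWORK"),
		"convert_to_template": "true",
	}
	// Fail early instead of writing empty values into the Secret.
	for key, value := range vars {
		if value == "" {
			return nil, fmt.Errorf("%s is empty; is the matching VSPHERE_* variable exported?", key)
		}
	}
	return json.MarshalIndent(vars, "", "  ")
}

func main() {
	data, err := buildVSphereVars(os.Getenv)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// 0600: the file contains credentials.
	if err := os.WriteFile("vsphere.json", data, 0o600); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```

Passing the lookup function as a parameter, instead of calling `os.Getenv` directly, keeps the helper testable without touching the process environment.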

Deploy the samples:

```sh
kubectl apply -f https://github.com/dkoshkin/kubernetes-upgrader/releases/latest/download/example-vsphere-with-job-template.yaml
```

The controller will create a Job to build the image; after some time you should see the image in the vSphere UI. Check the status of the `MachineImage` to see if the image was built successfully:

```sh
kubectl get MachineImage -o yaml
```

You should see `status.ready` set to `true` and `spec.id` set to the newly created OVA template.
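The way `status.ready` and `spec.id` get populated can be sketched as follows. According to the `MachineImage` description in this README, the controller waits for the build Job to succeed and then copies the value of the `kubernetesupgraded.dimitrikoshkin.com/image-id` Job label into `spec.id`. The trimmed-down `Job` and `MachineImage` structs and the `syncFromJob` function are hypothetical illustrations, not the controller's code.

```go
package main

import "fmt"

// imageIDLabel is the Job label named in this README.
const imageIDLabel = "kubernetesupgraded.dimitrikoshkin.com/image-id"

// Job is a hypothetical, simplified view of a batch/v1 Job.
type Job struct {
	Labels    map[string]string
	Succeeded int32 // number of succeeded Pods, as in JobStatus.Succeeded
}

// MachineImage is a hypothetical, simplified view of the CRD.
type MachineImage struct {
	SpecID      string // spec.id
	StatusReady bool   // status.ready
}

// syncFromJob applies the documented rule: once the Job has succeeded,
// copy the image-id label into spec.id and mark the MachineImage ready.
func syncFromJob(img *MachineImage, job Job) error {
	if job.Succeeded < 1 {
		return nil // still building; a real controller would check again later
	}
	id, ok := job.Labels[imageIDLabel]
	if !ok || id == "" {
		return fmt.Errorf("job succeeded but has no %s label", imageIDLabel)
	}
	img.SpecID = id
	img.StatusReady = true
	return nil
}

func main() {
	img := &MachineImage{}
	job := Job{Labels: map[string]string{imageIDLabel: "ubuntu-2204-kube-v1.27.3"}, Succeeded: 1}
	if err := syncFromJob(img, job); err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(img.StatusReady, img.SpecID) // true ubuntu-2204-kube-v1.27.3
}
```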

To run the controllers locally with the Docker provider (CAPD) instead, you'll need a Kubernetes cluster to run against.

Follow CAPI's Quickstart documentation to create a cluster using KIND and the Docker provider.

Use Kubernetes version v1.26.3 if you plan to use the sample config.

Create a KIND bootstrap cluster:

```sh
kind create cluster --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  ipFamily: dual
nodes:
- role: control-plane
  extraMounts:
    - hostPath: /var/run/docker.sock
      containerPath: /var/run/docker.sock
EOF
```

Deploy the CAPI providers (`CLUSTER_TOPOLOGY=true` enables the ClusterTopology feature that ClusterClass requires):

```sh
export CLUSTER_TOPOLOGY=true
clusterctl init --infrastructure docker --wait-providers
```

Create a workload cluster:

```sh
# The list of service CIDR, default ["10.128.0.0/12"]
export SERVICE_CIDR=["10.96.0.0/12"]
# The list of pod CIDR, default ["192.168.0.0/16"]
export POD_CIDR=["192.168.0.0/16"]
# The service domain, default "cluster.local"
export SERVICE_DOMAIN="k8s.test"
# Create the cluster
clusterctl generate cluster capi-quickstart --flavor development \
  --kubernetes-version v1.26.3 \
  --control-plane-machine-count=1 \
  --worker-machine-count=1 | kubectl apply -f -
```

Generate the component manifests and build the image:

```sh
make release-snapshot
```

If you are using a local KIND cluster, load the image into it:

```sh
kind load docker-image ghcr.io/dkoshkin/kubernetes-upgrader:$(gojq -r '.version' dist/metadata.json)
```

Otherwise, push the image to a registry accessible by the cluster:

```sh
make docker-push IMG=ghcr.io/dkoshkin/kubernetes-upgrader:$(gojq -r '.version' dist/metadata.json)
```

Deploy the controller to the cluster with the image specified by `IMG`:

```sh
make deploy IMG=ghcr.io/dkoshkin/kubernetes-upgrader:$(gojq -r '.version' dist/metadata.json)
```
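The image tag in the commands above comes from the `version` field of `dist/metadata.json`. If you script these steps in Go rather than with `gojq`, a minimal equivalent could look like the sketch below. It assumes that `make release-snapshot` runs GoReleaser, which writes `dist/metadata.json` with a top-level `version` string. `imageRef` is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"os"
)

// imageRef reads the top-level "version" field from the release metadata
// (the value `gojq -r '.version'` extracts) and appends it to repo as a tag.
func imageRef(repo string, metadata []byte) (string, error) {
	var meta struct {
		Version string `json:"version"`
	}
	if err := json.Unmarshal(metadata, &meta); err != nil {
		return "", err
	}
	if meta.Version == "" {
		return "", errors.New("metadata has no version field")
	}
	return repo + ":" + meta.Version, nil
}

func main() {
	data, err := os.ReadFile("dist/metadata.json")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	ref, err := imageRef("ghcr.io/dkoshkin/kubernetes-upgrader", data)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(ref)
}
```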

Deploy the Docker samples:

```sh
kubectl apply -f config/samples/example-docker-static.yaml
```

You should see Pods created for the Jobs that "build" an image, and the Cluster will be upgraded to the newest v1.27.x Kubernetes version.
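The upgrade itself happens when `InClusterUpgradeAutomation` applies the Plan's `status.machineImageDetails` to the CAPI Cluster. The sketch below applies that step to a generic (unstructured) representation of the Cluster. It is an illustration under assumptions, not the controller's code. It assumes the variable named by `topologyVariable` takes the image ID directly as its value, and that a missing variable is appended. `applyPlan`, `PlanDetails`, and the `imageID` variable name are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
)

// PlanDetails mirrors the Plan's status.machineImageDetails (version and id).
type PlanDetails struct {
	Version string
	ID      string
}

// applyPlan sets spec.topology.version and, if variableName is non-empty,
// sets that entry of spec.topology.variables to the image ID (assumption).
func applyPlan(cluster map[string]interface{}, details PlanDetails, variableName string) error {
	spec, ok := cluster["spec"].(map[string]interface{})
	if !ok {
		return errors.New("cluster has no spec")
	}
	topology, ok := spec["topology"].(map[string]interface{})
	if !ok {
		return errors.New("cluster does not use a ClusterClass topology")
	}
	topology["version"] = details.Version
	if variableName == "" {
		return nil
	}
	variables, _ := topology["variables"].([]interface{})
	for _, v := range variables {
		entry, ok := v.(map[string]interface{})
		if ok && entry["name"] == variableName {
			entry["value"] = details.ID
			return nil
		}
	}
	// Assumption: a missing variable is appended rather than treated as an error.
	topology["variables"] = append(variables, map[string]interface{}{"name": variableName, "value": details.ID})
	return nil
}

func main() {
	cluster := map[string]interface{}{
		"spec": map[string]interface{}{
			"topology": map[string]interface{}{
				"version": "v1.26.3",
				"variables": []interface{}{
					map[string]interface{}{"name": "imageID", "value": "old-template"},
				},
			},
		},
	}
	details := PlanDetails{Version: "v1.27.3", ID: "ubuntu-2204-kube-v1.27.3"}
	if err := applyPlan(cluster, details, "imageID"); err != nil {
		fmt.Println("error:", err)
		return
	}
	topology := cluster["spec"].(map[string]interface{})["topology"].(map[string]interface{})
	fmt.Println(topology["version"], topology["variables"])
}
```

Changing `spec.topology.version` is what prompts the CAPI topology controller to roll out the upgrade, first to the control plane and then to the worker machines.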

Undeploy the controller from the cluster:

```sh
make undeploy
```

This project aims to follow the Kubernetes Operator pattern.

It uses Controllers, which provide a reconcile function responsible for synchronizing resources until the desired state is reached on the cluster.

If you are editing the API definitions, generate the manifests, such as CRs or CRDs, using:

```sh
make manifests
```

NOTE: Run `make help` for more information on all available make targets.

More information can be found in the Kubebuilder documentation.