A free and open-source inpainting tool powered by SOTA AI models.


- Completely free and open-source

- Fully self-hosted

- Classical image inpainting algorithm powered by cv2

- Multiple SOTA AI models

- Supports CPU and GPU

- Various inpainting strategies

- Runs as a desktop app

1. Remove any unwanted things on the image

| Usage | Before | After |

|---|---|---|

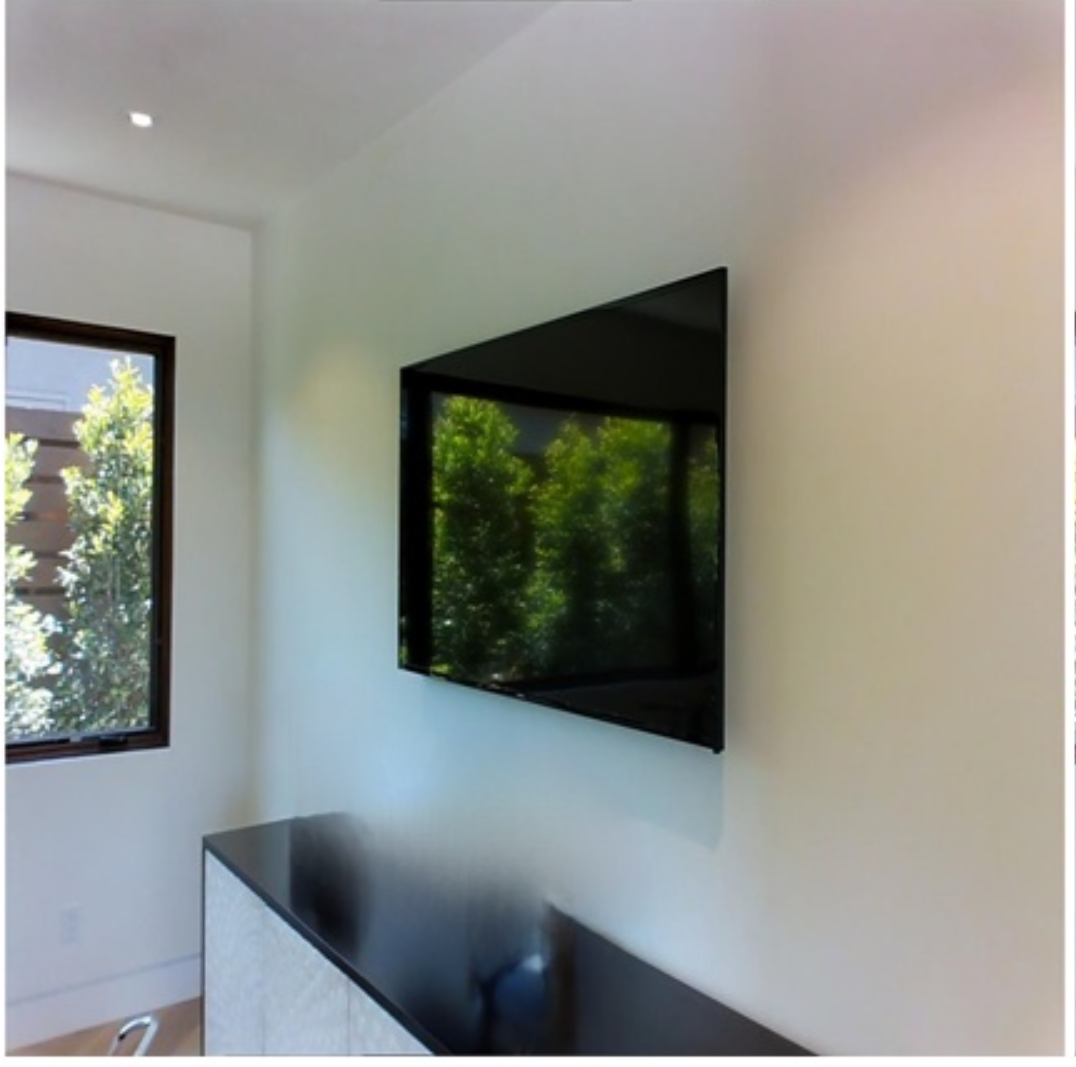

| Remove unwanted things |  |

|

| Remove unwanted person |  |

|

| Remove Text |  |

|

| Remove watermark |  |

|

3. Replace something on the image

| Usage | Before | After |
|---|---|---|
| Text Driven Inpainting | | Prompt: a fox sitting on a bench |

The easiest way to use Lama Cleaner is to install it using pip:

    pip install lama-cleaner

    # Models are downloaded the first time they are used
    lama-cleaner --model=lama --device=cpu --port=8080

    # Lama Cleaner is now running at http://localhost:8080

For the stable-diffusion model, you need to accept the model's terms of access and get a Hugging Face access token.

If you prefer to use Docker, see the Docker instructions below.

If you are not familiar with Docker or pip, please check the One Click Installer.

Available command line arguments:

| Name | Description | Default |
|---|---|---|
| --model | lama/ldm/zits/mat/fcf/sd1.5. See details in Inpaint Model | lama |
| --hf_access_token | stable-diffusion needs a Hugging Face access token to download the model | |
| --sd-run-local | Once the model has been downloaded, you can pass this arg and remove --hf_access_token | |
| --sd-disable-nsfw | Disable stable-diffusion NSFW checker. | |
| --sd-cpu-textencoder | Always run stable-diffusion TextEncoder model on CPU. | |
| --device | cuda or cpu | cuda |
| --port | Port for backend flask web server | 8080 |
| --gui | Launch lama-cleaner as a desktop application | |
| --gui_size | Set the window size for the application | 1200 900 |
| --input | Path to image you want to load by default | None |
| --debug | Enable debug mode for flask web server | |
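As a rough sketch of how the flags in the table above combine, the script below assembles a Stable Diffusion launch command. `HF_TOKEN` is a hypothetical variable name used only for this illustration (it is not something lama-cleaner reads on its own), and `YOUR_TOKEN` is a placeholder. The script only prints the command, so nothing is started until you run it yourself.

```shell
#!/bin/sh
# HF_TOKEN is a hypothetical variable name used only in this sketch;
# replace the placeholder with your own Hugging Face access token.
HF_TOKEN="${HF_TOKEN:-YOUR_TOKEN}"

# Flags taken from the argument table above.
CMD="lama-cleaner --model=sd1.5 --hf_access_token=$HF_TOKEN --device=cuda --port=8080"

# Print the command for review instead of launching the server.
echo "$CMD"
```

According to the table, once the model has been downloaded you can pass `--sd-run-local` and drop `--hf_access_token`.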

| Model | Description | Config |
|---|---|---|
| cv2 | 👍 No GPU is required, and for simple backgrounds, the results may even be better than AI models. | |
| LaMa | 👍 Generalizes well on high resolutions (~2k) | |
| LDM | 👍 Possible to get better and more detailed results 👍 The balance of time and quality can be achieved by adjusting steps 😐 Slower than GAN models 😐 Needs more GPU memory | Steps: You can get better results with more steps, but it will be more time-consuming. Sampler: ddim or plms. In general, plms can get better results with fewer steps |
| ZITS | 👍 Better holistic structures compared with previous methods 😐 Wireframe module is very slow on CPU | Wireframe: Enable edge and line detection |
| MAT | TODO | |
| FcF | 👍 Better structure and texture generation 😐 Only supports fixed size (512x512) input | |
| SD1.5 | 👍 SOTA text-to-image diffusion model | |

See model comparison detail

LaMa vs LDM

| Original Image | LaMa | LDM |
|---|---|---|
| | | |

LaMa vs ZITS

| Original Image | ZITS | LaMa |
|---|---|---|
| | | |

The image is from the ZITS paper. I didn't find a good example to show the advantages of ZITS; let me know if you have one. There may also be problems with my code; if you find any, please let me know too!

LaMa vs FcF

| Original Image | LaMa | FcF |
|---|---|---|
| | | |

Lama Cleaner provides three ways to run the inpainting model on images; you can switch between them in the settings dialog.

| Strategy | Description | VRAM | Speed |
|---|---|---|---|
| Original | Use the resolution of the original image | High | ⚡ |
| Resize | Resize the image to a smaller size before inpainting. The area outside the mask will not lose quality. | Medium | ⚡ ⚡ |
| Crop | Crop the masked area from the original image and inpaint only that area | Low | ⚡ ⚡ ⚡ |

If you have problems downloading the model automatically when lama-cleaner starts, you can download it manually. By default, lama-cleaner loads models from `TORCH_HOME=~/.cache/torch/hub/checkpoints/`; you can set `TORCH_HOME` to another folder and put the models there.
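A minimal sketch of preparing a custom model folder for the manual download described above. The folder name `lama-models` is hypothetical. The `hub/checkpoints` subfolder is an assumption based on PyTorch's usual hub layout, so check where your installed version actually looks for model files.

```shell
#!/bin/sh
# Hypothetical folder for manually downloaded models; any writable path works.
export TORCH_HOME="${TORCH_HOME:-$HOME/lama-models}"

# Assumption: PyTorch's hub layout, where checkpoints live in hub/checkpoints.
CKPT_DIR="$TORCH_HOME/hub/checkpoints"
mkdir -p "$CKPT_DIR"
echo "Place the downloaded model files in: $CKPT_DIR"

# Then start the server from the same shell so it inherits TORCH_HOME:
# lama-cleaner --model=lama --device=cpu --port=8080
```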

The following steps are only needed if you plan to modify the frontend and recompile it yourself.

The frontend code is modified from cleanup.pictures; you can try their great online service there.

- Install dependencies: `cd lama_cleaner/app/ && yarn`

- Start development server: `yarn start`

- Build: `yarn build`

You can use a pre-built Docker image to run Lama Cleaner. The model is downloaded to the cache directory the first time it is used. You can mount an existing cache directory when starting the container, so the model does not have to be downloaded again every time the container starts.

The cache directories for different models correspond as follows:

- lama/ldm/zits/mat/fcf: /root/.cache/torch

- sd1.5: /root/.cache/huggingface

    docker run -p 8080:8080 \
    -v /path/to/torch_cache:/root/.cache/torch \
    -v /path/to/huggingface_cache:/root/.cache/huggingface \
    --rm cwq1913/lama-cleaner:cpu-0.24.4 \
    lama-cleaner --device=cpu --port=8080 --host=0.0.0.0
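To keep downloaded models between container runs, the host cache folders can be created once and then mounted. The sketch below assumes a hypothetical host folder, `lama-cleaner-cache`, in your home directory. It only creates the folders and prints the resulting `docker run` command, using the container paths and image tag from the CPU example above.

```shell
#!/bin/sh
# CACHE_ROOT is a hypothetical host location chosen for this sketch.
CACHE_ROOT="${CACHE_ROOT:-$HOME/lama-cleaner-cache}"

# One host folder per container cache directory listed above.
mkdir -p "$CACHE_ROOT/torch" "$CACHE_ROOT/huggingface"

# Assemble the command for review; it is printed, not executed.
DOCKER_CMD="docker run -p 8080:8080 \
-v $CACHE_ROOT/torch:/root/.cache/torch \
-v $CACHE_ROOT/huggingface:/root/.cache/huggingface \
--rm cwq1913/lama-cleaner:cpu-0.24.4 \
lama-cleaner --device=cpu --port=8080 --host=0.0.0.0"
echo "$DOCKER_CMD"
```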

- cuda11.6

- pytorch1.12.1

- minimum nvidia driver 510.39.01+

    docker run --gpus all -p 8080:8080 \
    -v /path/to/torch_cache:/root/.cache/torch \
    -v /path/to/huggingface_cache:/root/.cache/huggingface \
    --rm cwq1913/lama-cleaner:gpu-0.24.4 \
    lama-cleaner --device=cuda --port=8080 --host=0.0.0.0

Then open http://localhost:8080

cpu only

    docker build -f ./docker/CPUDockerfile --build-arg version=0.x.0 -t lamacleaner .

gpu & cpu

    docker build -f ./docker/GPUDockerfile --build-arg version=0.x.0 -t lamacleaner .