New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Pool commands #1590

base: main

Are you sure you want to change the base?

Pool commands #1590

Conversation

|

No change on fortunes, so tried on updates:

|

|

@roji I think we have tested this approach by the past |

|

@roji does the command reparse the cmd string (to convert to from ado => postgres format, e.g. |

|

Raised issue npgsql/npgsql#3200; it reduces allocations but reparses the query and generates a new one in pg format for each execution |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sorry for disappearing here, personal issues.

Yeah, at some point I tested an approach of pooling the ADO.NET facade objects (we can do the same for NpgsqlConnection BTW), and didn't get convincing results - the way I understood it, the overhead of pooling synchronization isn't worth it for very light-weight objects. It's true that skipping the SQL parsing is a more convincing argument, but see npgsql/npgsql#3200 (comment) about improvements in 6.0 which should obviate all that anyway. Also, when I last benchmarked fortunes, SQL parsing was pretty negligible (SQL is very small and doesn't even contain parameters).

But we can definitely revisit all this.

| @@ -237,7 +259,55 @@ private async Task<World> ReadSingleRow(NpgsqlCommand cmd) | |||

| } | |||

| } | |||

|

|

|||

| private static readonly object[] _cacheKeys = Enumerable.Range(0, 10001).Select((i) => new CacheKey(i)).ToArray(); | |||

| internal class SqlFortuneCommand : IDisposable | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Are these wrapper classes actually necessary, why not pool NpgsqlCommand directly? As far as I can tell they're mainly there to enqueue back when disposing, but that can just be done by the code using the command instead of disposing, no?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Mainly so the callsites are just a using block and doon't have to worry about pooling e.g. its

using (var cmd = CreateReadCommand())

{

cmd.Connection = db;

// do something with pooled command

}There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK. Moving the pooling logic here and removing the wrappers might make a tiny bit of difference too.

All the SQL other than the fortunes benchmark contains parameters? |

| { | ||

| cmd.Connection = db; | ||

| var param = cmd.Parameter; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In npgsql/npgsql#3200 (comment) you say

The typical scenario of reexecuting the same command with the same SQL also does it on the same connection, in which case explicit preparation is the right choice and bypasses everything.

Since this is executed 20 times just changing the value, should it do a prepare here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should definitely give it a try... I don't pay enough attention as I should to the non-Fortunes benchmarks.

Automatic preparation still has the advantage of doing one less roundtrip - the first execution prepares and executes in the same go, where with explicit preparation they're split. But as you add more executions for a single initial prepare the impact of that goes down.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

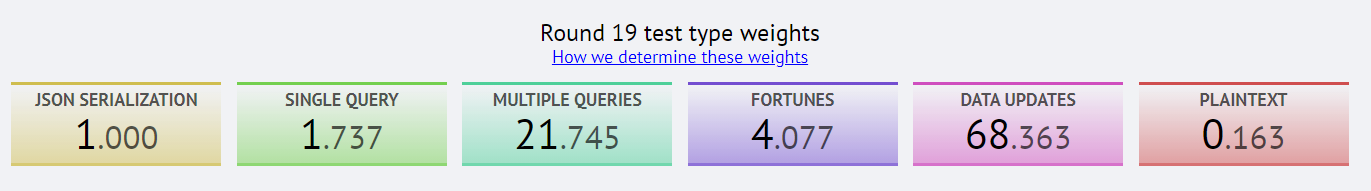

Ye olde score multiplier for composite scores

Automatic preparation still has the advantage of doing one less roundtrip - the first execution prepared and executes in the same go, where with explicit preparation they're split. But as you add more executions for a single initial prepare the impact of that goes down.

Which is kinda why I want to bypass the parse; then its auto prep + parse once, rather than parse 20 times with auto prep?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK, I'll try to take some time this weekend to play around with bypassing the parse. In any case I need to run (and update) the benchmarks for the newest Npgsql 5.0.0 (just released preview1) - will look into the parsing thing as part of that.

IIRC yeah (though whether a parameter exists or not doesn't matter that much for SQL parsing, just a little bit). |

|

@benaadams @roji is this old PR still relevant? |

@roji said he was introducing a better way of doing it in a newer version of the driver; not sure of status of that |

|

Things have changed quite a lot since this was done... Here are some thoughts. Re SQL parsing, Npgsql 6.0 did introduce support for using (native) positional parameters and not parsing SQL (@p -> $1); for more details, see this write-up. This is automatically triggered when the command parameters are unnamed, but when there are no parameters, we do have to parse for backwards compat. IIRC the only benchmark that doesn't have parameters is fortunes, so we currently do parse there, which is unneeded overhead. We do have an app context switch which allows disabling SQL parsing/rewriting globally in the application, even where there are no parameters. Since that switch is global and since Dapper and EF don't work with positional parameters, turning it on would break them. But unlike our implementation here, our TechEmpower platforms implementation has only raw (no Dapper/EF), so I'm doing that there (in TechEmpower/FrameworkBenchmarks#8005). Though we'll have to figure out what to do if we unify the implementations (#1815). |

|

The 2nd thing here is pooling the ADO.NET objects (e.g. NpgsqlCommand). With the introduction of NpgsqlDataSource, we'll soon be switching to creating commands directly from that instead of instantiating connections (https://github.com/aspnet/Benchmarks/pull/1816/files#r1139401127): // instead of:

using var connection = new NpgsqlConnection(...);

using var command = new NpgsqlCommand("SQL", connection);

// we'll just do this:

using var command = dataSource.CreateCommand();(We can do this since the benchmarks don't involve any connection state (e.g. transactions), and this models multiplexing much more correctly, i.e. just execute a command against the database, without needing to care about which connection it goes throw or how. This will also likely bring some optimizations later.) Currently, NpgsqlDataSource.CreateCommand() doesn't pool. If it's really beneficial to do so, this is an optimization we can and should implement inside Npgsql itself; opened npgsql/npgsql#5001 to track this. /cc @vonzshik @NinoFloris |

|

So beyond the above two things, I think this can be closed... We should definitely experiment with command pooling in Npgsql and see what happens. |

Are there plans to enable setting this via a property on |

|

Not really... This whole thing is tricky and somewhat complex, and comes from the fact that someone in Npgsql's history decided to accept named parameter placeholders (@p) instead of positional ones ($1), and also to support batching by parsing the command's SQL for semicolons and splitting that to multiple batched statements at the wire protocol level. Neither of these are natively supported by PG, so Npgsql has to parse/rewrite in order to suppotr them (here's a writeup). Now, if the command has parameters, we check whether they're named on or not (is DbParameter.ParameterName set). If they're unnamed, we take that as a signal that SQL parsing isn't required, i.e. positional parameters are being used. Since we already have a user gesture here (unset parameter name), we don't need an additional flag on NpgsqlCommand. Note that if you're using unnamed positional parameters, Npgsql also doesn't support semicolons for batching: you must instead use the DbBatch abstraction we introduced in Npgsql 6.0 (partially for this). The only corner case is when there's no parameters at all. For this case, there's still the problem of semicolons inside the SQL (batching) - we must parse since there's no user gesture here. We could in theory introduce a bool property command just to skip parsing/rewriting in the no-parameters case, but that seems really excessive... I'd rather we made EF (and Dapper) compatible with positional parameters and DbBatch (yet another thing on my list...) For now we can probably have #if FORTUNES or similar to enable this AppContext switch only when running fortunes... (it's all been quite a long journey...) |

|

The idea was that the parsed state would remain in the command if the command text didn't change; so reusing the command would skip the reparsing. Alas that isn't what happens and it reparses each time even though its the same command object with an unchanged command |

|

@benaadams right. Parsing/rewriting was already disabled in all benchmarks with parameters, since we switched to positional placeholders a while ago; TechEmpower/FrameworkBenchmarks#8005 (comment) does that for Fortunes as well. So I don't think we need to worry about that part any more. There's another kind of "parsing" which happens every time: to look up the PostgreSQL prepared statement in an internal dictionary. We're planning to add a data-source level API for "globally-available" prepared statements, that would skip this step (npgsql/npgsql#4509). In the meantime, we could in theory pool commands and assume that a command rented from the pool already has the correct SQL. That assumption would hold only in a single-statement benchmark, so it seems a bit unrealistic/problematic. |

|

@benaadams Any reuse that we might introduce on the DbDataSource will likely release all query specific resources during return. If we really need fast pooling we could store relevant instances on the kestrel connection and pass them down. |

Rather than creating them per request