This repository is based on facebookresearch/moco, with modifications.

pip install numpy torch blobfile tqdm pyYaml pillow jaxtyping beartype pytorch-lightning omegaconf hydra-core wandb

This is a PyTorch implementation of the MoCo paper:

@Article{he2019moco,

author = {Kaiming He and Haoqi Fan and Yuxin Wu and Saining Xie and Ross Girshick},

title = {Momentum Contrast for Unsupervised Visual Representation Learning},

journal = {arXiv preprint arXiv:1911.05722},

year = {2019},

}

It also includes the implementation of the MoCo v2 paper:

@Article{chen2020mocov2,

author = {Xinlei Chen and Haoqi Fan and Ross Girshick and Kaiming He},

title = {Improved Baselines with Momentum Contrastive Learning},

journal = {arXiv preprint arXiv:2003.04297},

year = {2020},

}

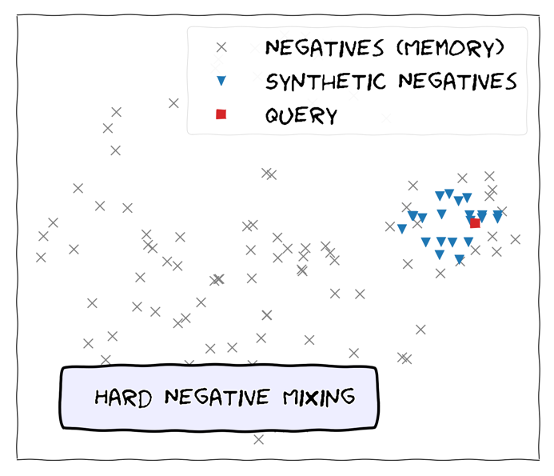

And an implementation of the Mochi paper:

@InProceedings{kalantidis2020hard,

author = {Kalantidis, Yannis and Sariyildiz, Mert Bulent and Pion, Noe and Weinzaepfel, Philippe and Larlus, Diane},

title = {Hard Negative Mixing for Contrastive Learning},

booktitle = {Neural Information Processing Systems (NeurIPS)},

year = {2020}

}

For more details on data, training, loading and results, please see the original repo.

I use Hydra configuration and not the original argparse.

New main file for pretraining is lightning_main_pretraining.py and new file for fine-tuning is lightning_main_finetuning.py.

This implementation with Pytorch Lightning should support ddp as well as single GPU implementation.

This project is under the CC-BY-NC 4.0 license. See LICENSE for details.

- moco.tensorflow: A TensorFlow re-implementation.

- Colab notebook: CIFAR demo on Colab GPU.