This repository shows you how to:

- Convert a pure

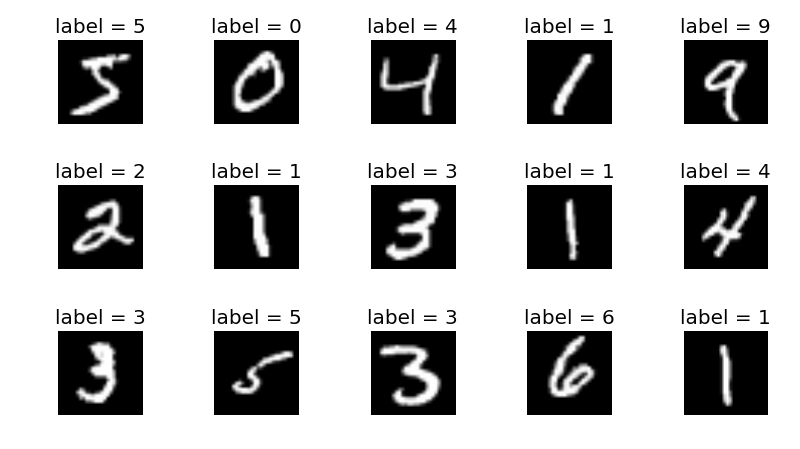

PyTorch Convolutional Neural Network Classifiertrained on MNIST to PyTorch Lightning. - Extend Pure PyTorch trivially with Lightning best practice features.

- Seamlessly scale your training in the cloud with Grid.ai - No code changes.

- Learn about Lighting Flash and its 15+ production ready tasks.
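The core idea behind the conversion is that the model class only describes *what* to compute (a training step and an optimizer), while the trainer owns the loop. The framework-free sketch below illustrates that split in plain Python. `ToyModule`, `ToyTrainer`, `SGD`, and the linear-regression task are hypothetical stand-ins; only the hook names `training_step` and `configure_optimizers` mirror Lightning's `LightningModule`, and unlike Lightning the toy computes gradients by hand because there is no autograd.

```python
# Toy illustration of the LightningModule / Trainer split (not Lightning itself).
# ToyModule, ToyTrainer and SGD are hypothetical; only the hook names mirror Lightning.


class SGD:
    """Minimal stochastic gradient descent over a dict of scalar parameters."""

    def __init__(self, params, lr):
        self.params = params  # shared with the module, so updates are visible there
        self.lr = lr

    def step(self, grads):
        for name, grad in grads.items():
            self.params[name] -= self.lr * grad


class ToyModule:
    """Research code: fits y = w * x + b with a mean squared error loss."""

    def __init__(self):
        self.params = {"w": 0.0, "b": 0.0}

    def training_step(self, batch):
        # Return the batch loss and its hand-derived gradients.
        n = len(batch)
        w, b = self.params["w"], self.params["b"]
        errors = [(w * x + b) - y for x, y in batch]
        loss = sum(e * e for e in errors) / n
        grads = {
            "w": sum(2 * e * x for e, (x, _) in zip(errors, batch)) / n,
            "b": sum(2 * e for e in errors) / n,
        }
        return loss, grads

    def configure_optimizers(self):
        return SGD(self.params, lr=0.05)


class ToyTrainer:
    """Engineering code: owns the epoch loop, so the module never writes one."""

    def __init__(self, max_epochs):
        self.max_epochs = max_epochs
        self.history = []

    def fit(self, module, batches):
        optimizer = module.configure_optimizers()
        for _ in range(self.max_epochs):
            for batch in batches:
                loss, grads = module.training_step(batch)
                optimizer.step(grads)
                self.history.append(loss)


# Data generated from y = 2x + 1, split into two batches.
data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]]
batches = [data[:3], data[3:]]

model = ToyModule()
trainer = ToyTrainer(max_epochs=500)
trainer.fit(model, batches)
print(round(model.params["w"], 2), round(model.params["b"], 2))
```

Swapping `ToyTrainer` for a different loop (more epochs, logging, devices) requires no change to `ToyModule`, which is the property the Lightning conversion aims for.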

William Falcon & Thomas Chaton present this repository in the PyTorch Community Voices | PyTorch Lightning video.

Add DeepSpeed, FSDP, multiple loggers, multiple profilers, TorchScript, loop customization, fault-tolerant training, etc.:

- PyTorch | requires a huge number of additional lines. You definitely do not want to do that 😫
- PyTorch Lightning | still ~106 lines. Let's keep it simple. 🚀
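In Lightning, features such as multiple loggers are enabled through Trainer arguments (for example, `Trainer(logger=[...])` accepts a list of loggers) rather than new training-loop code. The sketch below shows the underlying fan-out pattern in plain Python: a single `log_metrics` call is forwarded to every configured backend. `InMemoryLogger`, `PrintLogger`, and `FanOutLogger` are hypothetical names for illustration, not Lightning's logger classes.

```python
# Fan-out logging sketch: one log call reaches every configured backend.
# All class names here are hypothetical, not Lightning's logger classes.


class InMemoryLogger:
    """Stores metrics in a list, e.g. for later inspection in tests."""

    def __init__(self):
        self.records = []

    def log_metrics(self, metrics, step):
        self.records.append((step, dict(metrics)))


class PrintLogger:
    """Writes metrics to stdout as a stand-in for a console backend."""

    def log_metrics(self, metrics, step):
        formatted = ", ".join(f"{k}={v:.3f}" for k, v in sorted(metrics.items()))
        print(f"step {step}: {formatted}")


class FanOutLogger:
    """Forwards every call to each wrapped logger, in order."""

    def __init__(self, loggers):
        self.loggers = list(loggers)

    def log_metrics(self, metrics, step):
        for logger in self.loggers:
            logger.log_metrics(metrics, step)


memory = InMemoryLogger()
fan_out = FanOutLogger([memory, PrintLogger()])
for step, loss in enumerate([0.9, 0.5, 0.3]):
    fan_out.log_metrics({"train_loss": loss}, step=step)
```

The training code only ever talks to one object, so adding or removing a backend is a configuration change, not a loop change.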

Learn more with the Lightning Docs.

PyTorch Lightning 1.4 is out! Here is our CHANGELOG.

Don't forget to ⭐ PyTorch Lightning.

Training on Grid.ai

Grid.ai is an ML platform from the creators of PyTorch Lightning that enables you to train machine learning code without worrying about infrastructure.

Learn more with the Grid.ai Docs.

pip install lightning-grid --upgrade
grid run --instance_type 4_M60_8gb ddp_mnist_grid/lightning.py --trainer.max_epochs 2 --trainer.gpus 4 --trainer.accelerator ddp

With Grid DataStores (low-latency, highly scalable, auto-versioned datasets):

grid datastore create --name mnist --source data
grid run --instance_type 4_M60_8gb --datastore_name mnist --datastore_mount_dir data ddp_mnist_grid/lightning.py --trainer.max_epochs 2 --trainer.gpus 4 --trainer.accelerator ddp

Pure PyTorch:

grid datastore create --name mnist --source data
grid run --instance_type g4dn.xlarge --gpus 2 ddp_mnist_grid/boring_pytorch.py

Add `--use_spot` to use interruptible machines.
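With `--trainer.accelerator ddp`, each GPU runs its own process on a different shard of the data, and gradients are averaged across processes (an all-reduce) before each optimizer step, so every replica applies the same update. The pure-Python simulation below checks that averaging per-shard gradients reproduces the full-batch gradient, assuming equally sized shards and a mean-reduced loss. No real processes are started; `grad_mse`, `shard`, and `all_reduce_mean` are illustrative helpers, not Lightning or `torch.distributed` APIs.

```python
# Simulated data-parallel step: no real processes or GPUs involved.


def grad_mse(w, batch):
    """Gradient of mean((w * x - y)^2) with respect to the scalar w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(batch, world_size):
    """Split a batch into world_size equal, contiguous shards (one per 'rank')."""
    assert len(batch) % world_size == 0, "sketch assumes equal shard sizes"
    size = len(batch) // world_size
    return [batch[r * size:(r + 1) * size] for r in range(world_size)]


def all_reduce_mean(values):
    """Stand-in for an all-reduce that averages one value per rank."""
    return sum(values) / len(values)


batch = [(float(x), 3.0 * x) for x in range(8)]
w = 0.5
world_size = 4

local_grads = [grad_mse(w, s) for s in shard(batch, world_size)]  # one per rank
ddp_grad = all_reduce_mean(local_grads)
full_grad = grad_mse(w, batch)
print(local_grads, ddp_grad, full_grad)
```

The per-rank gradients differ, but their average equals the single-process gradient, which is why DDP training matches larger-batch training on one device when shards are equal.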

Grid.ai makes scaling multi-node training easy 🚀 Train on 2+ nodes with 4 GPUs each using DDP Sharded 🔥

grid run --instance_type 4_M60_8gb --gpus 8 --datastore_name mnist --datastore_mount_dir data ddp_mnist_grid/lightning.py --trainer.max_epochs 2 --trainer.num_nodes 2 --trainer.gpus 4 --trainer.accelerator ddp_sharded

Train Andrej Karpathy's minGPT, converted to PyTorch Lightning by @williamFalcon and benchmarked with DeepSpeed by @SeanNaren:

git clone https://github.com/SeanNaren/minGPT.git
cd minGPT
git checkout benchmark
grid run --instance_type g4dn.12xlarge --gpus 8 benchmark.py --n_layer 6 --n_head 16 --n_embd 2048 --gpus 4 --num_nodes 2 --precision 16 --batch_size 32 --plugins deepspeed_stage_3

Learn how to scale your scripts with PyTorch Lightning + DeepSpeed.
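DDP Sharded and DeepSpeed stage 3 both cut per-GPU memory by partitioning training state across ranks instead of replicating it: DDP Sharded shards optimizer state and gradients, and ZeRO stage 3 additionally shards the model parameters. The sketch below only illustrates the bookkeeping, using a greedy, size-balanced assignment of hypothetical parameter tensors to ranks so each rank owns roughly `1/world_size` of the state. The parameter names, sizes, and greedy rule are assumptions, not the exact FairScale or DeepSpeed partitioning.

```python
import heapq

# Hypothetical parameter names and element counts for a small model.
PARAM_SIZES = {
    "embed.weight": 4096,
    "block0.attn.weight": 3072,
    "block0.mlp.weight": 2048,
    "block1.attn.weight": 3072,
    "block1.mlp.weight": 2048,
    "head.weight": 1024,
}


def partition(param_sizes, world_size):
    """Assign each parameter to the currently lightest rank, largest first."""
    heap = [(0, rank) for rank in range(world_size)]  # (elements owned, rank)
    owners = {rank: [] for rank in range(world_size)}
    for name, size in sorted(param_sizes.items(), key=lambda kv: -kv[1]):
        load, rank = heapq.heappop(heap)
        owners[rank].append(name)
        heapq.heappush(heap, (load + size, rank))
    return owners


def elements_per_rank(owners, param_sizes):
    """Total number of elements whose state each rank is responsible for."""
    return {rank: sum(param_sizes[n] for n in names) for rank, names in owners.items()}


owners = partition(PARAM_SIZES, world_size=2)
loads = elements_per_rank(owners, PARAM_SIZES)
print(loads, "replicated total:", sum(PARAM_SIZES.values()))
```

With plain DDP every rank would hold state for all 15360 elements; with this split each rank holds about half.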

Lightning Flash is a collection of tasks for fast prototyping, baselining, finetuning, and solving problems with deep learning, built on top of PyTorch Lightning.

Train a PyTorchVideo classifier with Lightning Flash. Check out the Grid.ai reproducible button:

import os

import flash
from flash.core.data.utils import download_data
from flash.video import VideoClassificationData, VideoClassifier

# 1. Create the DataModule
# Find more datasets at https://pytorchvideo.readthedocs.io/en/latest/data.html
download_data("https://pl-flash-data.s3.amazonaws.com/kinetics.zip", "./data")

datamodule = VideoClassificationData.from_folders(
    train_folder=os.path.join(os.getcwd(), "data/kinetics/train"),
    val_folder=os.path.join(os.getcwd(), "data/kinetics/val"),
    clip_sampler="uniform",
    clip_duration=1,
    decode_audio=False,
)

# 2. Build the task
model = VideoClassifier(backbone="x3d_xs", num_classes=datamodule.num_classes, pretrained=False)

# 3. Create the trainer and finetune the model
trainer = flash.Trainer(max_epochs=3)
trainer.finetune(model, datamodule=datamodule, strategy="freeze")

# 4. Make a prediction
predictions = model.predict(os.path.join(os.getcwd(), "data/kinetics/predict"))
print(predictions)

# 5. Save the model!
trainer.save_checkpoint("video_classification.pt")

Credit to the PyTorch team for providing the bare MNIST example.

Credit to Andrej Karpathy for providing an implementation of minGPT.

Kill DDP processes:

sudo kill -9 $(ps -aef | grep -i 'ddp' | grep -v 'grep' | awk '{ print $2 }')
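The one-liner lists every process (`ps -aef`), keeps lines mentioning `ddp` case-insensitively, drops the `grep` process itself, and prints the second column, which is the PID. A Python sketch of the same filtering, written as a dry run so the PIDs can be reviewed before anything is killed, might look like this. The function names are hypothetical, and the parser assumes the standard full-format `ps` column layout (UID, PID, PPID, ...).

```python
import os
import signal
import subprocess


def find_pids(ps_output, pattern="ddp"):
    """Return PIDs (column 2) of lines containing pattern, case-insensitively."""
    pids = []
    for line in ps_output.splitlines()[1:]:  # skip the UID PID PPID ... header
        if pattern.lower() in line.lower() and "grep" not in line:
            pids.append(int(line.split()[1]))
    return pids


def kill_pids(pids, dry_run=True):
    """Print the PIDs; send SIGKILL (like kill -9) only when dry_run is False."""
    for pid in pids:
        print("would kill" if dry_run else "killing", pid)
        if not dry_run:
            os.kill(pid, signal.SIGKILL)


def current_ddp_pids():
    """Run `ps -aef` and filter its output (POSIX systems only)."""
    result = subprocess.run(["ps", "-aef"], capture_output=True, text=True, check=True)
    return find_pids(result.stdout)


# Made-up ps output for a dry run; real usage: kill_pids(current_ddp_pids(), dry_run=False)
sample = """UID   PID  PPID  C STIME TTY   TIME     CMD
alice 4242 1     0 10:00 ?     00:01:00 python ddp_mnist_grid/lightning.py
alice 4243 4242  0 10:00 ?     00:01:00 python ddp_mnist_grid/lightning.py
alice 5000 4100  0 10:05 pts/0 00:00:00 grep -i ddp
alice 5001 4100  0 10:05 pts/0 00:00:00 vim notes.txt
"""
kill_pids(find_pids(sample))
```

Keeping the dry run as the default makes it harder to kill an unrelated process whose command line happens to contain `ddp`.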