No progress during prune (0.12, 0.13) #3761

Comments

|

Might this be (or related to) #3710? |

|

It looks so. Is there an easy way to get a build with that fix? |

|

Sure! https://beta.restic.net/ |

|

Thank you. I downloaded latest_restic_linux_amd64 from the beta site and tried it. The behaviour is exactly the same, so the fix for #3710 does not work here. Additional info: On all 3 repos it stops around package 1000. |

|

I have tried to prune the repo with restic 0.12.1. The behaviour is still exactly the same, so it seems the cause is something completely different. |

|

It would be interesting to have a debug log (see https://github.com/restic/restic/blob/master/CONTRIBUTING.md#reporting-bugs) of the requests restic issues to the S3 backend. To be precise, I'm interested in the requests probably sent to the S3 backend, when listing files gets stuck. If you or someone else who has the same problem creates a debug.log, make sure to remove the |

|

If someone can provide a debug build of restic, I can provide a debug log. |

|

Note that I'm using OTC (Open Telekom Cloud), which mimics the S3 API. That may be relevant. |

|

I had to recover most of the stuck backups (by running |

Have a look here. |

|

Looks like it is in an endless loop with the following request/response (redacted, let me know if anything important is missing): |

|

@MichaelEischer does this help in any way? My prunes worked 2-3 times after I ran it once with the |

|

Sorry for the late reply. I'm not at all sure whether the bug is in the Open Telekom Cloud implementation or in the minio-go library. Judging from my understanding of the Amazon S3 API description, the minio library is behaving correctly, which would put the blame on the cloud implementation. What seems to happen is that ListObjectsV2 never sets The difference between the list-objects versions that is probably relevant here is how request pagination works (i.e. when the file list is too large for a single request, it is returned incrementally). My guess right now is that the Telekom Cloud is doing something odd in that regard. Unfortunately the debug log isn't that helpful. What might be interesting is whether the

That's the only workaround for now. It shouldn't have any adverse effects. |
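To make the pagination difference mentioned above a bit more concrete, here is a toy in-memory model, not real S3 or minio-go code. It assumes the usual S3 semantics: version 1 resumes after a marker (the last key the client saw), while version 2 resumes from an opaque continuation token handed out by the server. All names (`list_v1`, `list_v2`, `KEYS`, `PAGE_SIZE`) are made up for illustration.

```python
from typing import Optional

# Hypothetical repository contents and page size, for illustration only.
KEYS = sorted(f"data/{i:04d}" for i in range(10))
PAGE_SIZE = 4


def list_v1(marker: Optional[str] = None) -> dict:
    """V1 style: the client passes the last key it saw as the marker."""
    remaining = [k for k in KEYS if marker is None or k > marker]
    return {"keys": remaining[:PAGE_SIZE],
            "is_truncated": len(remaining) > PAGE_SIZE}


def list_v2(token: Optional[str] = None) -> dict:
    """V2 style: the server returns an opaque token for the next page."""
    start = int(token) if token else 0
    page = KEYS[start:start + PAGE_SIZE]
    end = start + len(page)
    truncated = end < len(KEYS)
    return {"keys": page,
            "is_truncated": truncated,
            "next_token": str(end) if truncated else None}


def list_all_v1() -> list:
    keys, marker = [], None
    while True:
        resp = list_v1(marker)
        keys.extend(resp["keys"])
        if not resp["is_truncated"]:
            return keys
        marker = resp["keys"][-1]  # resume after the last key received


def list_all_v2() -> list:
    keys, token = [], None
    while True:
        resp = list_v2(token)
        keys.extend(resp["keys"])
        if not resp["is_truncated"]:
            return keys
        token = resp["next_token"]  # resume from the server's token
```

In this model both loops terminate only because the server honours the marker or token and eventually reports that the listing is no longer truncated. A client following the version 2 protocol has no other signal that the listing is complete.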

|

@MichaelEischer The boto3 docs mention that v2 getobjects is something the requester has to pay for. I'm not sure whether that's also true for v1; if not, v1 should probably be the default. It turned out that the loop caused a lot of traffic (20 TB in the last month, which is ~2k€). I just got the bill and saw the mess... So I spent the afternoon reproducing the issue, which is very clearly an issue with the OTC endpoint. It returns the same set of objects for each request and ignores the continuation token. |

As both versions of getobjects work essentially the same way, I'd expect that the same pricing applies to both versions.

Oh dear!

So the S3-compatible endpoint is actually not S3-compatible? This has to be fixed by OTC. Returning a continuation token suggests that ListObjectsV2 is supported, but then ignoring the token is a problem. Besides that, I don't think there's much we can do on the restic side to avoid this problem. |
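For readers who want to see why an ignored continuation token leads to an endless loop, here is a small simulation. It is only a sketch: `broken_list_v2` is a hypothetical stand-in for the faulty behaviour described in this thread, and `list_all_guarded` is an illustrative client-side check, not something restic or minio-go is known to implement.

```python
from typing import Callable, Optional

# Hypothetical repository contents and page size, for illustration only.
KEYS = [f"data/{i:04d}" for i in range(10)]
PAGE_SIZE = 4


def broken_list_v2(token: Optional[str] = None) -> dict:
    """Faulty endpoint: ignores the token and always returns the first page."""
    return {"keys": KEYS[:PAGE_SIZE],
            "is_truncated": True,
            "next_token": "page-2"}


class PaginationStalled(RuntimeError):
    """Raised when a listing stops making progress."""


def list_all_guarded(list_page: Callable[[Optional[str]], dict]) -> list:
    seen_keys = set()
    seen_tokens = set()
    token = None
    while True:
        resp = list_page(token)
        new_keys = [k for k in resp["keys"] if k not in seen_keys]
        # A non-empty page that contains nothing new means no progress.
        if resp["keys"] and not new_keys:
            raise PaginationStalled("page contained no new keys")
        seen_keys.update(new_keys)
        if not resp["is_truncated"]:
            return sorted(seen_keys)
        token = resp["next_token"]
        # A token that comes back a second time would loop forever.
        if token in seen_tokens:
            raise PaginationStalled("server repeated a continuation token")
        seen_tokens.add(token)
```

Without the two checks, the loop would request the same page forever, which matches the traffic pattern reported above. With them, the second request against `broken_list_v2` raises `PaginationStalled` instead.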

|

@MichaelEischer Absolutely, restic is not to blame in any way for that ^^ I have an open ticket with OTC and am waiting for their final response. |

|

Is there anything left that we can do on the restic side here? If not, I'd like to close this issue. |

|

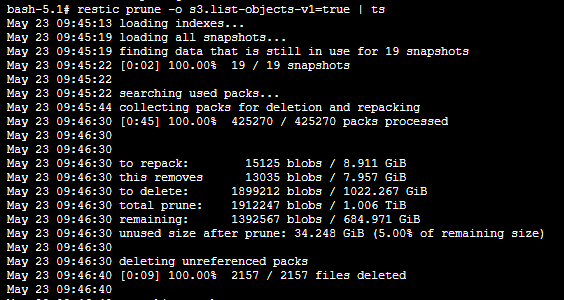

Judging from the |

Output of restic version

restic 0.13.1 compiled with go1.18 on linux/amd64

How did you run restic exactly?

What backend/server/service did you use to store the repository?

Open Telekom Cloud (via S3 interface)

Expected behavior

Should prune the repository

Actual behavior

Runs up to a certain point (package 977 on this repo) and then stops making progress.

Steps to reproduce the behavior

I have no way to reproduce it on an arbitrary repository. However, I have 3 repos with this issue, where the prune always stops at the same package.

Additional info

Do you have any idea what may have caused this?

Do you have an idea how to solve the issue?

Did restic help you today? Did it make you happy in any way?

Restic is great, I use it for all my backups!
