
Wheel support for aarch64 #5292

Comments

|

What platform are you interested in aarch64 for?

…On Wed, Jul 1, 2020 at 1:45 AM odidev ***@***.***> wrote:

*Summary*

Installing cryptography on aarch64 via pip with "pip3 install cryptography" tries to build the wheel from source.

*Problem description*

cryptography doesn't have a wheel for aarch64 on PyPI. So when installing cryptography via pip on aarch64, pip builds the wheel locally, which makes installation take longer. Making a wheel available for aarch64 will benefit aarch64 users by minimizing cryptography installation time.

*Expected Output*

Pip should be able to download a cryptography wheel from PyPI rather than building it from source.

@cryptography-team, please let me know if I can help with building the wheel or uploading it to PyPI. I am keen to make a cryptography wheel available for aarch64. It would be a great opportunity for me to work with you.


--

All that is necessary for evil to succeed is for good people to do nothing.

|

|

@alex, I am interested in a Linux AArch64 wheel. manylinux2014 has added Linux AArch64 support, and I have successfully built a wheel on that platform. Thanks |

|

The obstacle here is entirely the poor performance of aarch64 in our CI. We aren’t willing to ship an untested wheel but we aren’t willing to have our CI take 30+ minutes per cycle either. If Travis moves to better performing hardware (like Graviton2) or other options become available we'll happily revisit this. Are you aware of free CI options for aarch64 that run our full test suite more quickly? |

|

An option would be to self-host GitHub Actions workers for arm64 testing. As to where to host them:

|

|

If AP has AArch64 then GA won't be far behind (they're hosted on the same substrate). It would be interesting to see if these are more on the level of AWS's Graviton2 and less like the (current) TravisCI ARM processors, which are too slow to be useful in our CI. |

|

I've the same issue when building a dockerized Python app with |

|

@reaperhulk it looks like AP added just the arm64 agent and not the workers :| |

|

@xrmx The security requirements of this project are such that we're unwilling to ship wheels for anything we aren't testing. However, when we enabled aarch64 on Travis we added 45-60 minutes of wall clock time to every build. Additionally, since AArch64 capacity is lower we frequently had builds stalling on waiting for ARM builders. It is immensely discouraging and demotivating to have a 4 review cycle PR (a low number for us!) take an entire day's worth of time. Our CI is already far too slow so we made the decision to disable those builds. Since we can't run our tests on AArch64 right now we are really stuck on shipping AArch64 wheels. We can't guarantee they work, so for now third parties will need to continue to fill this gap where they can. As soon as performant builders exist (Travis is testing Graviton2 instances apparently) then we'll revisit this. (Incidentally this will also affect Apple Silicon machines when they ship. New arch, but without access to that in our CI we won't be able to officially support it for an unknown amount of time). |

|

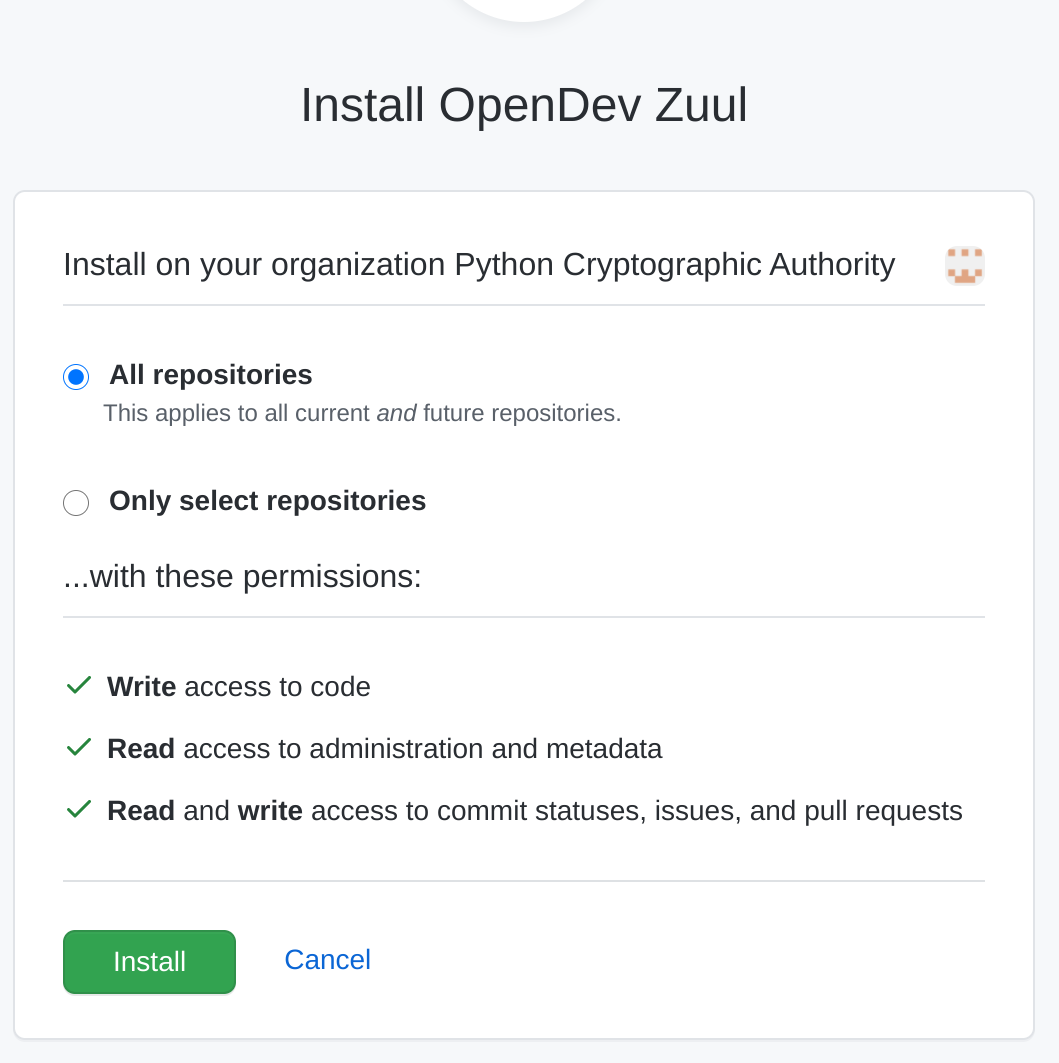

OpenDev (https://opendev.org/) [1] has ARM64 resources provided by Linaro which we can use to do aarch64 testing. This is not emulation (as maybe the other one was) and is based on Qualcomm Falkor. As a first cut, I ran tox on one of these hosts and it's coming out at about 13 minutes run-time. These are pretty big hosts (8 CPUs, 8 GB RAM), which the current testing doesn't seem to make use of. I installed xdist and ran pytest with "-n8" and it didn't bring things down as much as I thought, more like 10 minutes in that case, but I bet I did things a bit wrong and better ways could be found. So overall this is just a bit anecdotal, with me running things by hand, but I think it shows we're in the ballpark. CI would be run by Zuul; if anyone has contributed to OpenStack at all you'll be familiar with it ([3] to look around and click on any of the tests for examples). For me to set this up as automatic CI, somebody needs to add the OpenDev Zuul app (https://github.com/apps/opendev-zuul) to this repo so it can grab the changes and test them (read and comment perms only). I can set up a job to run cryptography CI; Zuul will then just drop a comment on the pull request with links to the CI results. There's really no upper limit to what could be done, including automated PyPI releases etc. We have centos7/8, ubuntu xenial/bionic/focal and debian buster environments for ARM64. This would be the first example so I'd expect a few bumps, but it would certainly be a nice proof-of-concept. [1] for those not following along, this is OpenStack infrastructure opened up to non-OpenStack specific projects |
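As a rough back-of-envelope reading of the timings in the comment above (about 13 minutes for the plain run, about 10 minutes with `-n8`), one can ask how much of the run actually benefited from the extra workers. The sketch below applies Amdahl's law. It assumes both figures measure the same workload, which the comment itself describes as anecdotal, and the function names are illustrative only, not part of any project tooling.

```python
def speedup(serial_minutes, parallel_minutes):
    """Observed speedup: how many times faster the parallel run was."""
    return serial_minutes / parallel_minutes


def parallel_fraction(serial_minutes, parallel_minutes, workers):
    """Estimate the parallelizable fraction p using Amdahl's law.

    Amdahl's law: speedup = 1 / ((1 - p) + p / workers).
    Solving for p gives p = (1 - 1/speedup) / (1 - 1/workers).
    """
    if workers < 2:
        raise ValueError("need at least two workers to estimate p")
    s = speedup(serial_minutes, parallel_minutes)
    return (1 - 1 / s) / (1 - 1 / workers)


# Timings quoted in the thread (assumed comparable): ~13 min plain, ~10 min with -n8.
p = parallel_fraction(13, 10, 8)
print(f"observed speedup: {speedup(13, 10):.2f}x")
print(f"estimated parallel fraction: {p:.0%}")
```

Under these assumptions only about a quarter of the wall-clock time scaled with the worker count, which would be consistent with much of the run being spent outside the parallelized tests (for example environment setup), though the thread does not confirm where the time went.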

|

@alex hrmm, I guess that's for the case like in OpenStack where Zuul does the merging for you. Obviously we would not be setting up any jobs that would merge. Let me loop in @emonty or perhaps @pabelanger who have a bit more experience setting this up in other github contexts, including Ansible, for advice. |

|

@ianw on zuul side, you can setup a 2nd github app without 'write access to code' like https://github.com/apps/ansible-zuul-third-party-ci. Then, projects that don't want to give out write access can still work properly. |

|

@pabelanger Thanks; I think we set this up thinking that Kata containers would be using it to merge here in github. We seem to have some options of either turning that off or starting another R/O app. We don't need access to get a basic job for proof of concept going anyway (only to make it run automatically). I'll see what I can get going and when we have something will start a new issue to not pollute this with chatter about setting up OpenDev CI specifically. |

|

TravisCI arm64 jobs should be getting much faster soon. |

|

@alex, I used Drone to build and test AArch64 artifacts. All the jobs finished within 30 minutes. I created jobs for Python 3.6, 3.7 and 3.8 with various environments. However, Docker-related jobs are not included because the Docker images used are specific to the x86_64 architecture. Please have a look at the .drone.yml below and the build run logs: https://github.com/odidev/cryptography/blob/master/.drone.yml Please let me know if you are interested in accepting a PR for this. Also, please let me know the next step for deploying an AArch64 wheel on PyPI. |

|

We're currently working with OpenDev to try out aarch64 on their platform, see #5339 |

|

@alex, TravisCI has a preview using AWS Graviton2 hardware that should be substantially better than the current arm64 builders. You can use it by adding this to your .travis.yml files:

    dist: focal
    arch: arm64-graviton2 # redirects to aws graviton2
    virt: lxd # redirects to LXD instance
    group: edge # required for now (for Ubuntu 20.04 focal)

I plan to try a PoC soon to see how fast it is, but that should greatly help with issues of capacity, speed and maintainability of 2 CI systems when it goes GA. |

|

oooo, is that publicly available? we tried in #5340 but no love |

|

Hmmm, noted. It should be open for public GitHub accounts with no requirement to sign up. One of my colleagues has been testing a build of scipy-wheels here with Graviton2: https://github.com/janaknat/scikit-learn/blob/master/.travis.yml I'll give it a shot myself soon, see if I have any issues, and chime in on this issue. |

|

Maybe they turned it on in the last 10 days since we tried that. Time to try again! |

|

@geoffreyblake just tried again, it ran on an amd64 builder: #5366 |

|

Let me give it a try on a forked repo to see if I run into similar issues. |

|

I have tried that too(tested with |

|

@alex , @odidev, no I don't think that is the case. My colleague gave it a quick test: https://travis-ci.com/github/janaknat/wheel-test/jobs/367922635#L1 is his log. It is running on an aarch64 machine. See ln 107 of the log. |

|

🤷 it definitely did not work when we tried it 3 days ago. I have no visibility into the travis infra, so short of retrying again, I don't know what to do to advance this. |

|

I have just forked the wheel-test repository shared and triggered the build. It is showing x86_64 in Check line 189 for the uname output. It is showing linux/amd64 as OS/Arch in Build information as well. I am not sure what we are missing here. |
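The confusion above comes down to reading the machine field of `uname` on the builder. A minimal sketch of a guard that a CI job could run before its tests is shown below. It uses only the standard library; the helper names `is_arm64` and `require_arm64` are hypothetical, and the set of accepted strings is an assumption (Linux typically reports `aarch64`, while some other platforms report `arm64`).

```python
import platform

# Machine strings treated as 64-bit ARM (assumption: "aarch64" on Linux,
# "arm64" on some other platforms).
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine=None):
    """Return True if the given (or current) machine string denotes 64-bit ARM."""
    if machine is None:
        machine = platform.machine()
    return machine.lower() in ARM64_NAMES


def require_arm64(machine=None):
    """Raise RuntimeError unless the builder reports a 64-bit ARM machine."""
    if machine is None:
        machine = platform.machine()
    if not is_arm64(machine):
        raise RuntimeError(f"expected an aarch64 builder, got {machine!r}")
    return machine
```

Calling `require_arm64()` as the first step of a job would make a build that silently landed on an x86_64 machine fail immediately instead of passing on the wrong architecture.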

|

Something is missing, I plan to hack on this some today anyways. Will update any progress here. |

|

I was just comparing the difference and tested my package in https://travis-ci.com and it is giving uname output as aarch64. I think we need to find out the reason why it is |

|

Travis has been trying to migrate people to com from org for a while, and it's likely their deployed code is not the same across those domains. Unfortunately cryptography can't easily move because we have a special queue tied to the PSF and PyPA, who are also on org. |

|

Yes @odidev, it seems that is the issue, my forked version of this repository is on Travis-CI.com, not .org and the arm64-graviton2 tag works. There has to be a solution to help move your project over since my understanding is Travis-CI.org is going away. |

|

@reaperhulk , I don't follow what you mean. Is there a specific blocker? |

|

To move we need to talk to Travis employees, likely coordinate multiple orgs migrating at once, etc. It's almost certainly not an automated process for us given the special settings we possess. This is all to say that we're not in any hurry to try to migrate because it's likely to be a big pain. |

|

Seems to be a complex problem from the description. Though if that gets solved, looking at the source repo, it appears Travis is only the testing infrastructure, and building is done via github workflows, is that correct? That is another potential hurdle to releasing an Arm wheel. |

|

@reaperhulk, I see the OpenCI Zuul scripts are in. If those are working acceptably, what needs to be done to get Arm wheels building? If I'm pointed to the right place, I can certainly help and provide some PRs on that matter. Getting to Travis for all CI would be the best option, but I understand that your account settings with pypa and python projects make moving to .com a more involved effort. |

|

From OpenDev POV, I'm currently looking at how we can use the manylinux images from [1] to output more generic wheels. Currently, we build everything from the OpenStack requirements project [2] and publish that to our AFS volumes (which you can see at [3]). CI jobs that run in OpenDev get their pip configured to use mirrors in the cloud+region they run in (i.e. local network). This is great for OpenStack projects, who all synchronize on the requirements project to make sure their dependencies do not conflict. However, Zuul does not use the versions pinned in the OpenStack requirements project. This is why I'm looking at reworking things to build more than just what the OpenStack requirements are. This is a WIP and I want to try reworking things to get manylinux builds, so things are in general a bit more generic. Thus what would fall out of this would be us building generic manylinux wheels.

So in terms of "could OpenDev CI dump out an aarch64 manylinux wheel suitable for uploading to PyPI" I think the answer is yes. I can see the extant wheels are built using GitHub Actions and publish artefacts like at https://github.com/pyca/cryptography/actions/runs/176310608. I'm not sure how these then get published to PyPI ... I couldn't see that part in the scripts? There's a lot of trust in the images being used for the GitHub Actions, and the manylinux docker images coming from dockerhub at this point anyway, so the OpenDev produced wheel wouldn't be any different IMO. Anyway, I can update when we have the generic building working if interested.

[1] https://github.com/pypa/manylinux/#manylinux2014

update: I've realised some things that would already be obvious to those skilled in the art. The manylinux builds depend on a custom builder image created out of dockerfiles kept at https://github.com/pyca/infra. To get something useful we're going to need a

update 2020-08-04: More progress; I've got a I should be able to use this to build wheels.

I've started with a framework for that in #5386. However, this isn't quite working because it fails to install docker; our roles to set up docker on the host currently have a small x86_64 assumption baked in (fix proposed with https://review.opendev.org/746272) |

|

Success! #5386 has a Zuul-based job that is building manylinux2014_aarch64 wheels; for example https://zuul.opendev.org/t/pyca/build/3cbec94fa0da41ed936a3112f472174d (click on the "wheelhouse" artifact link or direct). The core parts of this are the docker image used to build the wheels, which is generated from the files https://opendev.org/pyca/infra/src/branch/master/docker/manylinux2014_aarch64 and currently uploaded to my personal account, and the build script which is kept in The rest I need to beat into shape; for example this revealed that on the Linaro ARM64 cloud running in a container modifies the MTU such that connections to fastly CDN via SSL fail (not any connection, not http connections, just https to fastly because of whatever they do!). I can also investigate building the manylinux2014_aarch container on-the-fly which might be practical on native hardware (need to work on some of our docker building bits to get it native arm64). But I'm happy with this as POC and any further thoughts welcome :) |

@reaperhulk have you reached out to the Travis CI support team in the meantime? I've asked our support team to contact the organizations and ask whether you are considering migrating from .org to .com soon, because once that is done the support team would need to re-create your special account settings on travis-ci.com. The migration of repositories itself is described in the Travis CI documentation.

|

Hi @michal-at-travisci! I reached out about what makes sense in the #embassy Slack a few days ago but didn't get a response. Do we need everyone to migrate to regain our builder queue or would it be possible for us to migrate first, get the builder queue enabled for us, and then when others migrate they can be added? I'm happy to do the migration ASAP if we're not stuck on default concurrency for long! |

|

Hello @reaperhulk, I will be assisting you on this case. Sorry about the silence on the #embassy channel. Before setting up your subscription configuration on travis-ci.com, I will need approval from all three organizations below:

- python (approval taken by your team already)
- pyca
- pypa

I will be keeping you posted about this case. Thanks. |

|

Hi Mustafa. I'm a member of all three of these organizations, who do you need to approve it? Will everything continue working as currently, or is some work required for each repo? Will our queue size remain the same (50 concurrent builds IIRC?)

…On Tue, Aug 18, 2020 at 8:52 AM Mustafa Ergül ***@***.***> wrote:

Hello @reaperhulk <https://github.com/reaperhulk>, I will be assisting

you on this case. Sorry about the silence on the #embassy channel.

Before setting up your subscription configuration on travis-ci.com, I

will need approval from all three organizations below

- python (approval taken by your team already)

- pyca

- pypa

I will be keeping you posted about this case. Thanks.


|

|

Hey @alex , thank you for taking this from here. Since all the build information will be happening on travis-ci.com after migration process, we will need approval from members/admin of Github organizations to move the current subscription that is attached to Everything will be working as currently but your builds will be happening on travis-ci.com instead travis-ci.org. The work you need from the organization admins will be here to follow; https://docs.travis-ci.com/user/migrate/open-source-repository-migration Your subscription(queue size) is currently 25 private builds + 5 public builds which makes it 30 in total. Since your subscription is delegated(as I mention above) this concurrency will be shared all three organizations( We won't be disabling your subscription on travis-ci.org until you give the all green light for your new setup on travis-ci.com. I hope this clarifies |

|

Hi @mustafa-travisci! I'm the director of infrastructure for The Python Software Foundation which manages the I also happen to be an admin for the The migration process for .org -> .com for open source has been overwhelmingly confusing. Is the linked documentation an update from previous? If things are in a working state I'm happy to coordinate migrations for the |

|

And @reaperhulk and I can obviously represent PyCA. |

|

Hey @ewdurbin, @alex, @reaperhulk glad to hear that we are aligned in this case. @ewdurbin : I will be happy to assist if you have any issues during migration but please keep in mind before setting up your subscription, I will be sure about the same setup you have and also no issues occurring. I can also see that those three organizations are already given 3rd party permission for Travis CI(travis-ci.com). So let me set up your subscription on travis-ci.com and you will be able to test some of your repositories if those work well as in travis-ci.org. I will be keeping you posted. Thanks. |

|

I have done the migration for all PyCA repos. |

|

python and pypa repos migrated. the migration process appears to have gone super smoothly. thanks again @mustafa-travisci will let you know if anything crops up. |

|

Hello @alex , @ewdurbin and @reaperhulk I am happy to hear that you could successfully migrate your repositories and contributed with me on this case. I would like to mention that your subscription delegation(grouped accounts with Let's watch your concurrency usage in the upcoming days. When we will be good to, I will be disabling your subscription delegation on travis-ci.org. Again, thank you for your fast contribution to this case ❤️ |

|

#5386 has Zuul building cryptography aarch64 wheels. If anyone would like to test the results see the "wheelhouse" link at a build result like https://zuul.opendev.org/t/pyca/build/220d235fa1244a5eb595c740f882248f (e.g. cryptography-3.1.dev1-cp35-abi3-manylinux2014_aarch64.whl) |
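For anyone testing the artifact, the wheel filename itself says which interpreter, ABI and platform it targets. The sketch below splits a filename following the standard wheel naming convention (`{distribution}-{version}(-{build tag})?-{python tag}-{abi tag}-{platform tag}.whl`). The helper names are hypothetical and this is a simplified illustration, not how pip itself decides compatibility.

```python
def parse_wheel_filename(filename):
    """Split a wheel filename into its name, version and tag components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel filename: {filename!r}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        name, version, python_tag, abi_tag, platform_tag = parts
        build_tag = None
    elif len(parts) == 6:
        name, version, build_tag, python_tag, abi_tag, platform_tag = parts
    else:
        raise ValueError(f"unexpected number of fields in {filename!r}")
    return {
        "name": name,
        "version": version,
        "build": build_tag,
        "python": python_tag,
        "abi": abi_tag,
        "platform": platform_tag,
    }


def targets_aarch64(filename):
    """Return True if any platform tag in the filename ends in '_aarch64'."""
    platform_tag = parse_wheel_filename(filename)["platform"]
    # A platform field may hold several tags joined by dots.
    return any(tag.endswith("_aarch64") for tag in platform_tag.split("."))


info = parse_wheel_filename(
    "cryptography-3.1.dev1-cp35-abi3-manylinux2014_aarch64.whl"
)
print(info["python"], info["abi"], info["platform"])
```

For the example artifact named above, the fields are `cp35`, `abi3` and `manylinux2014_aarch64`: a single wheel built against the stable ABI for Linux on 64-bit ARM.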

|

Thanks to people in this issue we've shipped a manylinux2014_aarch64 wheel as part of the 3.1 release! |
