-

Notifications

You must be signed in to change notification settings - Fork 1.1k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Step generation is done sequentially, making providers that block in Diff extremely slow #12351

Comments

|

Thanks for opening an issue and for the analysis, @aschleck! I'm going to discuss with the team to see what we could do to improve this. |

|

Thank you @justinvp for taking a look at this! Have you folks had any opportunity to discuss what a solution might look like? I'm considering taking a stab at fixing it (since this is shockingly impactful) but I wouldn't want to spend time trying approaches that the team knows will fail. |

|

Hey @justinvp, any update from the team? I believe this is contributing to extremely slow |

|

Hello! I spoke with Justin and the team late last week. @aschleck, we would accept a community PR for this feature! Speaking for myself, I'd love to see a community contribution, especially one this impactful. 😄 However, I just want to issue a word of caution. This change is at the very heart of the engine. When we make changes in this area, we tread very gingerly because any change here will impact every user of Pulumi. We expect the bar to be very high on a PR for this change, and it might take some significant back-and-forth to get it merged. I also received feedback that the team expects the change to be difficult, difficult enough that it's outside of the realm of what we would normally expect to see from community contributions. I don't say any of this to dissuade you, of course! 😸 Like I said, we're happy to receive contributions, and I'd love to see a performance improvement like this come in! We just wanted to set expectations ahead of time. :-) I'd hate for you to have a bad experience as a contributor if you submitted a PR and felt like we were stringing you along! |

|

FWIW, I think your approach using a

Yeah, that makes sense. My gut says you can return the errors over a channel, and close the channel after the wait group completes. I think it might be a little more complicated than that, because you'll have to aggregate the errors into a slice. So as not to block on a full channel from a Diffing goroutine, you'll also need to read from the channel concurrently.

We've seen that error before! We typically enable race detection with the |

|

I'm sorry for never responding Robbie, I really appreciated your insight and just haven't had time to think about this until today. And major thank you to dixler for getting a PR out in the meantime! As I understand it, with pulumi/pulumi-kubernetes@6e329f3 my particular problem is solved (a preview now takes 15 seconds instead of 3 minutes.) I defer to you folks on how you want to move forward. Please let me know if it'd be helpful for me to do further profiling and see if there's anything else I can find. |

|

Hi @aschleck! Good to hear from you! I'm glad my last message two messages didn't deter you from contributing; I was worried I was coming off too strong :) Super cool! I'm glad Levi and Ramone were able to land such an impactful performance fix. I'm no longer an OSS maintainer with Pulumi, so I'll also have to defer judgment. My professional opinion is to leave this issue open it identifies a valuable optimization, and it's plausible that other providers (frankly, all providers) would benefit from this change. It might be open for a bit, since it's a gnarly one, but it's worthwhile to keep around as part of #11598 |

I agree, this will be hard but is worth looking into. |

|

Hey @justinvp I saw this ticket and I tested some of the interesting suggestion provided above. I can confirm that by calling the |

What happened?

(as discussed in https://pulumi-community.slack.com/archives/C84L4E3N1/p1677886059484479)

pulumi-kubernetes with 489 resources takes 3 minutes to run

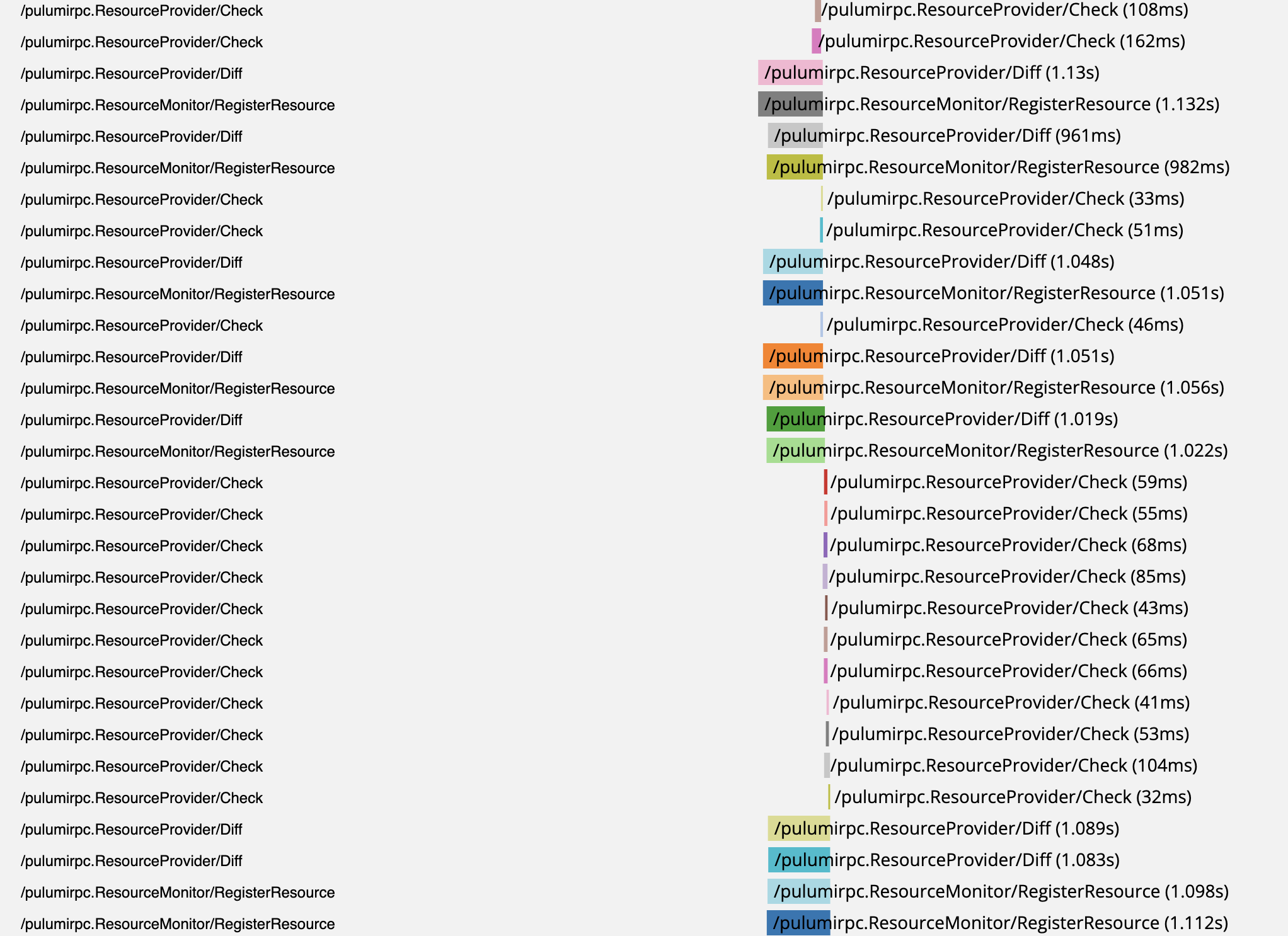

preview(without any refreshing.) This feels sad.Under profiling, we see that many RegisterResource calls are started at the same time, but appear to execute sequentially:

After digging in, I believe the problem is that pulumi-kubernetes makes API calls in its

Diffmethod: https://github.com/pulumi/pulumi-kubernetes/blob/master/provider/pkg/provider/provider.go#L1559 . The step generation logic in deployment_executor.go loops through events one-at-a-time (pulumi/pkg/resource/deploy/deployment_executor.go

Line 230 in 2e3b732

pulumi/pkg/resource/deploy/deployment_executor.go

Line 419 in 2e3b732

After changing step generation to be entirely non-blocking, we suddenly see faster execution (3 minutes -> 30 seconds) and a more heterogynous execution trace:

Expected Behavior

I expected Pulumi preview to be fast.

Steps to reproduce

Have a project with many pulumi-kubernetes resources, then generate a trace while running preview.

Output of

pulumi aboutAdditional context

I have a hacky unsafe patch that I made to verify that making step generation parallel makes a huge difference. The part I know is wrong is that errors are hidden, and instead of Pulumi terminating on an error it just hangs. I think it is likely unsafe in other ways (one guess: it crashes sometimes with

fatal error: concurrent map read and map write.)Contributing

Vote on this issue by adding a 👍 reaction.

To contribute a fix for this issue, leave a comment (and link to your pull request, if you've opened one already).

The text was updated successfully, but these errors were encountered: