:class: attention

**Important and/or related to the whole book**

- "Catching up on the weird world of LLMs" (summary of the last few years) https://simonwillison.net/2023/Aug/3/weird-world-of-llms

- "Open challenges in LLM research" (exciting post title but mediocre content) https://huyenchip.com/2023/08/16/llm-research-open-challenges.html

- https://github.com/zeno-ml/zeno-build/tree/main/examples/analysis_gpt_mt/report

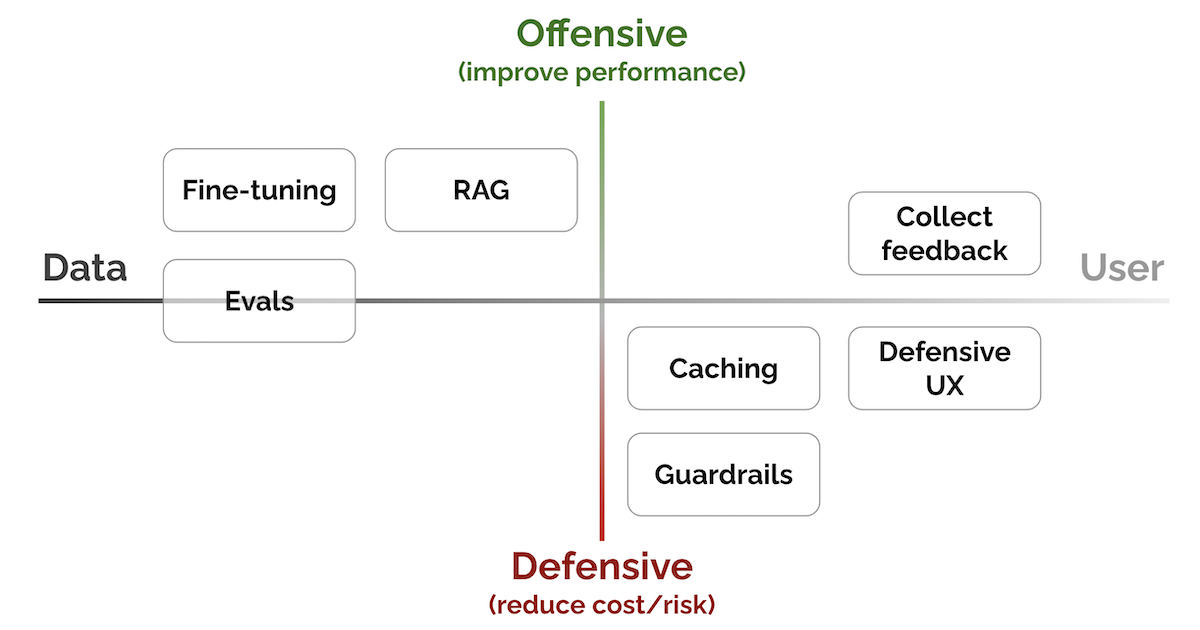

- "Patterns for Building LLM-based Systems & Products" (Evals, RAG, fine-tuning, caching, guardrails, defensive UX, and collecting user feedback) https://eugeneyan.com/writing/llm-patterns

```{figure-md} llm-patterns
:class: margin

[LLM patterns: From data to user, from defensive to offensive](https://eugeneyan.com/writing/llm-patterns)

```

- `awesome-list`s (mention overall list + recently added entries)

  + https://github.com/imaurer/awesome-decentralized-llm

  + https://github.com/huggingface/transformers/blob/main/awesome-transformers.md

  + "Anti-hype LLM reading list" (foundation papers, training, deployment, eval, UX) https://gist.github.com/veekaybee/be375ab33085102f9027853128dc5f0e

  + ... others?

- MLOps Community survey report, open questions & future interests (pages 15 & 16): https://mlops.community/wp-content/uploads/2023/07/survey-report-MLOPS-v16-FINAL.pdf

**unclassified**

Couldn't decide which chapter(s) these links belong to. They're mostly about security & optimisation. Perhaps create a new chapter?

- "How I Re-implemented PyTorch for WebGPU" (`webgpu-torch`: inference & autograd library to run NNs in the browser with negligible overhead) https://praeclarum.org/2023/05/19/webgpu-torch.html

- "LLaMA from scratch (or how to implement a paper without crying)" (misc tips, scaled-down version of LLaMA for training) https://blog.briankitano.com/llama-from-scratch

- "Swift Transformers: Run On-Device LLMs in Apple Devices" https://huggingface.co/blog/swift-coreml-llm

- "Why GPT-3.5-turbo is (mostly) cheaper than LLaMA-2" https://cursor.sh/blog/llama-inference#user-content-fn-gpt4-leak

- https://www.marble.onl/posts/why_host_your_own_llm.html

- https://betterprogramming.pub/you-dont-need-hosted-llms-do-you-1160b2520526

- "Low-code framework for building custom LLMs, neural networks, and other AI models" https://github.com/ludwig-ai/ludwig

- "GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers" https://arxiv.org/abs/2210.17323

- "RetrievalQA with LLaMA 2 70b & Chroma DB" (nothing new, but this author runs a lot of experiments if you want to follow him) https://youtu.be/93yueQQnqpM

- "[WiP] build MLOps solutions in Rust" https://github.com/nogibjj/rust-mlops-template

:style: unsrt_max_authors