proxy: Apparent connection leak #5334


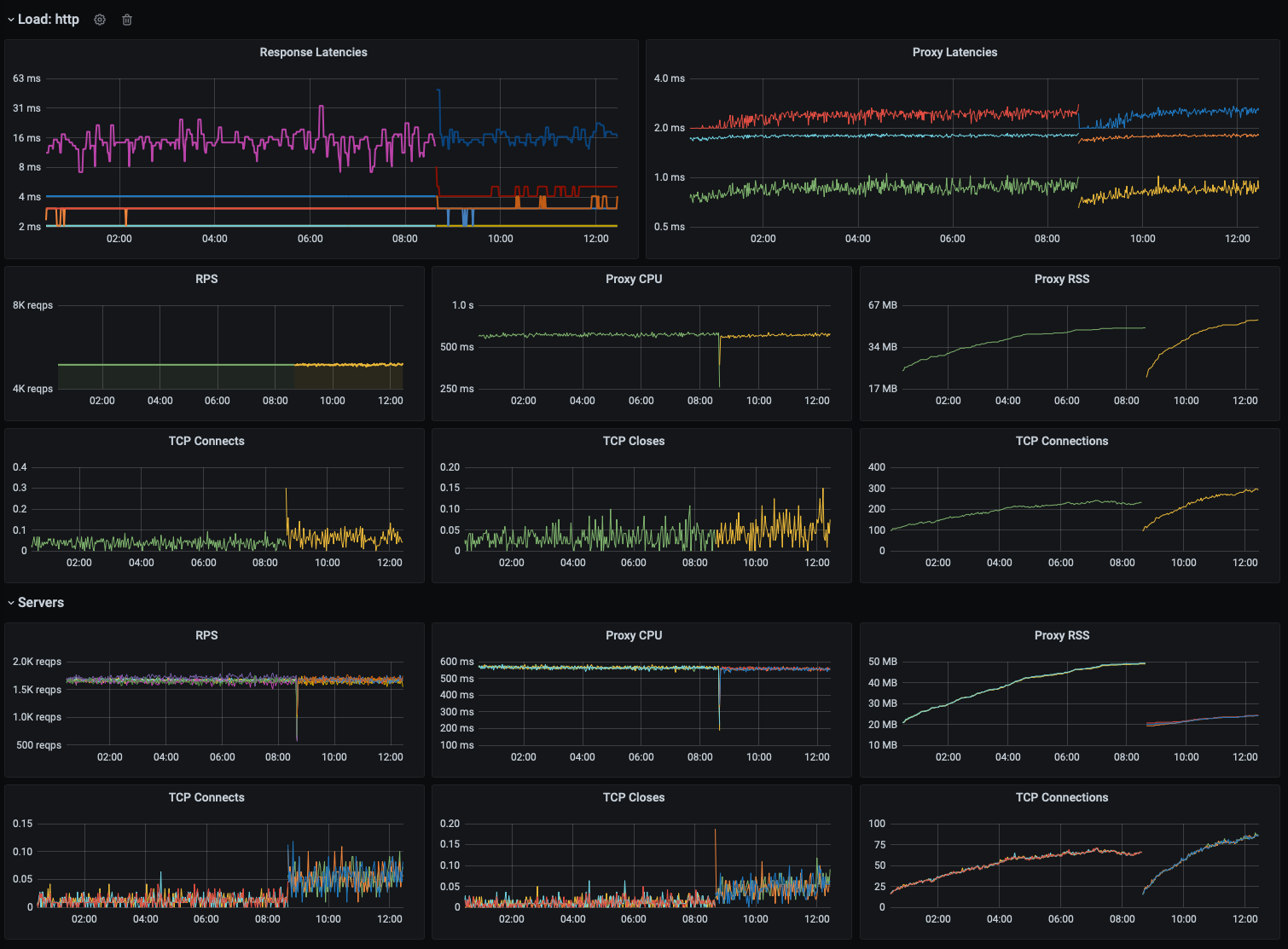

This issue persists with linkerd/linkerd2-proxy#732 and may even be slightly worse (or this may just be variance between test runs). As suspected, this appears to correlate directly with the number of connections the proxy maintains. This basically confirms that #4900 is still a legitimate issue.


It turns out that this is actually a connection leak, which manifests as increased memory usage.

olix0r added a commit to linkerd/linkerd2-proxy that referenced this issue on Dec 10, 2020

Middlewares, especially the cache, may want to use RAII to detect when a service is idle, but the TCP server and HTTP routers drop services as soon as the request is dispatched. This change modifies the HTTP server to hold the TCP stack until all work on that connection is complete. It also introduces a new `http::Retain` middleware that clones its inner service into response bodies. This is necessary for upcoming cache eviction changes to address linkerd/linkerd2#5334.
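
A minimal sketch of the `Retain` idea, assuming a synchronous `Handle` trait and a `Body` type that are hypothetical stand-ins for tower's `Service` and an HTTP body (they are not linkerd2-proxy's types). Each response body owns a clone of the service, so the service's reference count reflects in-flight responses rather than just in-flight dispatches.

```rust
use std::sync::Arc;

/// Hypothetical response body: a list of chunks (not hyper's body type).
pub struct Body {
    chunks: Vec<String>,
}

/// A body that keeps a clone of the service alive until the body is dropped.
pub struct Retained<S> {
    inner: Body,
    _service: S,
}

impl<S> Retained<S> {
    pub fn chunks(&self) -> &[String] {
        &self.inner.chunks
    }
}

/// Hypothetical synchronous stand-in for a request handler.
pub trait Handle {
    fn handle(&self, req: &str) -> Body;
}

/// Hypothetical `Retain`-style wrapper: every response carries a clone of `S`.
#[derive(Clone)]
pub struct Retain<S> {
    inner: S,
}

impl<S: Handle + Clone> Retain<S> {
    pub fn new(inner: S) -> Self {
        Retain { inner }
    }

    pub fn call(&self, req: &str) -> Retained<S> {
        let body = self.inner.handle(req);
        // Clone the service into the body so RAII tracking sees it as busy
        // until the response is fully consumed and dropped.
        Retained { inner: body, _service: self.inner.clone() }
    }
}

/// Example service holding an `Arc` so `strong_count` exposes liveness.
#[derive(Clone)]
pub struct Echo(pub Arc<()>);

impl Handle for Echo {
    fn handle(&self, req: &str) -> Body {
        Body { chunks: vec![req.to_string()] }
    }
}
```

Even after the router drops its own copy of the service, the clone inside the body keeps it alive, so an idle tracker only observes the release once the last response body is dropped.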

olix0r added a commit to linkerd/linkerd2-proxy that referenced this issue on Dec 11, 2020

Middlewares, especially the cache, may want to use RAII to detect when a service is idle, but the TCP server and HTTP routers drop services as soon as the request is dispatched. This change modifies the TCP server to hold the TCP stack until all work on that connection is complete. It also introduces a new `http::Retain` middleware that clones its inner service into response bodies. This is necessary for upcoming cache eviction changes to address linkerd/linkerd2#5334.
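
The connection-level part of this change can be illustrated in the same way. In this sketch, `Stack`, `Connection`, `accept`, `handle`, and `close` are hypothetical names, not linkerd2-proxy types. The point is only that the connection owns one `Arc<Stack>` clone from accept until close, instead of dropping the stack as soon as each request is dispatched.

```rust
use std::sync::Arc;

/// Hypothetical per-target stack; wrapped in `Arc` so we can observe how long it is held.
pub struct Stack {
    pub target: String,
}

impl Stack {
    fn serve(&self, req: &str) -> String {
        format!("{} -> {}", req, self.target)
    }
}

/// Hypothetical connection handler that holds the stack for the whole connection,
/// so anything tracking the stack's reference count sees it as active until
/// every request on the connection has been served.
pub struct Connection {
    stack: Arc<Stack>,
    served: Vec<String>,
}

impl Connection {
    pub fn accept(stack: &Arc<Stack>) -> Self {
        Connection { stack: Arc::clone(stack), served: Vec::new() }
    }

    pub fn handle(&mut self, req: &str) {
        let rsp = self.stack.serve(req);
        self.served.push(rsp);
    }

    /// Completes the connection; the stack clone is released only here.
    pub fn close(self) -> Vec<String> {
        self.served
    }
}
```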

olix0r added a commit to linkerd/linkerd2-proxy that referenced this issue on Dec 11, 2020

Cache eviction is currently triggered when a service has not processed new requests for some idle timeout. This is fragile for caches that may process a few long-lived requests. In practice, we would prefer to only start tracking idleness when there are no *active* requests on a service. This change restructures the cache to return services that are wrapped with tracking handles, rather than passing a tracking handle into the inner service. When a returned service is dropped, it spawns a background task that retains the handle for an idle timeout and, if no new instances have acquired the handle within that timeout, removes the service from the cache. This change reduces latency as well as CPU and memory utilization in load tests. Fixes linkerd/linkerd2#5334
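
The drop-triggered eviction described above can be sketched with std threads standing in for async background tasks. This is an illustration under assumptions, not linkerd2-proxy's cache: `IdleCache`, `Cached`, and `get_or_insert_with` are hypothetical names, an `Arc<()>` plays the role of the tracking handle, and a sleeping thread plays the role of the spawned task.

```rust
use std::collections::HashMap;
use std::hash::Hash;
use std::ops::Deref;
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

/// Shared map: key -> (service, tracking handle). Hypothetical layout.
type Entries<K, V> = Arc<Mutex<HashMap<K, (V, Arc<()>)>>>;

pub struct IdleCache<K, V> {
    entries: Entries<K, V>,
    idle: Duration,
}

/// A service returned from the cache, wrapped with a clone of its tracking handle.
pub struct Cached<K, V>
where
    K: Eq + Hash + Clone + Send + 'static,
    V: Send + 'static,
{
    key: K,
    value: V,
    handle: Option<Arc<()>>, // `Option` so `drop` can move it into the background task
    entries: Entries<K, V>,
    idle: Duration,
}

impl<K, V> Deref for Cached<K, V>
where
    K: Eq + Hash + Clone + Send + 'static,
    V: Send + 'static,
{
    type Target = V;
    fn deref(&self) -> &V {
        &self.value
    }
}

impl<K, V> Drop for Cached<K, V>
where
    K: Eq + Hash + Clone + Send + 'static,
    V: Send + 'static,
{
    fn drop(&mut self) {
        if let Some(handle) = self.handle.take() {
            let entries = Arc::clone(&self.entries);
            let key = self.key.clone();
            let idle = self.idle;
            // Background task: hold the handle for one idle timeout, then evict
            // only if no other instance of this service still holds the handle.
            thread::spawn(move || {
                thread::sleep(idle);
                let mut map = entries.lock().unwrap();
                // Skip entries that were rebuilt with a fresh handle in the meantime.
                let same = map.get(&key).map_or(false, |(_, h)| Arc::ptr_eq(h, &handle));
                // 2 == the cache's copy + this task's copy: no live instances remain.
                if same && Arc::strong_count(&handle) == 2 {
                    map.remove(&key);
                }
            });
        }
    }
}

impl<K, V> IdleCache<K, V>
where
    K: Eq + Hash + Clone + Send + 'static,
    V: Clone + Send + 'static,
{
    pub fn new(idle: Duration) -> Self {
        IdleCache { entries: Arc::new(Mutex::new(HashMap::new())), idle }
    }

    /// Returns the cached service for `key`, building it with `make` if absent.
    pub fn get_or_insert_with(&self, key: K, make: impl FnOnce() -> V) -> Cached<K, V> {
        let mut map = self.entries.lock().unwrap();
        let (value, handle) = map.entry(key.clone()).or_insert_with(|| (make(), Arc::new(())));
        Cached {
            key,
            value: value.clone(),
            handle: Some(Arc::clone(handle)),
            entries: Arc::clone(&self.entries),
            idle: self.idle,
        }
    }

    pub fn len(&self) -> usize {
        self.entries.lock().unwrap().len()
    }
}
```

Acquiring a service and checking the handle count both happen under the same lock, so a task cannot evict an entry while a new instance is being handed out. Idleness is only measured once every returned instance has been dropped, which matches the goal of not evicting services that are serving long-lived requests.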

olix0r added a commit to linkerd/linkerd2-proxy that referenced this issue on Dec 12, 2020

Cache eviction is currently triggered when a service has not processed new requests for some idle timeout. This is fragile for caches that may process a few long-lived requests. In practice, we would prefer to only start tracking idleness when there are no *active* requests on a service. This change restructures the cache to return services that are wrapped with tracking handles, rather than passing a tracking handle into the inner service. When a returned service is dropped, it spawns a background task that retains the handle for an idle timeout and, if no new instances have acquired the handle within that timeout, removes the service from the cache. This change reduces latency as well as CPU and memory utilization in load tests. Furthermore, it ultimately eliminates the need for a specialized buffer implementation. Fixes linkerd/linkerd2#5334


I've been running a burn-in/load test that highlights what appears to be a memory leak in recent proxies.

The load generators are configured with:

Over 8 hours, we observe sustained RSS growth for both the HTTP/1 load generator and the target servers (which serve both HTTP/1 and gRPC traffic). The gRPC load generator does not exhibit this behavior. The HTTP/1 load generator's rate of growth appears to slow over time, but it does not seem to stabilize completely.
