Envtest #382


A minimal version that creates a temporary cluster without affecting https://gist.github.com/kazk/3419b8dd0468e1640c4575c638ef590b

    #[tokio::test]
    async fn test_integration() {
        let test_env = TestEnv::new();
        let client = test_env.client().await;
        let pods: Api<Pod> = Api::default_namespaced(client);
        let _pod = pods.get("example").await.unwrap();
    }
    // Multiple tests each creating a cluster can run in parallel too

I think we can extend this (make it configurable, load CRDs, assertion helpers, etc.) to get a pretty good test helper.


I think this would be a fantastic out-of-the-box thing to have, particularly if it can be done without screwing over the kubeconfig! One philosophical aspect about this, though: should we be optimising this to be a general integration test setup that you only need a handful of (because of the spin-up cost), or something that can do tons of little verifications in dynamic namespaces? If each

Maybe we should have both? Namespaces are a good ideal, but the approach breaks down as soon as you want to test something cluster-scoped. The question would be how to ensure that we tear down the shared testenv in the end, though. :/
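One way the teardown question could be approached is reference counting: every test holds a handle to the shared environment, and cleanup runs when the last handle is dropped. Rust never runs destructors for `static` values at process exit, so the sketch below only keeps a `Weak` pointer in the static. `SharedEnv`, `shared_env` and `TEARDOWNS` are hypothetical names for illustration, and the `Drop` body just bumps a counter where a real helper would delete the cluster.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Mutex, Weak};

/// Counts teardowns so the behaviour can be observed.
/// A real helper would delete the cluster instead (assumption).
static TEARDOWNS: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical stand-in for a shared test cluster.
struct SharedEnv {
    name: String,
}

impl Drop for SharedEnv {
    fn drop(&mut self) {
        // Runs exactly once per environment, when the last `Arc<SharedEnv>` is dropped.
        TEARDOWNS.fetch_add(1, Ordering::SeqCst);
    }
}

/// Weak handle to the live environment, if any test still holds it.
/// Only a `Weak` is stored, so the static itself never keeps the environment alive.
static CURRENT: Mutex<Option<Weak<SharedEnv>>> = Mutex::new(None);

/// Returns the shared environment, creating it if no test currently holds one.
fn shared_env() -> Arc<SharedEnv> {
    let mut slot = CURRENT.lock().unwrap();
    // Reuse the live environment if another test still holds a strong reference.
    if let Some(env) = slot.as_ref().and_then(Weak::upgrade) {
        return env;
    }
    // Otherwise create a fresh one and remember a weak handle to it.
    let env = Arc::new(SharedEnv {
        name: "shared-test-env".to_string(),
    });
    *slot = Some(Arc::downgrade(&env));
    env
}
```

The trade-off is that teardown happens as soon as no test holds the environment, so with sequential tests it may be torn down and recreated between them.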

Maybe I'm misunderstanding, but this setup can run multiple tests in parallel, each creating a cluster, without issues.

It's actually much faster than I had imagined. The example above finishes in about 12s on my laptop. Running 5 of them (just repeated with different names) finished in 36s. From the test output, it seems like

For a shared cluster setup with dynamic namespaces, maybe a helper macro that expands into a test case would work. Each test is passed a configured client with the default namespace set. Use
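A rough sketch of what such a helper macro might look like, using only the standard library. The macro name `namespaced_test!` and the function `unique_namespace` are made up for illustration. The sketch only generates a unique namespace name per test; a real helper would also create the namespace in the shared cluster and hand the test a client scoped to it (for example via `Api::namespaced`).

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Process-wide counter so that tests running in parallel never share a namespace.
static NEXT_ID: AtomicUsize = AtomicUsize::new(0);

/// Builds a namespace name that is unique within this test process.
fn unique_namespace(test_name: &str) -> String {
    let id = NEXT_ID.fetch_add(1, Ordering::Relaxed);
    // Namespace names must be lowercase DNS labels, so `_` becomes `-`.
    // Length limits are not enforced in this sketch.
    format!("test-{}-{}", test_name.replace('_', "-"), id)
}

/// Hypothetical macro: expands into a `#[test]` function whose body
/// receives a fresh namespace name bound to the given identifier.
macro_rules! namespaced_test {
    ($name:ident, |$ns:ident| $body:block) => {
        #[test]
        fn $name() {
            let $ns: String = unique_namespace(stringify!($name));
            $body
        }
    };
}

// Usage: the test body sees `ns`, a name no other test in this process gets.
namespaced_test!(creates_pod, |ns| {
    assert!(ns.starts_with("test-creates-pod-"));
});
```

The counter only guarantees uniqueness within one test process; runs that share a cluster across processes would need something extra in the name, such as a random suffix.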


Another thing: many tests probably shouldn't need anything other than the API and maybe the controllers, which should get the provisioning time down a fair bit too. The container runtime especially can be pretty slow and annoying to run in sandboxed environments (such as Nix).


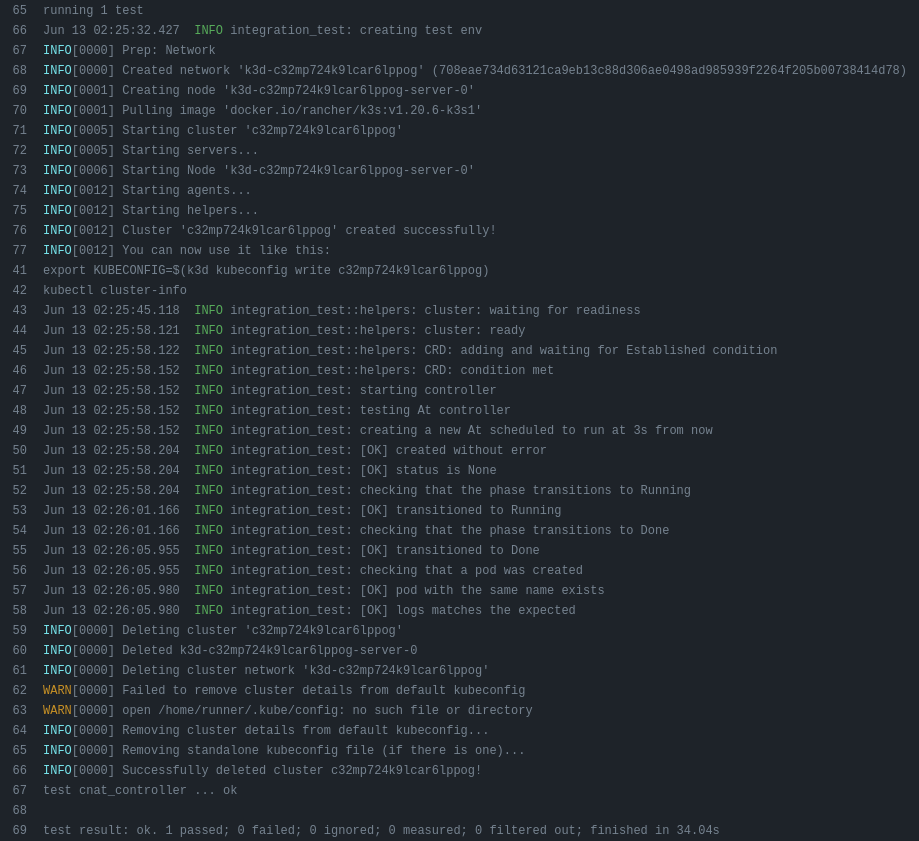

I tried writing an integration test for my example controller https://github.com/kazk/cnat-rs. See https://github.com/kazk/cnat-rs/tree/main/tests. GitHub Actions:

Multiple tests like this can run in parallel just fine.

To create a minimal cluster:

    let test_env = TestEnv::new();

Builder to enable features (not much is configurable at the moment):

    let test_env = TestEnv::builder()
        .servers(1)
        .agents(3)
        .inject_host_ip()
        .build();

If these look like a good start, we can create

I can't think of a way to put each of the small tests in a separate
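For readers who have not looked at the linked repo, here is a minimal sketch of how a builder with those method names could be structured. It is not the code from the repo: `TestEnvConfig`, `TestEnvBuilder`, the defaults and `k3d_args` are all assumptions, and it assumes the helper shells out to `k3d cluster create` with its `--servers` and `--agents` flags. How `inject_host_ip` is applied is not shown because the thread does not say.

```rust
/// Hypothetical configuration mirroring the builder calls quoted above.
#[derive(Debug, Clone, PartialEq)]
struct TestEnvConfig {
    servers: u8,
    agents: u8,
    inject_host_ip: bool,
}

/// Hypothetical builder; each method consumes and returns `self` so calls can be chained.
#[derive(Debug)]
struct TestEnvBuilder {
    config: TestEnvConfig,
}

impl TestEnvBuilder {
    fn new() -> Self {
        // Assumed defaults: a single server, no agents, no host IP injection.
        Self {
            config: TestEnvConfig {
                servers: 1,
                agents: 0,
                inject_host_ip: false,
            },
        }
    }

    fn servers(mut self, n: u8) -> Self {
        self.config.servers = n;
        self
    }

    fn agents(mut self, n: u8) -> Self {
        self.config.agents = n;
        self
    }

    fn inject_host_ip(mut self) -> Self {
        self.config.inject_host_ip = true;
        self
    }

    fn build(self) -> TestEnvConfig {
        self.config
    }
}

impl TestEnvConfig {
    /// Arguments for a `k3d cluster create` invocation (assumes k3d is the backend).
    /// Only the node counts are translated; `inject_host_ip` is left out on purpose.
    fn k3d_args(&self, cluster_name: &str) -> Vec<String> {
        vec![
            "cluster".to_string(),
            "create".to_string(),
            cluster_name.to_string(),
            "--servers".to_string(),
            self.servers.to_string(),
            "--agents".to_string(),
            self.agents.to_string(),
        ]
    }
}
```

Keeping the configuration as plain data separate from the code that starts the cluster makes the builder easy to unit test without k3d installed.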

I think this would be super useful. Sorry it took so long to get back on this. A

Happy to review as usual, but the code inside your test repo already looks great as a first release imo.

Also, very cool repo! Did you build

Maybe that type of parallelisation is best left up to the user if the default behaviour would be confusing.


No problem, I've been busy too. I'll open a PR with mostly the same code and more docs.

I was going to, but only built their

Yeah, it's interesting to see what's necessary to implement the same thing. I love how straightforward the

Going to close as it's impractical to do something like this inside

Some reasons are outlined in #564 (comment), but basically: k3d is not the most reliable way to test, so we should probably not encourage spinning up multiple clusters as part of tests as a main testing method (people who want to do that should do it in CI configuration, where they know what machine they are dealing with). Easier ways to test are through mocking, or through idempotent integration tests using k3d (with some care taken to work in non-isolated environments), as outlined in https://kube.rs/controllers/testing/

Something closer to the original

It would be nice to support something like controller-runtime's envtest helper for starting a minimal cluster per test.

https://book.kubebuilder.io/cronjob-tutorial/writing-tests.html
