New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Hazelcast 4.0 Memory Leak Detected #16672

Comments

|

Thank you for the report @pkgonan! Could you please share your heap and GC settings as well if anything specified? I see metrics related objects in the report you attached, something introduced in 4.0. Could you please try disabling the metrics entirely and see if it makes the issue to disappear: |

|

Also, do you have GC logs or statistics? The unreachable objects means the GC can free them up on the next run, but I suppose it runs infrequently so some garbage can pile up in the heap. Judging based on the memory chart and assuming the scarps on it are anyhow related to the GC activity, it runs approximately once an hour, while metrics are collected every 5 seconds. So if that's the case, metrics related garbage is expected. It's not clear if the memory chart shows the machine's memory or the JVM's heap, I guess it's the former. Having that said, if the issue disappears after the metrics collection got disabled with the above config, it means only the pattern you see is caused by that, but it's not necessarily a leak. Could you please also enable and send the GC logs? That should tell more. You can enable the GC log by adding the following to the JVM arguments: |

|

@blazember Thank you for your reply. [Hazelcast 4.0 With Ignore Metric Memory Pattern] [My G1 GC setting] But, i can not understand why this situation is not memory leak. I will append gc logs that you requested. |

|

@pkgonan great, we identified the main cause. I still see a very minimal increase though in the last screenshot as well, but it can be caused by anything.

In the JVM heap a memory leak occurs if references to objects no longer in use are retained, preventing these objects from freed up by the GC. In the end, the GC will struggle with freeing up memory for new allocations and Besides the GC log, another idea is that you can capture the output of the following periodically, let's say once an hour: It doesn't require an application restart. The first shows low-level GC related JVM metrics such as GC invocation count and memory pool metrics. The second shows the top of the class histogram of the reachable objects. After a few samples, it should give an idea of what's going on. Note that this I will try to reproduce this with the JVM arguments you provided. Will get back to you with my findings. |

|

@blazember Thank you |

|

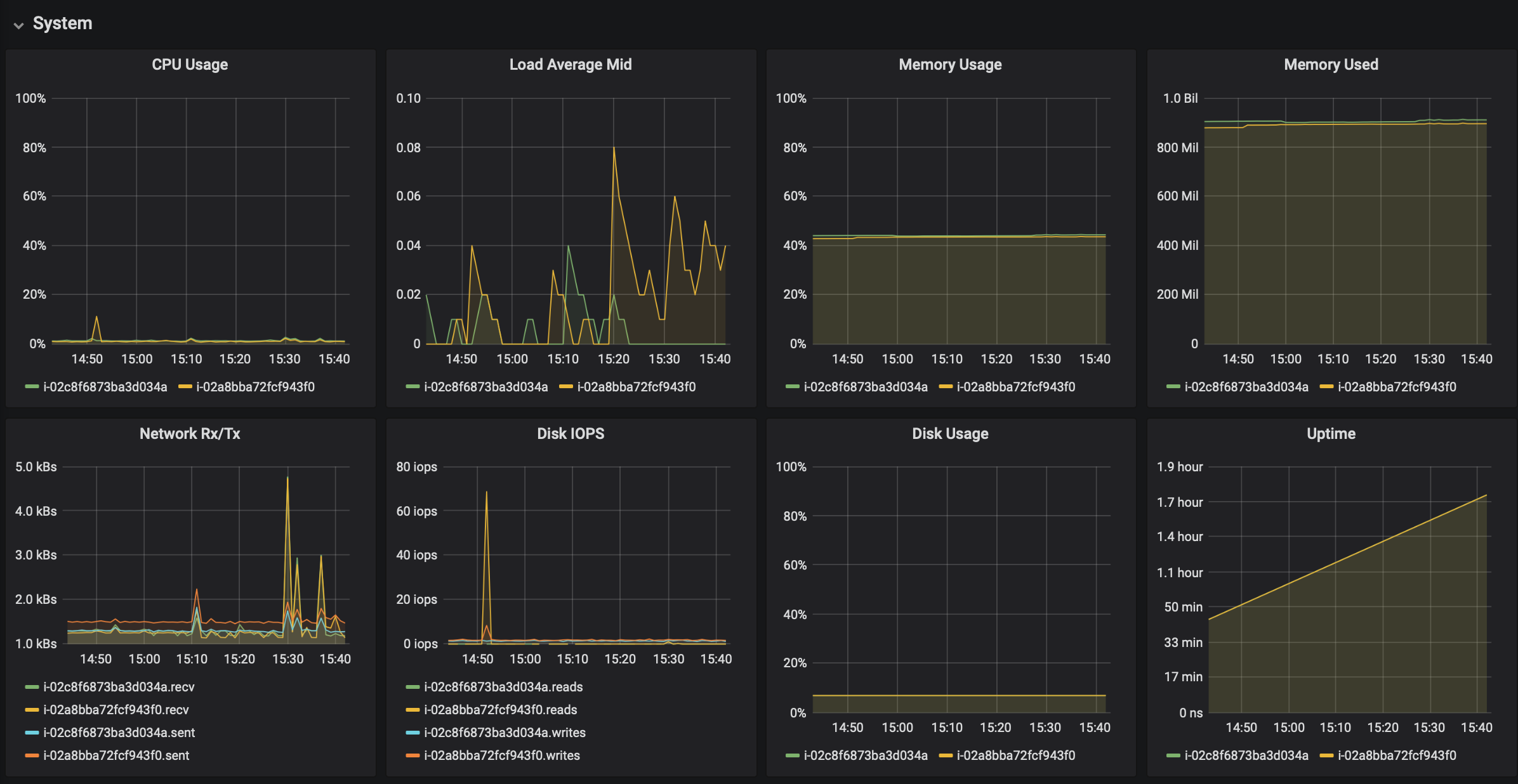

Thank you @pkgonan! This GC log shows no symptoms of leaking. You can analyze it with https://gceasy.io/ if you wish and check the My local test showed the same results. I ran two HZ members on the same machine, one with metrics disabled and the other with metrics enabled. I used the configuration and JVM arguments you provided, with the only exception of making metrics collection more frequent (1s instead of the default5s) to show results earlier. Both allocate memory, but the member with metrics enabled allocates more, as expected. Also, see how the machine memory chart looked at the same time: It started with a flat chart and after the JVMs hosting the HZ members started the used memory started increasing, despite that the JVM heap charts looked healthy in the meantime. This is because the JVM needs to warm up, which amongst other things comes with allocating, resizing its internal memory pools. The warmup time depends on the hosted application and I believe this is what you see if you compare the two charts with metrics enabled and disabled. Therefore, it is advisable to monitor the JVM heap besides monitoring the machine memory. For this, one option is Jolokia: https://jolokia.org/. Telegraf, for example, has a Jolokia input plugin: https://docs.influxdata.com/telegraf/v1.13/plugins/plugin-list/#jolokia2_agent, also there is a ready-to-use Grafana Jolokia dashboard: https://grafana.com/grafana/dashboards/8991. Note that Jolokia is a Java agent bridging JMX to HTTP, therefore it comes with some additional allocation. I think we clarified that what you see is not a leak. Please let me know if you have further questions or if we can close the issue as explained. |

|

@blazember I will check heap memory usage not physical memory.! |

|

just some update info |

Hazelcast 4.0 memory leak detected.

First, i setup Hazelcast 4.0 only one instance server in aws elastic beanstalk.

Memory usage increased even though doing nothing.

Not connected any client, and did not touch anything.

Second, setup Hazelcast 3.12.6, it does not increased memory looks like hazelcast 4.0 memory pattern.

[Hazelcast 4.0 Memory Pattern]

[Hazelcast 4.0 Memory Pattern About 4 Days]

[Hazelcast 3.12.6 Memory Pattern]

[About JVM Memory Analysis]

I analyzed heap dump, and there are too many unreachable objects founded.

Unreachable objects are 99.6 percentage of total heap size, and they seems not collected by garbage collector.

[1. Temporary Share Link]

https://heaphero.io/my-heap-report.jsp?p=YXJjaGl2ZWQvMjAyMC8wMi8xOS8tLWhlYXBkdW1wLmhwcm9mLTE2LTQ4LTQ5Lmpzb24tLQ==

[2. PDF file]

heapReport-2020-02-20-01-50.pdf

[Config File]

The text was updated successfully, but these errors were encountered: