
.NET 6 Managed ThreadPool / Thread performance and reliability degradations

#62967

Comments

|

I couldn't figure out the best area label to add to this issue. If you have write-permissions please help me learn by adding exactly one area label. |

|

Tagging subscribers to this area: @mangod9

Issue Details

Description

Since upgrading Akka.NET's test suite to run on .NET 6, we've noticed two major classes of problems that occur on .NET 6 only:

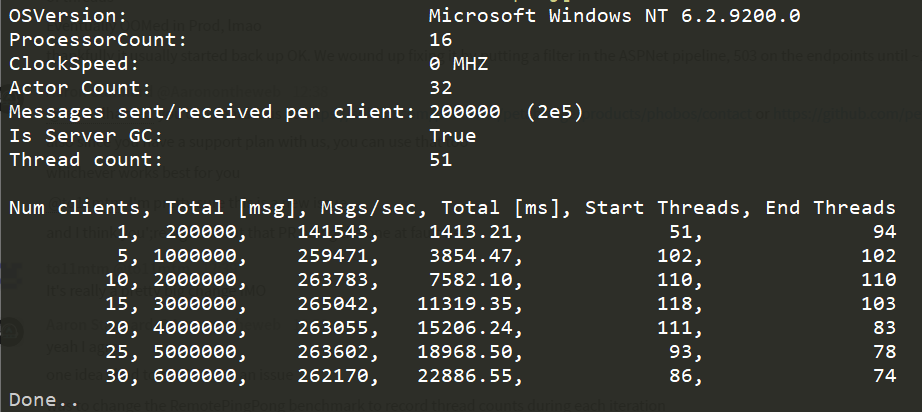

We run the exact same tests and benchmarks on .NET Core 3.1 and .NET Framework 4.7.1, and neither of those platforms exhibits these symptoms (large performance drops, long delays in execution).

Configuration

Machine that was used to generate our benchmark figures:

BenchmarkDotNet=v0.13.1, OS=Windows 10.0.19041.1348 (2004/May2020Update/20H1)

AMD Ryzen 7 1700, 1 CPU, 16 logical and 8 physical cores

.NET SDK=6.0.100

Machine that generates our test failures:

Regression?

.NET Core 3.1 numbers:

.NET 6 numbers:

We've observed similar behavior in some of our non-public repositories as well.

Relevant benchmark: https://github.com/akkadotnet/akka.net/tree/dev/src/benchmark/RemotePingPong

Managed

Analysis

We're performing some testing now using the

|

|

Thanks for reporting these issues @Aaronontheweb. We will take a look soon (but might be delayed due to holidays). cc: @kouvel |

|

@mangod9 @kouvel 100% understood. We've been trying to research this problem on our end before we brought it to the CoreCLR team's attention since we know your time is valuable, scarce, and spread across many projects. We will continue to do that and try to provide helpful information to you and your team. |

|

Sounds good. Yeah, please update this issue if you find interesting info. With the managed ThreadPool changes in .NET 6, some pattern differences are expected, but we're hoping we can remedy them, especially the reliability concerns. |

|

What I would ideally like to get out of this issue:

Either outcome would be fine. |

@Aaronontheweb, did you get a chance to see if disabling that config option changes anything in your tests? The portable thread pool implementation is not intended to change any thread pool heuristics; it is mostly just a switch from a native implementation to a managed implementation for a portion of the thread pool. As such, there could be expected timing differences, and perhaps unexpected behavior differences, but disabling the portable thread pool with that config option would be a good first step to narrow things down. Doing so would cause the thread pool to work in pretty much exactly the same way as before; not much at all has changed there since 3.1. |
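For anyone following along, here is a minimal sketch of what disabling that option might look like from a shell, using the `COMPlus_ThreadPool_UsePortableThreadPool` environment variable named in the issue description. Treat the value `0` as an assumption about how this knob is switched off. The commented-out `dotnet run` line is a hypothetical invocation, not the exact command used for the numbers in this thread.

```shell
# Assumption: setting the knob to 0 turns the portable (managed) thread pool
# off, so .NET 6 uses the native implementation for comparison runs.
export COMPlus_ThreadPool_UsePortableThreadPool=0

# Show the value that child processes started from this shell will inherit.
echo "COMPlus_ThreadPool_UsePortableThreadPool=${COMPlus_ThreadPool_UsePortableThreadPool}"

# Hypothetical invocation, left commented out; adjust the project path and
# target framework to match your checkout of the benchmark.
# dotnet run -c Release --framework net6.0 --project src/benchmark/RemotePingPong
```

The variable has to be set in the environment of the process that launches the benchmark or test run. On Windows `cmd`, the equivalent would be `set COMPlus_ThreadPool_UsePortableThreadPool=0` before starting the run.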

|

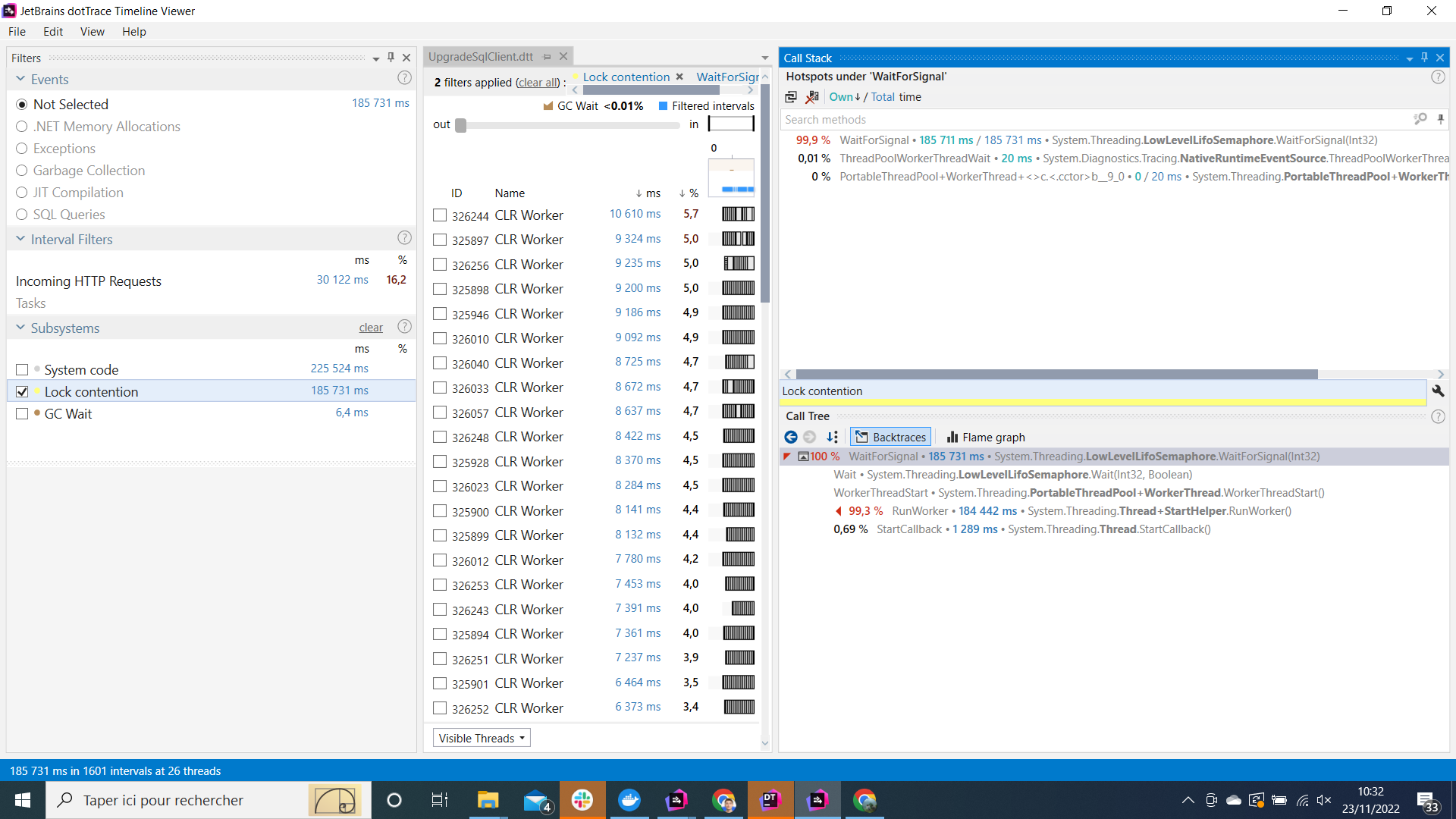

We did gather some additional evidence that the rate of thread injection might be interfering with the dedicated thread we use for scheduling (a hashed wheel timer running on a fixed interval), and this issue becomes more prevalent on machines with low vCPU counts (such as AzDo agents), but we need to pick up research on this again. Things slowed down a bit over the holidays.

Is there a specific PerfCounter or ETW event I can look for during profiling to compare the rate of thread injection on .NET Core 3.1 vs. .NET 6 with some of our affected benchmarks? |

|

I see. One difference is that the portable thread pool implementation uses The value returned by

It may be useful to see the following event to see if starvation is occurring:

It indicates starvation if the event data contains

If, on the other hand, thread scheduling is preventing the dedicated thread from getting scheduled and you're looking to determine how many thread pool threads are active at a given time, there isn't an event for that, but it can be determined in code periodically by subtracting the values gotten from |

|

I've made more data available here: akkadotnet/akka.net#5385 (comment)

Using the values from

Both .NET Core 3.1 and .NET 6 start reducing thread counts around the same intervals and the thread counts are roughly the same, but there's no loss of performance when .NET Core 3.1 does this. |

|

Thread pool worker threads only exit after about 20 seconds of not being used, and thread pool IO completion threads exit after about 15 seconds of not being used. Hill climbing oscillates the worker thread count initially and slowly seems to stabilize near the proc count. So the worker threads that are exiting are just the ones not being used anymore. It's the same behavior on .NET Core 3.1 and .NET 6, so I don't think that would be the cause.

I tried running the benchmark on my 12-proc x64 Windows machine. I'm seeing something similar, though not exactly the same, and I'm sometimes seeing a similar throughput drop in .NET Core 3.1 too. I'm also seeing a few different multimodal kinds of results, similarly on both .NET Core 3.1 and .NET 6. The following results are with hill climbing disabled; I'll explain that below.

.NET Core 3.1

Run 1:

Run 2:

.NET 6

Run 1:

Run 2:

After seeing some results like that, just to be sure, I also disabled hill climbing (

Can you try to disable hill climbing and try with .NET 6 on your machine again to see if the same issue shows up? It may also be useful to profile a run with PerfView on your machine and see what's happening differently during the time range where throughput is lower in .NET 6 compared with .NET Core 3.1. |

|

Also tried running the benchmark on a 32-proc Zen 3 Ryzen processor. There I'm seeing some variance in the numbers between runs, though relatively less, and the numbers are much more stable during a run at different client counts, on both .NET Core 3.1 and .NET 6. Throughput seems to be slightly better on .NET 6 on average.

.NET Core 3.1

.NET 6 |

|

Thanks for running this!

Are the Ryzen numbers with hill-climbing disabled? |

No I just ran them with default settings. |

|

Any news or insight on this subject? |

|

is this fixed yet? |

|

@Aaronontheweb it seems this was resolved by #68881 according to akkadotnet/akka.net#5385 (comment). Can this issue be closed, or is it tracking something else? |

|

It can be closed! Thank you! |

Description

Since upgrading Akka.NET's test suite to run on .NET 6, we've noticed two major classes of problems that occur on .NET 6 only:

Thread behavior that causes tools such as our HashedWheelTimerScheduler to no longer be reliable - i.e. https://github.com/akkadotnet/akka.net/blob/60807febbc63c249a113f4768ad597bcb55bf56e/src/core/Akka.Tests/Actor/Scheduler/TaskBasedScheduler_TellScheduler_Cancellation_Tests.cs#L128-L150 fails, which is odd given that that code runs on its own dedicated thread. It looks as though the Thread is totally unable to start or get scheduled within a reasonable period of time (i.e. 3 seconds). We run our test suite sequentially so we don't have load problems that could cause this.

We run the exact same tests and benchmarks on .NET Core 3.1 and .NET Framework 4.7.1, and neither of those platforms exhibits these symptoms (large performance drops, long delays in execution).

Configuration

Machine that was used to generate our benchmark figures:

Machine that generates our test failures:

windows-2019 shared agent on Azure DevOps.

Regression?

.NET Core 3.1 numbers:

.NET 6 numbers:

We've observed similar behavior in some of our non-public repositories as well.

Relevant benchmark:

https://github.com/akkadotnet/akka.net/tree/dev/src/benchmark/RemotePingPong

Managed ThreadPool-only benchmark (most relevant for this issue): akkadotnet/akka.net#5386

Analysis

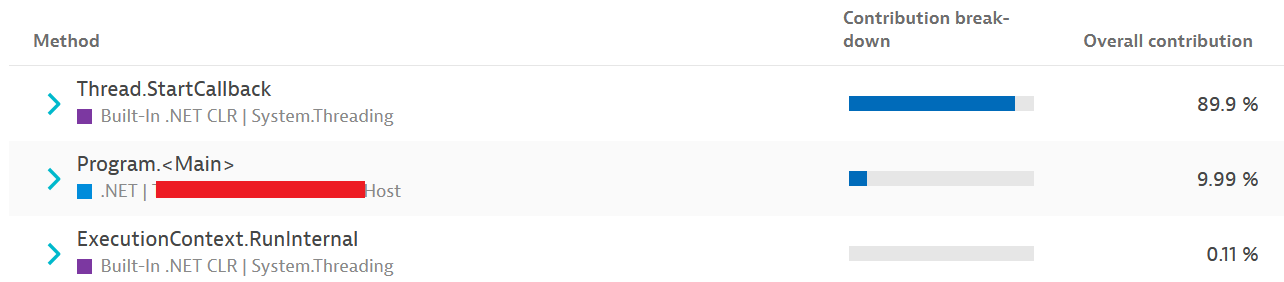

We're performing some testing now using the COMPlus_ThreadPool_UsePortableThreadPool environment variable to see how it affects our benchmark and testing behavior (see akkadotnet/akka.net#5441), but we believe that these two issues may be related to the problems we've been observing on .NET 6: