dockerd: high memory usage #848

Comments

@ceecko, could you provide steps to reproduce? With the current description of the issue, it is hard to nail down what is happening on your system.

@andrewhsu I understand. I don't have any specific steps. We run tens of servers with 32GB of memory where containers come and go, and all of them experience this high memory usage over time, usually within 2-4 weeks. Is there any debugging information I can get you to see what's using the memory?

@andrewhsu I managed to reproduce the issue. After running the following script, memory usage jumps to 262MB. Fluentd appears to run OK and accepts logs. Removing all containers does not decrease memory usage.

@andrewhsu is there any other information which would be useful?

@ceecko, can you please collect memory usage dumps and share them with us? The following article explains how to do that: https://success.docker.com/article/how-do-i-gather-engine-heap-information

Attached you can find the files. There appears to be an error in the output.

I am running into a similar problem on one of my Kubernetes clusters. Over 4 weeks, dockerd memory consumption climbs from 1.5 GB to 54 GB. Only a reboot temporarily solves the problem. Docker commands such as docker stats also become unresponsive when the memory usage starts rising. This happens on 18.06.2-ce on Debian stretch. The problem does not happen on another cluster composed of nodes running CoreOS, which have the same deployments.

@ceecko, thanks for the dump. It seems to capture only a very small portion, showing 25MB of allocated objects. The SVG is not working for you because the golang image does not have Graphviz installed, and Graphviz is what generates that SVG.

The dump has been taken at a time when Maybe I'm reading the output of

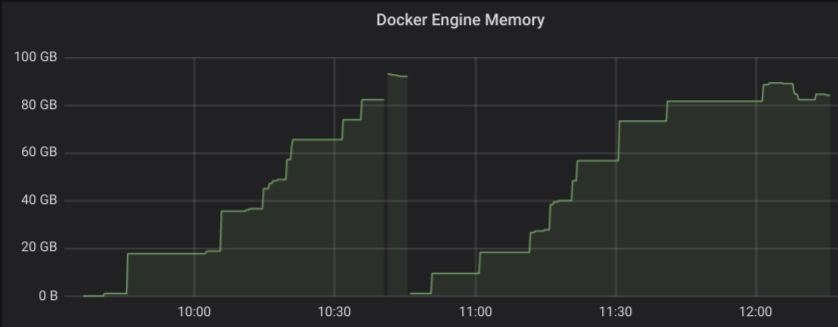

I can confirm this memory leak. Each container deployment with

Here's a Grafana graph of dockerd memory usage (

In our case this leads to

Also, this seems to be a regression: only 19.x Docker engines seem to be affected. 18.x pprof results: dockerd_fluentd_async_leak.tar.gz

Tested with:

I'm also seeing pprof results similar to @ceecko's; the memory usage reported by the pprof output (at least the SVGs) is much lower than the memory usage reported by the OS and Docker's own
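
One plausible contributor to this gap, in any Go program and not confirmed for dockerd specifically: a Go heap profile describes allocated heap objects, while the OS also counts memory the runtime has freed but not yet returned (idle spans), space wasted inside spans, goroutine stacks, and any non-Go memory. The minimal sketch below is illustrative only; `memSnapshot`, `snapshot`, and `churn` are hypothetical helpers, and only the `runtime.MemStats` fields are real. It creates a burst of short-lived buffers and then prints a few fields that separate "live heap" from "memory held by the runtime". Note that `Sys` is virtual memory reserved by the runtime, which is not the same thing as RSS.

```go
package main

import (
	"fmt"
	"runtime"
)

// memSnapshot copies a few runtime.MemStats fields that help explain why a
// heap profile can report much less memory than the OS does for a process.
type memSnapshot struct {
	HeapAlloc    uint64 // bytes of allocated heap objects (roughly what a heap profile covers)
	HeapInuse    uint64 // bytes in in-use spans, including space not occupied by objects
	HeapIdle     uint64 // bytes in idle spans that currently hold no objects
	HeapReleased uint64 // portion of HeapIdle already returned to the OS
	Sys          uint64 // virtual memory obtained from the OS by the Go runtime (not RSS)
}

// snapshot reads the current runtime memory statistics.
func snapshot() memSnapshot {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return memSnapshot{
		HeapAlloc:    m.HeapAlloc,
		HeapInuse:    m.HeapInuse,
		HeapIdle:     m.HeapIdle,
		HeapReleased: m.HeapReleased,
		Sys:          m.Sys,
	}
}

// churn allocates n buffers of the given size and then drops them,
// loosely mimicking bursts of short-lived log-message buffers (hypothetical).
func churn(n, size int) int {
	bufs := make([][]byte, 0, n)
	for i := 0; i < n; i++ {
		bufs = append(bufs, make([]byte, size))
	}
	return len(bufs)
}

func main() {
	churn(1000, 64<<10) // roughly 64 MiB of garbage
	runtime.GC()        // the buffers are unreachable now and get collected

	s := snapshot()
	fmt.Printf("HeapAlloc:          %d MiB\n", s.HeapAlloc>>20)
	fmt.Printf("HeapInuse:          %d MiB\n", s.HeapInuse>>20)
	// HeapIdle minus HeapReleased is memory the runtime retains but could return.
	fmt.Printf("retained idle heap: %d MiB\n", (s.HeapIdle-s.HeapReleased)>>20)
	fmt.Printf("Sys:                %d MiB\n", s.Sys>>20)
}
```

If the retained idle heap or `Sys` stays far above `HeapAlloc`, a heap profile alone will understate what the OS reports, even without a leak of live objects.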

@thaJeztah is there any other information you need?

We are also facing a similar problem where the Docker daemon consumes 5GB+ of memory, but the Go pprof heap shows only ~1GB, and it is caused by the log driver. docker_heap.pb.gz

Steps to reproduce the behavior

Daemon configuration:

Docker info:

Additional environment details (AWS, VirtualBox, physical, etc.)

I think this is related to excessive allocations rather than an actual leak.
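
To illustrate the difference between a leak and allocation churn, here is a minimal Go sketch. It is not the change made in moby/moby#40796; `formatAlloc`, `formatPooled`, and `bufPool` are hypothetical names. Building every log line in a fresh buffer creates a lot of short-lived garbage, and because the Go runtime returns freed memory to the OS gradually, a high allocation rate can keep a process's footprint high even when nothing stays reachable. Reusing buffers through `sync.Pool` is one common way to reduce that churn.

```go
package main

import (
	"bytes"
	"fmt"
	"sync"
	"testing"
)

// formatAlloc builds a log line in a freshly allocated buffer on every call.
// Under heavy log volume this produces many short-lived allocations.
func formatAlloc(container, msg string) string {
	var buf bytes.Buffer
	buf.WriteString(container)
	buf.WriteString(": ")
	buf.WriteString(msg)
	return buf.String()
}

// bufPool hands out reusable buffers so the hot path allocates less.
var bufPool = sync.Pool{
	New: func() interface{} { return new(bytes.Buffer) },
}

// formatPooled produces the same output as formatAlloc but reuses a pooled buffer.
func formatPooled(container, msg string) string {
	buf := bufPool.Get().(*bytes.Buffer)
	buf.Reset()
	buf.WriteString(container)
	buf.WriteString(": ")
	buf.WriteString(msg)
	s := buf.String() // String copies the bytes, so buf can be reused safely
	bufPool.Put(buf)
	return s
}

func main() {
	// testing.AllocsPerRun reports the average number of heap allocations per call.
	fresh := testing.AllocsPerRun(1000, func() { _ = formatAlloc("web", "request handled") })
	pooled := testing.AllocsPerRun(1000, func() { _ = formatPooled("web", "request handled") })
	fmt.Printf("allocs/op: fresh=%.0f pooled=%.0f\n", fresh, pooled)
}
```

Exact allocation counts depend on the Go version and escape analysis, so treat the printed numbers as a rough comparison rather than a benchmark.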

I believe moby/moby#40796 should fix the problem.

@cpuguy83 Thanks for looking at this. Just to be clear, the initial bug report and my issue description both use the

If that's the case, this issue probably shouldn't be closed by your PR?

Right on, I fixed it.

@cpuguy83 Is this issue fixed? It's not entirely clear to me whether moby/moby#40796 applies only to json-file and local or also affects the fluentd log driver. I see from the PR that most of the code changes are to local and json-file, but there are also changes to a generic logger utility that may have fixed this fluentd issue (https://github.com/moby/moby/pull/40796/files#diff-0d16783edb4c661112478f7e13a17694).

It is not fixed for fluentd. The logging utility is a shared implementation of a rotating log file used by

Did the fix for

@flixr, the PR linked above is not in Docker 19.03 (see moby/moby#41130 (review)), but it is in the Docker 20.10 release candidates (GA to be released soon as well).

Any update on GA?

Docker 20.10 was released quite some time ago; is anyone on this thread still running into this with 20.10 or later?

I was running It only happened once.

I confirm this is no longer an issue with

I think another issue, moby/moby#43165, is related to high memory usage, and it has been fixed in 20.10.13: Prevent an OOM when using the “local” logging driver with containers that produce a large amount of log messages moby/moby#43165. I can reproduce the problem by running the following (thanks to @aeriksson):

terminal 1

terminal 2

Expected behavior

dockerd should use less memory

Actual behavior

dockerd uses 4.5GB+ memory

Steps to reproduce the behavior

Not sure. We run multiple servers with docker and all of them experience high memory usage after some time.

I'm happy to provide any debugging logs as needed.

Output of docker version:

Output of docker info:

Additional environment details (AWS, VirtualBox, physical, etc.)
