proposal: add tracing support #3057

Comments

I agree tracing would be helpful. It would also be tremendously helpful to propagate tracing over gRPC to the daemon.

Hi @cpuguy83, thanks for the comment.

Yes, absolutely, I agree. On the client side, I think it is up to users.

I was thinking that the containerd components don't call each other, so tracing on the client side seemed good enough. However, it would be good to add it to the daemon as well.

This is useful because it lets a client receive tracing data propagated from the daemon.

@kevpar - FYI. We have been looking into this for distributed tracing as well, because the runtime v2 shim calls happen out of process, and it would be amazing to flow activity IDs across the gRPC boundaries for log correlation.

/cc @dashpole, who is working on tracing in Kubernetes and has also experimented with tracing down into containerd.

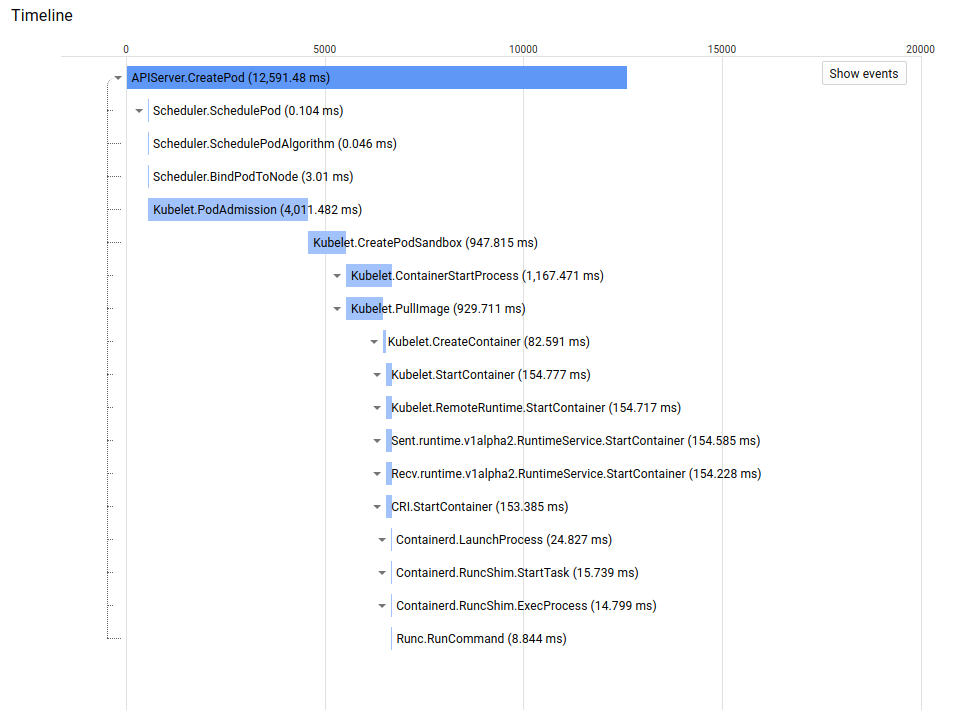

I had an intern last fall who did a PoC of something similar. His containerd code changes are here: master...Monkeyanator:opencensus-tracing. We ended up using OpenCensus instead of OpenTracing because then we didn't need to pick a tracing backend in open-source components such as containerd. You can then run the "OC Agent" to handle pushing traces to a tracing backend, and ship the components independently. Since containerd uses a stripped-down version of gRPC for its shims, we explicitly added the trace context to the API rather than trying to add context propagation back in. If we actually added it, though, we should do that properly. Other than that, adding tracing to containerd was pretty simple. If you are curious how I think this should work in Kubernetes, feel free to check out my proposal: kubernetes/enhancements#650. We used tracing to help debug a slow runc start issue that our autoscaling team sent us, so I definitely think this has value. Here is the trace from the reproduction in just containerd:
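To make the "explicitly added the trace context to the API" approach concrete, here is a minimal Go sketch. It assumes the trace context travels as a W3C Trace Context `traceparent` value (`version-traceid-parentid-flags`). The `TraceContext` and `ShimRequest` types and the helper functions are hypothetical; they are not containerd's shim API or the code in the linked PoC. The parser is simplified and does not enforce every rule of the spec (for example, it does not reject uppercase hex or all-zero IDs).

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"fmt"
	"strings"
)

// TraceContext is a hypothetical message field that carries the caller's
// trace identifiers explicitly in the request body.
type TraceContext struct {
	TraceID  string // 32 hex characters (16 bytes)
	ParentID string // 16 hex characters (8 bytes)
	Sampled  bool
}

// ShimRequest is a hypothetical shim request with an explicit trace field.
type ShimRequest struct {
	ID    string
	Trace *TraceContext
}

// randomHex returns n random bytes encoded as lowercase hex.
func randomHex(n int) string {
	b := make([]byte, n)
	if _, err := rand.Read(b); err != nil {
		panic(err)
	}
	return hex.EncodeToString(b)
}

// Traceparent renders the context in the W3C "traceparent" layout.
func (tc TraceContext) Traceparent() string {
	flags := "00"
	if tc.Sampled {
		flags = "01"
	}
	return fmt.Sprintf("00-%s-%s-%s", tc.TraceID, tc.ParentID, flags)
}

// ParseTraceparent parses a version-00 traceparent string (simplified checks).
func ParseTraceparent(s string) (TraceContext, error) {
	parts := strings.Split(s, "-")
	if len(parts) != 4 || parts[0] != "00" || len(parts[1]) != 32 || len(parts[2]) != 16 || len(parts[3]) != 2 {
		return TraceContext{}, fmt.Errorf("invalid traceparent %q", s)
	}
	for _, p := range parts[1:] {
		if _, err := hex.DecodeString(p); err != nil {
			return TraceContext{}, fmt.Errorf("invalid hex in traceparent %q", s)
		}
	}
	flags, _ := hex.DecodeString(parts[3]) // already validated above
	return TraceContext{TraceID: parts[1], ParentID: parts[2], Sampled: flags[0]&0x01 == 1}, nil
}

func main() {
	// Client side: start a trace and attach it to the request explicitly.
	req := ShimRequest{
		ID:    "task-1",
		Trace: &TraceContext{TraceID: randomHex(16), ParentID: randomHex(8), Sampled: true},
	}
	wire := req.Trace.Traceparent()

	// Shim side: recover the caller's trace context from the request field.
	tc, err := ParseTraceparent(wire)
	fmt.Println(wire, err == nil, tc == *req.Trace)
}
```

The trade-off is that every API that should participate in tracing needs the extra field, whereas transport-level propagation keeps the API unchanged.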

I've spent some time specifically thinking about how to propagate traces over ttrpc.

Sounds good to me.

It seems the industry is moving toward

FYI, I created a rough tracing package here: https://github.com/virtual-kubelet/virtual-kubelet/blob/master/trace/trace.go

In VK we use OpenCensus and logrus, with interfaces for tracing and logging. Those interfaces exist primarily to support logging through a span, not so much to provide multiple backing implementations of tracing. Here is how it works:

```go
ctx, span := trace.StartSpan(ctx)
log.G(ctx).WithField("foo", "bar").Debug("whatever")
```

In this case, the

The godoc might be easier to read: https://godoc.org/github.com/virtual-kubelet/virtual-kubelet/trace

All this to say, I don't think we should worry about what OpenCensus does or doesn't do with respect to logging. We should use whatever is most convenient or has the most community support.

I made a doc for the proposal; please take a look. Thanks! https://docs.google.com/document/d/12l0dEa9W8OSZdUYUNZJZ7LengDlJvO5J2ZLategEdl4/edit?usp=sharing /cc @containerd/containerd-reviewers @containerd/containerd-maintainers

+1, the doc looks good. OC is very flexible, and it's very nice that you can write your own exporters, or just use the agent exporter, without pulling in a ton of dependencies.
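The exporter model mentioned above can be sketched in plain Go: instrumented code depends only on a tiny exporter interface, and backend-specific work lives in exporter implementations (or, with the agent approach, in a separate process). The types below are hypothetical and only loosely modeled on the exporter idea in OpenCensus; they are not the `go.opencensus.io` API.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// SpanData is a hypothetical record of a finished span.
type SpanData struct {
	Name     string
	TraceID  string
	Duration time.Duration
}

// Exporter receives finished spans. Instrumented code only needs this
// interface; backend-specific dependencies stay inside implementations.
type Exporter interface {
	ExportSpan(s *SpanData)
}

var (
	mu        sync.Mutex
	exporters []Exporter
)

// RegisterExporter adds an exporter that will receive every finished span.
func RegisterExporter(e Exporter) {
	mu.Lock()
	defer mu.Unlock()
	exporters = append(exporters, e)
}

// finishSpan hands a completed span to all registered exporters.
func finishSpan(s *SpanData) {
	mu.Lock()
	defer mu.Unlock()
	for _, e := range exporters {
		e.ExportSpan(s)
	}
}

// MemoryExporter keeps spans in memory, e.g. for tests.
type MemoryExporter struct {
	mu    sync.Mutex
	Spans []SpanData
}

// ExportSpan stores a copy of the finished span.
func (m *MemoryExporter) ExportSpan(s *SpanData) {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.Spans = append(m.Spans, *s)
}

func main() {
	mem := &MemoryExporter{}
	RegisterExporter(mem)

	start := time.Now()
	// ... work being traced ...
	finishSpan(&SpanData{Name: "containerd.Pull", TraceID: "t1", Duration: time.Since(start)})

	fmt.Println(len(mem.Spans), mem.Spans[0].Name)
}
```

An agent-style exporter would implement the same interface by forwarding spans over the network, so the traced component never links a specific backend client.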

+1 for the doc. Thanks, @fuweid!

How far along are we with tracing support? Is anyone actively working on it, @kevpar? I would like to help and actively collaborate on tasks. I'd appreciate your comments!

Previously it was unclear whether we should use OpenTracing or OpenCensus, so we got bogged down in that. Now that they have merged into OpenTelemetry, it's much clearer what we should use. I had been intending to work on this again once OpenTelemetry for Go was production ready, but maybe we don't really need to wait for that.

Thanks @kevpar. I will spend some time this week coming up to speed on how we can use OpenTelemetry. I guess coming up with a detailed implementation plan is the next step?

I think the first steps are:

PR #5570 has a few TODO items.

Will containerd pass tracing on to the registry, and downstream to CSI and CRI?

I found that there is still a lot to do. I can complete the trace propagation related to image pull, and I am currently discussing adding tracing in the distribution project.

The remaining items are tracked in #6083.

@estesp SGTM. I removed the milestone and am closing this.

Proposal

There are many component systems here, such as `content`, `snapshotter`, `container` and `tasks`. The containerd package provides a smart client with powerful functionality, something like a Backend for Frontend. For instance, `Client.Pull` is a simple function call, but it already combines several calls to different components. If we can add tracing on the containerd smart client side

Document

To make it easier to discuss, I made a Google Doc for this proposal.

https://docs.google.com/document/d/12l0dEa9W8OSZdUYUNZJZ7LengDlJvO5J2ZLategEdl4/edit?usp=sharing

Comments on it are welcome.
