diff --git a/.devcontainer/Dockerfile b/.devcontainer/Dockerfile

index 7e11288aa3..7ae205d5de 100644

--- a/.devcontainer/Dockerfile

+++ b/.devcontainer/Dockerfile

@@ -1,6 +1,6 @@

# syntax=docker/dockerfile:1.4-labs

-FROM python:3-bullseye

+FROM --platform=linux/amd64 python:3-bullseye

# [Option] Install zsh

ARG INSTALL_ZSH="true"

@@ -12,10 +12,8 @@ ARG ENABLE_NONROOT_DOCKER="true"

ARG USE_MOBY="true"

# [Option] Select CLI version

ARG CLI_VERSION="latest"

-

# Enable new "BUILDKIT" mode for Docker CLI

ENV DOCKER_BUILDKIT=1

-

ENV DEBIAN_FRONTEND=noninteractive

# Install needed packages and setup non-root user. Use a separate RUN statement to add your

@@ -23,16 +21,28 @@ ENV DEBIAN_FRONTEND=noninteractive

ARG USERNAME=automatic

ARG USER_UID=1000

ARG USER_GID=$USER_UID

-COPY library-scripts/*.sh /tmp/library-scripts/

-RUN --mount=type=cache,target=/var/cache/apt \

- --mount=type=cache,target=/var/lib/apt \

- apt-get update \

- && apt-get install -y build-essential software-properties-common vim \

+COPY .devcontainer/library-scripts/*.sh /tmp/library-scripts/

+

+RUN --mount=type=cache,target=/var/lib/apt \

+ --mount=type=cache,target=/var/cache/apt \

+ apt-get update -y \

+ # Remove imagemagick due to https://security-tracker.debian.org/tracker/CVE-2019-10131

+ && apt-get purge -y imagemagick imagemagick-6-common

+

+# install common packages

+RUN --mount=type=cache,target=/var/lib/apt \

+ --mount=type=cache,target=/var/cache/apt \

+ apt-get update -y && apt-get install -y build-essential software-properties-common vim \

&& /bin/bash /tmp/library-scripts/common-debian.sh "${INSTALL_ZSH}" "${USERNAME}" "${USER_UID}" "${USER_GID}" "${UPGRADE_PACKAGES}" "true" "true" \

# Use Docker script from script library to set things up

&& /bin/bash /tmp/library-scripts/docker-debian.sh "${ENABLE_NONROOT_DOCKER}" "/var/run/docker-host.sock" "/var/run/docker.sock" "${USERNAME}" "${USE_MOBY}" "${CLI_VERSION}" \

# Clean up

- && apt-get autoremove -y && apt-get clean -y && rm -rf /var/lib/apt/lists/* /tmp/library-scripts/

+ && rm -rf /var/lib/apt/lists/* /tmp/library-scripts/

+

+COPY requirements/*.txt /tmp/pip-tmp/

+RUN --mount=type=cache,target=/root/.cache/pip \

+ pip install --no-warn-script-location -r /tmp/pip-tmp/dev-requirements.txt -r /tmp/pip-tmp/docs-requirements.txt \

+ && rm -rf /tmp/pip-tmp

# Setting the ENTRYPOINT to docker-init.sh will configure non-root access to

# the Docker socket if "overrideCommand": false is set in devcontainer.json.

diff --git a/.devcontainer/devcontainer.json b/.devcontainer/devcontainer.json

index 8c3364e937..3cc51c5f0b 100644

--- a/.devcontainer/devcontainer.json

+++ b/.devcontainer/devcontainer.json

@@ -3,6 +3,7 @@

{

"name": "BentoML",

"dockerFile": "Dockerfile",

+ "context": "..",

"containerEnv": {

"BENTOML_DEBUG": "True",

"BENTOML_BUNDLE_LOCAL_BUILD": "True",

@@ -22,15 +23,16 @@

// Configure properties specific to VS Code.

"vscode": {

"extensions": [

- "ms-azuretools.vscode-docker",

- "ms-python.vscode-pylance",

"ms-python.python",

+ "ms-python.vscode-pylance",

+ "ms-azuretools.vscode-docker",

"ms-vsliveshare.vsliveshare",

"ms-python.black-formatter",

"ms-python.pylint",

"samuelcolvin.jinjahtml",

"GitHub.copilot",

- "esbenp.prettier-vscode"

+ "esbenp.prettier-vscode",

+ "VisualStudioExptTeam.intellicode-api-usage-examples"

],

"settings": {

"files.watcherExclude": {

@@ -52,18 +54,14 @@

"[jsonc]": {

"editor.defaultFormatter": "esbenp.prettier-vscode"

},

- "editor.minimap.enabled": true,

+ "editor.minimap.enabled": false,

"editor.formatOnSave": true,

- "editor.wordWrapColumn": 88,

+ "editor.wordWrapColumn": 88

}

}

},

// Use 'forwardPorts' to make a list of ports inside the container available locally.

- "forwardPorts": [3000, 8080, 9000, 9090],

- // Link some default configs to codespace container.

- "postCreateCommand": "bash ./.devcontainer/lifecycle/post-create",

+ "forwardPorts": [3000, 3001],

// install BentoML and tools

- "postStartCommand": "bash ./.devcontainer/lifecycle/post-start",

- // Comment out to connect as root instead. More info: https://aka.ms/vscode-remote/containers/non-root.

- "remoteUser": "vscode"

+ "postStartCommand": "bash ./.devcontainer/lifecycle/post-start"

}

diff --git a/.devcontainer/lifecycle/post-create b/.devcontainer/lifecycle/post-create

deleted file mode 100755

index 5e72c9360a..0000000000

--- a/.devcontainer/lifecycle/post-create

+++ /dev/null

@@ -1,3 +0,0 @@

-#!/usr/bin/env bash

-

-pip install --user -r requirements/dev-requirements.txt

diff --git a/.devcontainer/lifecycle/post-start b/.devcontainer/lifecycle/post-start

index c36ed88eb7..6edd411cc3 100755

--- a/.devcontainer/lifecycle/post-start

+++ b/.devcontainer/lifecycle/post-start

@@ -7,4 +7,16 @@ git config --global pull.ff only

git fetch upstream --tags && git pull

# install editable wheels & tools for bentoml

-pip install --user -e ".[tracing]" --isolated

+pip install -e ".[tracing,grpc]" --verbose

+pip install -r requirements/dev-requirements.txt

+pip install -U "grpcio-tools>=1.41.0" "mypy-protobuf>=3.3.0"

+# generate stubs

+OPTS=(-I. --grpc_python_out=. --python_out=. --mypy_out=. --mypy_grpc_out=.)

+python -m grpc_tools.protoc "${OPTS[@]}" bentoml/grpc/v1alpha1/service.proto

+python -m grpc_tools.protoc "${OPTS[@]}" bentoml/grpc/v1alpha1/service_test.proto

+# uninstall the broken protobuf type stubs (types-protobuf)

+pip uninstall -y types-protobuf

+

+# setup docker buildx

+docker buildx install

+docker buildx ls | grep bentoml-builder &>/dev/null || docker buildx create --use --name bentoml-builder --platform linux/amd64,linux/arm64 &>/dev/null
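The buildx step in `post-start` is idempotent: it creates `bentoml-builder` only when `docker buildx ls` does not already mention it. Below is a minimal Python sketch of the same check. The helper names (`builder_listed`, `create_command`, `ensure_builder`) are hypothetical and not part of this change, and the sketch skips `docker buildx install`. Like `grep`, it does a plain substring match, so a builder named, say, `bentoml-builder-old` would also count as present.

```python
from __future__ import annotations

import shutil
import subprocess

# Same builder name and platforms as the post-start script.
BUILDER_NAME = "bentoml-builder"
PLATFORMS = ("linux/amd64", "linux/arm64")


def builder_listed(ls_output: str, name: str) -> bool:
    """Mirror `grep <name>`: true if any line of `docker buildx ls` contains name."""
    return any(name in line for line in ls_output.splitlines())


def create_command(name: str, platforms: tuple[str, ...]) -> list[str]:
    """Argument list equivalent to `docker buildx create --use --name ... --platform ...`."""
    return ["docker", "buildx", "create", "--use", "--name", name,
            "--platform", ",".join(platforms)]


def ensure_builder(name: str = BUILDER_NAME,
                   platforms: tuple[str, ...] = PLATFORMS) -> None:
    """Create the builder only if it is not listed yet (hypothetical helper)."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    listing = subprocess.run(["docker", "buildx", "ls"],
                             capture_output=True, text=True, check=True).stdout
    if not builder_listed(listing, name):
        subprocess.run(create_command(name, platforms), check=True)
```

Calling `ensure_builder()` twice in a row should create the builder at most once, which is the property the `||` in the shell version provides.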

diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

index 76f6387247..3a56683e15 100644

--- a/.github/workflows/ci.yml

+++ b/.github/workflows/ci.yml

@@ -257,9 +257,15 @@ jobs:

path: ${{ steps.cache-dir.outputs.dir }}

key: ${{ runner.os }}-tests-${{ hashFiles('requirements/tests-requirements.txt') }}

+ # Simulate ./scripts/generate_grpc_stubs.sh

+ - name: Generate gRPC stubs

+ run: |

+ pip install protobuf==3.19.4 "grpcio-tools==1.41"

+ find bentoml/grpc/v1alpha1 -type f -name "*.proto" -exec python -m grpc_tools.protoc -I. --grpc_python_out=. --python_out=. "{}" \;

+

- name: Install dependencies

run: |

- pip install -e .

+ pip install -e ".[grpc]"

pip install -r requirements/tests-requirements.txt

pip install -r tests/e2e/bento_server_general_features/requirements.txt
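The CI step runs `python -m grpc_tools.protoc` once per `.proto` file found under `bentoml/grpc/v1alpha1`. A minimal Python sketch of the same loop follows, assuming it is run from the repository root (because of `-I.` and the relative paths). `protoc_commands` and `generate_stubs` are hypothetical names, not the contents of `./scripts/generate_grpc_stubs.sh`, and `sys.executable` stands in for `python`.

```python
from __future__ import annotations

import subprocess
import sys
from pathlib import Path

# Same directory and protoc options as the CI step; paths are relative to the repo root.
PROTO_DIR = Path("bentoml/grpc/v1alpha1")
PROTOC_OPTS = ["-I.", "--grpc_python_out=.", "--python_out=."]


def protoc_commands(proto_dir: Path = PROTO_DIR) -> list[list[str]]:
    """Build one protoc invocation per .proto file, like `find ... -exec`."""
    return [
        [sys.executable, "-m", "grpc_tools.protoc", *PROTOC_OPTS, proto.as_posix()]
        for proto in sorted(proto_dir.rglob("*.proto"))
        if proto.is_file()  # `find -type f` skips directories
    ]


def generate_stubs(proto_dir: Path = PROTO_DIR) -> None:
    """Run each command; requires grpcio-tools to be installed."""
    for cmd in protoc_commands(proto_dir):
        subprocess.run(cmd, check=True)
```

Building the argument lists separately from running them keeps the file discovery testable without `grpcio-tools` installed.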

diff --git a/.gitignore b/.gitignore

index a2baefb2a7..69360490f2 100644

--- a/.gitignore

+++ b/.gitignore

@@ -114,3 +114,10 @@ typings

# test files

catboost_info

+

+# ignore pyvenv

+pyvenv.cfg

+

+# generated stubs that are included in the distribution

+*_pb2*.py

+*_pb2*.pyi
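The two new patterns are meant to cover every file the stub generation emits (`--python_out`, `--grpc_python_out`, `--mypy_out`, `--mypy_grpc_out`) while leaving the `.proto` sources tracked. A minimal sketch that checks this, approximating git's rule that a slash-free pattern is matched against the basename; `fnmatchcase` is used because git matching is case-sensitive by default, and `is_ignored_stub` is a hypothetical name.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# The two patterns this change adds to .gitignore.
STUB_PATTERNS = ("*_pb2*.py", "*_pb2*.pyi")


def is_ignored_stub(path: str) -> bool:
    """Approximate git's check: a slash-free pattern matches against the basename."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in STUB_PATTERNS)


# Files generated for service.proto, plus the source itself.
for candidate in (
    "bentoml/grpc/v1alpha1/service_pb2.py",
    "bentoml/grpc/v1alpha1/service_pb2_grpc.py",
    "bentoml/grpc/v1alpha1/service_pb2.pyi",
    "bentoml/grpc/v1alpha1/service_pb2_grpc.pyi",
    "bentoml/grpc/v1alpha1/service.proto",
):
    status = "ignored" if is_ignored_stub(candidate) else "not ignored"
    print(f"{candidate}: {status}")
```

For an authoritative answer on a real checkout, `git check-ignore -v <path>` reports which pattern, if any, matched.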

diff --git a/DEVELOPMENT.md b/DEVELOPMENT.md

index a1370404be..15a034c82d 100644

--- a/DEVELOPMENT.md

+++ b/DEVELOPMENT.md

@@ -18,22 +18,26 @@ If you are interested in proposing a new feature, make sure to create a new feat

2. Fork the BentoML project on [GitHub](https://github.com/bentoml/BentoML).

3. Clone the source code from your fork of BentoML's GitHub repository:

+

```bash

git clone git@github.com:username/BentoML.git && cd BentoML

```

4. Add the BentoML upstream remote to your local BentoML clone:

+

```bash

git remote add upstream git@github.com:bentoml/BentoML.git

```

5. Configure git to pull from the upstream remote:

+

```bash

git switch main # ensure you're on the main branch

git branch --set-upstream-to=upstream/main

```

6. Install BentoML with pip in editable mode:

+

```bash

pip install -e .

```

@@ -41,11 +45,13 @@ If you are interested in proposing a new feature, make sure to create a new feat

This installs BentoML in an editable state. The changes you make will automatically be reflected without reinstalling BentoML.

7. Install the BentoML development requirements:

+

```bash

pip install -r ./requirements/dev-requirements.txt

```

8. Test the BentoML installation either with `bash`:

+

```bash

bentoml --version

```

@@ -62,65 +68,72 @@ If you are interested in proposing a new feature, make sure to create a new feat

with VS Code

1. Confirm that you have the following installed:

- - [Python3.7+](https://www.python.org/downloads/)

- - VS Code with the [Python](https://marketplace.visualstudio.com/items?itemName=ms-python.python) and [Pylance](https://marketplace.visualstudio.com/items?itemName=ms-python.vscode-pylance) extensions

+

+ - [Python 3.7+](https://www.python.org/downloads/)

+ - VS Code with the [Python](https://marketplace.visualstudio.com/items?itemName=ms-python.python) and [Pylance](https://marketplace.visualstudio.com/items?itemName=ms-python.vscode-pylance) extensions

2. Fork the BentoML project on [GitHub](https://github.com/bentoml/BentoML).

3. Clone the GitHub repository:

- 1. Open the command palette with Ctrl+Shift+P and type in 'clone'.

- 2. Select 'Git: Clone(Recursive)'.

- 3. Clone BentoML.

+

+ 1. Open the command palette with Ctrl+Shift+P and type in 'clone'.

+ 2. Select 'Git: Clone (Recursive)'.

+ 3. Clone BentoML.

4. Add an BentoML upstream remote:

- 1. Open the command palette and enter 'add remote'.

- 2. Select 'Git: Add Remote'.

- 3. Press enter to select 'Add remote' from GitHub.

- 4. Enter https://github.com/bentoml/BentoML.git to select the BentoML repository.

- 5. Name your remote 'upstream'.

+

+ 1. Open the command palette and enter 'add remote'.

+ 2. Select 'Git: Add Remote'.

+ 3. Press Enter to select 'Add remote from GitHub'.

+ 4. Enter https://github.com/bentoml/BentoML.git to select the BentoML repository.

+ 5. Name your remote 'upstream'.

5. Pull from the BentoML upstream remote to your main branch:

- 1. Open the command palette and enter 'checkout'.

- 2. Select 'Git: Checkout to...'

- 3. Choose 'main' to switch to the main branch.

- 4. Open the command palette again and enter 'pull from'.

- 5. Click on 'Git: Pull from...'

- 6. Select 'upstream'.

+

+ 1. Open the command palette and enter 'checkout'.

+ 2. Select 'Git: Checkout to...'

+ 3. Choose 'main' to switch to the main branch.

+ 4. Open the command palette again and enter 'pull from'.

+ 5. Click on 'Git: Pull from...'

+ 6. Select 'upstream'.

6. Open a new terminal by clicking the Terminal dropdown at the top of the window, followed by the 'New Terminal' option. Next, add a virtual environment with this command:

```bash

python -m venv .venv

```

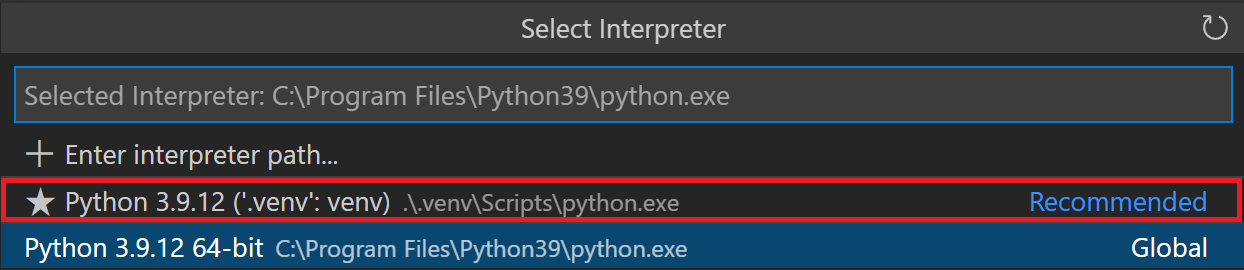

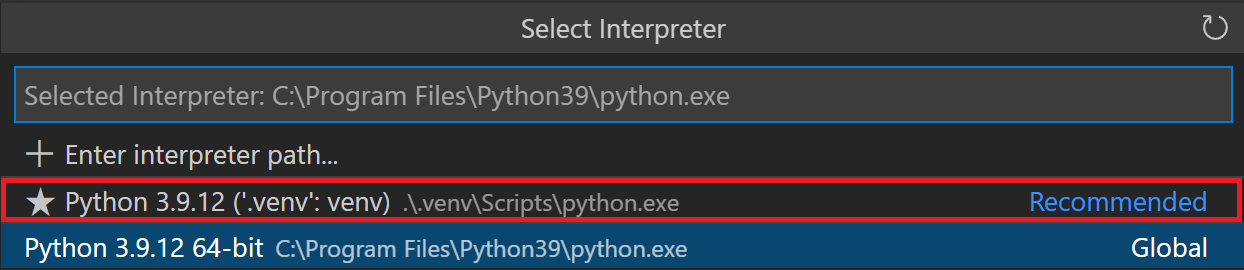

7. Click yes if a popup suggests switching to the virtual environment. Otherwise, go through these steps:

- 1. Open any python file in the directory.

- 2. Select the interpreter selector on the blue status bar at the bottom of the editor.

-

-

- 3. Switch to the path that includes .venv from the dropdown at the top.

-

+ 1. Open any Python file in the directory.

+ 2. Select the interpreter selector on the blue status bar at the bottom of the editor.

+

+

+ 3. Switch to the path that includes .venv from the dropdown at the top.

+

8. Update your PowerShell execution policies. Win+x followed by the 'a' key opens the admin Windows PowerShell. Enter the following command to allow the virtual environment activation script to run:

```

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

```

-using the Command Line

1. Make sure you're on the main branch.

+

```bash

git switch main

```

2. Use the git pull command to retrieve content from the BentoML Github repository.

+

```bash

git pull

```

3. Create a new branch and switch to it.

+

```bash

git switch -c my-new-branch-name

```

@@ -128,11 +141,13 @@ If you are interested in proposing a new feature, make sure to create a new feat

4. Make your changes!

5. Use the git add command to save the state of files you have changed.

+

```bash

git add

```

6. Commit your changes.

+

```bash

git commit

```

@@ -141,59 +156,71 @@ If you are interested in proposing a new feature, make sure to create a new feat

```bash

git push

```

-using VS Code

1. Switch to the main branch:

- 1. Open the command palette with Ctrl+Shift+P.

- 2. Search for 'Git: Checkout to...'

- 3. Select 'main'.

+

+ 1. Open the command palette with Ctrl+Shift+P.

+ 2. Search for 'Git: Checkout to...'

+ 3. Select 'main'.

2. Pull from the upstream remote:

- 1. Open the command palette.

- 2. Enter and select 'Git: Pull...'

- 3. Select 'upstream'.

+

+ 1. Open the command palette.

+ 2. Enter and select 'Git: Pull...'

+ 3. Select 'upstream'.

3. Create and change to a new branch:

- 1. Type in 'Git: Create Branch...' in the command palette.

- 2. Enter a branch name.

+

+ 1. Type in 'Git: Create Branch...' in the command palette.

+ 2. Enter a branch name.

4. Make your changes!

5. Stage all your changes:

- 1. Enter and select 'Git: Stage All Changes...' in the command palette.

+

+ 1. Enter and select 'Git: Stage All Changes...' in the command palette.

6. Commit your changes:

- 1. Open the command palette and enter 'Git: Commit'.

+

+ 1. Open the command palette and enter 'Git: Commit'.

7. Push your changes:

- 1. Enter and select 'Git: Push...' in the command palette.

+ 1. Enter and select 'Git: Push...' in the command palette.