diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

index bcdd04c7fe5..531f2c30f94 100644

--- a/.github/workflows/ci.yml

+++ b/.github/workflows/ci.yml

@@ -77,12 +77,6 @@ jobs:

run: |

npm install -g npm@^7 pyright

- - name: Setup protobuf tools

- uses: bufbuild/buf-setup-action@v1.6.0

- if: success()

- with:

- github_token: ${{ github.token }}

-

- name: Cache pip dependencies

uses: actions/cache@v3

id: cache-pip

@@ -99,8 +93,6 @@ jobs:

run: make ci-format

- name: Lint check

run: make ci-lint

- - name: Proto check

- run: make ci-proto

- name: Type check

run: make ci-pyright

diff --git a/.readthedocs.yml b/.readthedocs.yaml

similarity index 60%

rename from .readthedocs.yml

rename to .readthedocs.yaml

index 94e742e486d..112e083ea9f 100644

--- a/.readthedocs.yml

+++ b/.readthedocs.yaml

@@ -1,25 +1,18 @@

-# .readthedocs.yml

-# Read the Docs configuration file

# See https://docs.readthedocs.io/en/stable/config-file/v2.html for details

-# Required

version: 2

build:

- os: "ubuntu-20.04"

+ os: "ubuntu-22.04"

tools:

python: "3.9"

jobs:

- post_checkout:

- - git fetch --unshallow

pre_install:

- git update-index --assume-unchanged docs/source/conf.py

-# Build documentation in the docs/ directory with Sphinx

sphinx:

configuration: docs/source/conf.py

-# Optionally set the version of Python and requirements required to build your docs

python:

install:

- requirements: requirements/docs-requirements.txt

diff --git a/DEVELOPMENT.md b/DEVELOPMENT.md

index 78e4b95a487..5b6887a12ce 100644

--- a/DEVELOPMENT.md

+++ b/DEVELOPMENT.md

@@ -18,22 +18,26 @@ If you are interested in proposing a new feature, make sure to create a new feat

2. Fork the BentoML project on [GitHub](https://github.com/bentoml/BentoML).

3. Clone the source code from your fork of BentoML's GitHub repository:

+

```bash

git clone git@github.com:username/BentoML.git && cd BentoML

```

4. Add the BentoML upstream remote to your local BentoML clone:

+

```bash

git remote add upstream git@github.com:bentoml/BentoML.git

```

5. Configure git to pull from the upstream remote:

+

```bash

git switch main # ensure you're on the main branch

git branch --set-upstream-to=upstream/main

```

6. Install BentoML with pip in editable mode:

+

```bash

pip install -e .

```

@@ -41,11 +45,13 @@ If you are interested in proposing a new feature, make sure to create a new feat

This installs BentoML in an editable state. The changes you make will automatically be reflected without reinstalling BentoML.

7. Install the BentoML development requirements:

+

```bash

pip install -r ./requirements/dev-requirements.txt

```

8. Test the BentoML installation either with `bash`:

+

```bash

bentoml --version

```

@@ -62,65 +68,72 @@ If you are interested in proposing a new feature, make sure to create a new feat

with VS Code

1. Confirm that you have the following installed:

- - [Python3.7+](https://www.python.org/downloads/)

- - VS Code with the [Python](https://marketplace.visualstudio.com/items?itemName=ms-python.python) and [Pylance](https://marketplace.visualstudio.com/items?itemName=ms-python.vscode-pylance) extensions

+

+ - [Python3.7+](https://www.python.org/downloads/)

+ - VS Code with the [Python](https://marketplace.visualstudio.com/items?itemName=ms-python.python) and [Pylance](https://marketplace.visualstudio.com/items?itemName=ms-python.vscode-pylance) extensions

2. Fork the BentoML project on [GitHub](https://github.com/bentoml/BentoML).

3. Clone the GitHub repository:

- 1. Open the command palette with Ctrl+Shift+P and type in 'clone'.

- 2. Select 'Git: Clone(Recursive)'.

- 3. Clone BentoML.

+

+ 1. Open the command palette with Ctrl+Shift+P and type in 'clone'.

+ 2. Select 'Git: Clone(Recursive)'.

+ 3. Clone BentoML.

4. Add a BentoML upstream remote:

- 1. Open the command palette and enter 'add remote'.

- 2. Select 'Git: Add Remote'.

- 3. Press enter to select 'Add remote' from GitHub.

- 4. Enter https://github.com/bentoml/BentoML.git to select the BentoML repository.

- 5. Name your remote 'upstream'.

+

+ 1. Open the command palette and enter 'add remote'.

+ 2. Select 'Git: Add Remote'.

+ 3. Press enter to select 'Add remote' from GitHub.

+ 4. Enter https://github.com/bentoml/BentoML.git to select the BentoML repository.

+ 5. Name your remote 'upstream'.

5. Pull from the BentoML upstream remote to your main branch:

- 1. Open the command palette and enter 'checkout'.

- 2. Select 'Git: Checkout to...'

- 3. Choose 'main' to switch to the main branch.

- 4. Open the command palette again and enter 'pull from'.

- 5. Click on 'Git: Pull from...'

- 6. Select 'upstream'.

+

+ 1. Open the command palette and enter 'checkout'.

+ 2. Select 'Git: Checkout to...'

+ 3. Choose 'main' to switch to the main branch.

+ 4. Open the command palette again and enter 'pull from'.

+ 5. Click on 'Git: Pull from...'

+ 6. Select 'upstream'.

6. Open a new terminal by clicking the Terminal dropdown at the top of the window, followed by the 'New Terminal' option. Next, add a virtual environment with this command:

```bash

python -m venv .venv

```

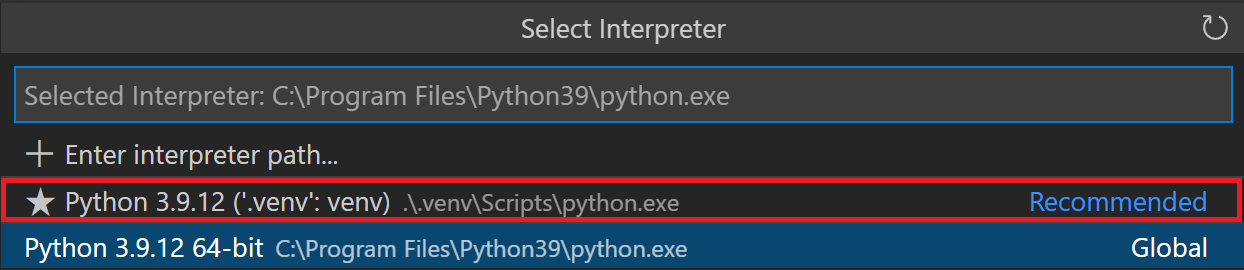

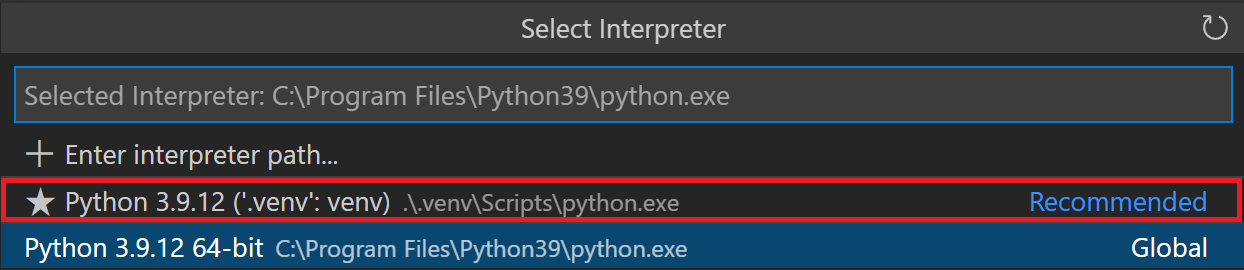

7. Click yes if a popup suggests switching to the virtual environment. Otherwise, go through these steps:

- 1. Open any python file in the directory.

- 2. Select the interpreter selector on the blue status bar at the bottom of the editor.

-

-

- 3. Switch to the path that includes .venv from the dropdown at the top.

-

+ 1. Open any Python file in the directory.

+ 2. Click the interpreter selector in the blue status bar at the bottom of the editor.

+

+

+ 3. Switch to the path that includes .venv from the dropdown at the top.

+

8. Update your PowerShell execution policies. Win+x followed by the 'a' key opens the admin Windows PowerShell. Enter the following command to allow the virtual environment activation script to run:

```

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

```

-using the Command Line

1. Make sure you're on the main branch.

+

```bash

git switch main

```

2. Use the git pull command to retrieve content from the BentoML GitHub repository.

+

```bash

git pull

```

3. Create a new branch and switch to it.

+

```bash

git switch -c my-new-branch-name

```

@@ -128,11 +141,13 @@ If you are interested in proposing a new feature, make sure to create a new feat

4. Make your changes!

5. Use the git add command to save the state of files you have changed.

+

```bash

git add .

```

6. Commit your changes.

+

```bash

git commit

```

@@ -141,46 +156,52 @@ If you are interested in proposing a new feature, make sure to create a new feat

```bash

git push

```

-using VS Code

1. Switch to the main branch:

- 1. Open the command palette with Ctrl+Shift+P.

- 2. Search for 'Git: Checkout to...'

- 3. Select 'main'.

+

+ 1. Open the command palette with Ctrl+Shift+P.

+ 2. Search for 'Git: Checkout to...'

+ 3. Select 'main'.

2. Pull from the upstream remote:

- 1. Open the command palette.

- 2. Enter and select 'Git: Pull...'

- 3. Select 'upstream'.

+

+ 1. Open the command palette.

+ 2. Enter and select 'Git: Pull...'

+ 3. Select 'upstream'.

3. Create and change to a new branch:

- 1. Type in 'Git: Create Branch...' in the command palette.

- 2. Enter a branch name.

+

+ 1. Type in 'Git: Create Branch...' in the command palette.

+ 2. Enter a branch name.

4. Make your changes!

5. Stage all your changes:

- 1. Enter and select 'Git: Stage All Changes...' in the command palette.

+

+ 1. Enter and select 'Git: Stage All Changes...' in the command palette.

6. Commit your changes:

- 1. Open the command palette and enter 'Git: Commit'.

+

+ 1. Open the command palette and enter 'Git: Commit'.

7. Push your changes:

- 1. Enter and select 'Git: Push...' in the command palette.

+ 1. Enter and select 'Git: Push...' in the command palette.