c++ client Producer with name 'xxx-18-31667' is already connected to topic #13289

Comments

|

I have checked the C++ client, and the error does not appear to be caused by an issue like apache/pulsar-client-go#639. See pulsar/pulsar-client-cpp/lib/ProducerImpl.cc, lines 68 to 70 in 8625ffe

The |

|

And I simulated the reconnection by modifying the SDK implementation just now.

diff --git a/pulsar-client-cpp/lib/ClientConnection.cc b/pulsar-client-cpp/lib/ClientConnection.cc
index 3ad6f4062f4..43a3cdc83ce 100644
--- a/pulsar-client-cpp/lib/ClientConnection.cc
+++ b/pulsar-client-cpp/lib/ClientConnection.cc
@@ -49,6 +49,8 @@ static const uint32_t DefaultBufferSize = 64 * 1024;
 static const int KeepAliveIntervalInSeconds = 30;
+static std::atomic_int reconnect_{0};
+
 // Convert error codes from protobuf to client API Result
 static Result getResult(ServerError serverError) {
     switch (serverError) {
@@ -805,6 +807,12 @@ void ClientConnection::handleIncomingCommand() {
         // Handle normal commands
         switch (incomingCmd_.type()) {
             case BaseCommand::SEND_RECEIPT: {
+                reconnect_++;
+                if (reconnect_ == 3) {
+                    LOG_INFO(cnxString_ << " trigger reconnection manually");
+                    close();
+                    return;
+                }
                 const CommandSendReceipt& sendReceipt = incomingCmd_.send_receipt();
                 int producerId = sendReceipt.producer_id();
                 uint64_t sequenceId = sendReceipt.sequence_id();
@@ -812,8 +820,9 @@ void ClientConnection::handleIncomingCommand() {
                 MessageId messageId = MessageId(messageIdData.partition(), messageIdData.ledgerid(),
                                                 messageIdData.entryid(), messageIdData.batch_index());
-                LOG_DEBUG(cnxString_ << "Got receipt for producer: " << producerId
-                                     << " -- msg: " << sequenceId << "-- message id: " << messageId);
+                LOG_INFO(cnxString_ << "Got receipt for producer: " << producerId
+                                    << " -- msg: " << sequenceId << "-- message id: " << messageId
+                                    << " | reconnect_: " << reconnect_);
                 Lock lock(mutex_);
                 ProducersMap::iterator it = producers_.find(producerId);
diff --git a/pulsar-client-cpp/lib/ProducerImpl.cc b/pulsar-client-cpp/lib/ProducerImpl.cc
index f81e205475d..1ee938116d4 100644
--- a/pulsar-client-cpp/lib/ProducerImpl.cc
+++ b/pulsar-client-cpp/lib/ProducerImpl.cc
@@ -150,6 +150,8 @@ void ProducerImpl::connectionOpened(const ClientConnectionPtr& cnx) {
     SharedBuffer cmd = Commands::newProducer(topic_, producerId_, producerName_, requestId,
                                              conf_.getProperties(), conf_.getSchema(), epoch_,
                                              userProvidedProducerName_, conf_.isEncryptionEnabled());
+    LOG_INFO(getName() << " newProducer userProvidedProducerName_: " << userProvidedProducerName_
+                       << ", producerName_: " << producerName_);
     cnx->sendRequestWithId(cmd, requestId)
         .addListener(std::bind(&ProducerImpl::handleCreateProducer, shared_from_this(), cnx,
                                std::placeholders::_1, std::placeholders::_2));
@@ -191,6 +193,8 @@ void ProducerImpl::handleCreateProducer(const ClientConnectionPtr& cnx, Result r
         producerName_ = responseData.producerName;
         schemaVersion_ = responseData.schemaVersion;
         producerStr_ = "[" + topic_ + ", " + producerName_ + "] ";
+        LOG_INFO(getName() << " handleCreateProducer userProvidedProducerName_: " << userProvidedProducerName_
+                           << ", producerName_: " << producerName_);
         if (lastSequenceIdPublished_ == -1 && conf_.getInitialSequenceId() == -1) {
             lastSequenceIdPublished_ = responseData.lastSequenceId;

That is, a reconnection is triggered when the third message is sent. I also added some logs for debugging. Here is the output:

From the following lines:

We can see

I think there is something wrong on the broker side. When

public boolean isSuccessorTo(Producer other) {
    return Objects.equals(producerName, other.producerName)
            && Objects.equals(topic, other.topic)
            && producerId == other.producerId
            && Objects.equals(cnx, other.cnx) // the connections are not the same
            && other.getEpoch() < epoch; // the epochs are not the same
}

The code is from the latest master; you could also check your own Pulsar source code.
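To make the effect of the `cnx` comparison concrete, here is a minimal, self-contained sketch. It is not broker code: `SuccessorCheckSketch`, `ProducerInfo`, and the string connection labels are hypothetical stand-ins, and only the boolean expression is copied from `isSuccessorTo` above. It shows that a producer with the same name, topic, and id and a higher epoch is still not treated as a successor when it arrives on a different connection, which is the situation after a reconnection.

```java
import java.util.Objects;

public class SuccessorCheckSketch {
    // Hypothetical stand-in for the broker-side Producer: only the fields the check reads.
    static final class ProducerInfo {
        final String producerName;
        final String topic;
        final long producerId;
        final Object cnx; // stand-in for the connection object
        final long epoch;

        ProducerInfo(String producerName, String topic, long producerId, Object cnx, long epoch) {
            this.producerName = producerName;
            this.topic = topic;
            this.producerId = producerId;
            this.cnx = cnx;
            this.epoch = epoch;
        }

        long getEpoch() {
            return epoch;
        }

        // Same boolean expression as the snippet quoted above.
        boolean isSuccessorTo(ProducerInfo other) {
            return Objects.equals(producerName, other.producerName)
                    && Objects.equals(topic, other.topic)
                    && producerId == other.producerId
                    && Objects.equals(cnx, other.cnx)
                    && other.getEpoch() < epoch;
        }
    }

    public static void main(String[] args) {
        ProducerInfo old = new ProducerInfo("xxx-18-31667", "my-topic", 0L, "cnx-A", 0L);
        // Higher epoch on the same connection: accepted as a successor.
        ProducerInfo sameCnx = new ProducerInfo("xxx-18-31667", "my-topic", 0L, "cnx-A", 1L);
        // Higher epoch on a new connection (as after a reconnect): rejected by the cnx comparison.
        ProducerInfo newCnx = new ProducerInfo("xxx-18-31667", "my-topic", 0L, "cnx-B", 1L);

        System.out.println(sameCnx.isSuccessorTo(old)); // true
        System.out.println(newCnx.isSuccessorTo(old));  // false
    }
}
```

Whether this check is the actual cause of the reported error depends on the rest of the broker logic, which the sketch does not model.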

|

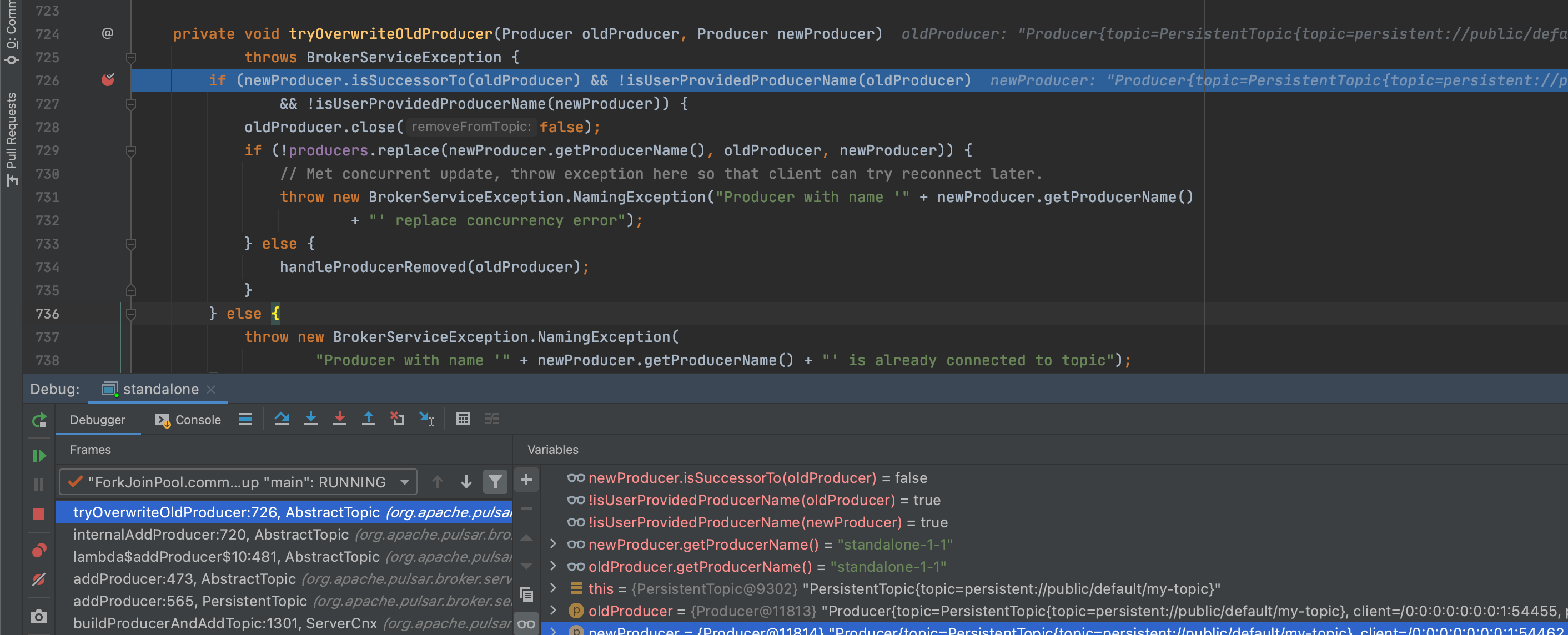

For example, the image above is the result after I modified

@Override
public void removeProducer(Producer producer) {
    checkArgument(producer.getTopic() == this);
    //if (producers.remove(producer.getProducerName(), producer)) {
    //    handleProducerRemoved(producer);
    //}
}
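The experiment above can be illustrated with a small sketch of a name-keyed registry. This is an assumption-laden model, not the broker implementation: `ProducerRegistrySketch`, `addProducer`, and the string values are hypothetical, and registering with `putIfAbsent` is assumed here for illustration. The two-argument `remove(key, value)` matches the call that was commented out. If the old entry is never removed, a producer reconnecting under the same name is rejected.

```java
import java.util.concurrent.ConcurrentHashMap;

public class ProducerRegistrySketch {
    // Hypothetical registry keyed by producer name; the values stand in for producer objects.
    static final ConcurrentHashMap<String, String> producers = new ConcurrentHashMap<>();

    // Returns true if the name was free and the producer was registered (assumed behaviour).
    static boolean addProducer(String name, String producer) {
        return producers.putIfAbsent(name, producer) == null;
    }

    // Two-argument remove: removes the entry only if the name still maps to this producer.
    static boolean removeProducer(String name, String producer) {
        return producers.remove(name, producer);
    }

    public static void main(String[] args) {
        System.out.println(addProducer("xxx-18-31667", "producer@cnx-A")); // true
        // The old entry is still present, so the reconnecting producer is rejected.
        System.out.println(addProducer("xxx-18-31667", "producer@cnx-B")); // false
        // Once the old entry is removed, the same name can be registered again.
        System.out.println(removeProducer("xxx-18-31667", "producer@cnx-A")); // true
        System.out.println(addProducer("xxx-18-31667", "producer@cnx-B")); // true
    }
}
```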

|

I think it's the same problem. I have done some investigation in this issue: #13342

|

The issue had no activity for 30 days, mark with Stale label. |

|


I'm going to close this issue for the same justification provided here #13342 (comment). It's likely that this issue was solved in a subsequent release of Pulsar.

Describe the bug

The C++ producer may sometimes block, and from the broker logs we can see
